RealTechTalk (RTT) - Linux/Server Administration/Related

We have years of knowledge with technology, especially in the IT (Information Technology) industry.

realtechtalk.com will always have fresh and useful information on a variety of subjects from Graphic Design, Server Administration, Web Hosting Industry and much more.

This site will specialize in unique topics and problems faced by web hosts, Unix/Linux administrators, web developers, computer technicians, hardware, networking, scripting, web design and much more. The aim of this site is to explain common problems and solutions in a simple way. Forums are ineffective because they have a lot of talk, but it's hard to find the answer you're looking for, and as we know, the answer is usually not there. No one has time to scour the net for forums and read pages of irrelevant information on different forums/threads. RTT just gives you what you're looking for.

Recommended SFP+ to RJ45 Adapter Module for Switch Juniper Cisco Ubiquiti TP-Link etc...

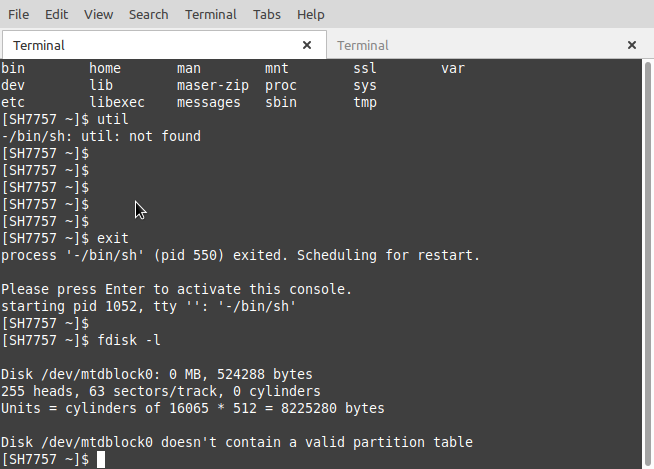

I am mainly used to the enterprise, where most connections use some "normal" kind of fiber module, e.g. SFP+, QSFP+, SFP28 or QSFP28. Those modules are almost foolproof, run cool and are reliable. Like most admins, I prefer to use my own hardware for routing and switching rather than the often poor equipment provided by the ISP, especially when I already have enterprise hardware that will be more reliable.

Normally you would just terminate the fiber optic link on an SFP or SFP+ module and plug it directly into your firewall, router or switch, cutting out the extra power usage, the insecure ISP device and another point of failure.

But on a lot of home and office connections faster than 1.5G, from ISPs like Telus, AT&T, most ISPs in Japan etc..., the ISP no longer uses standard GPON, which simply registers via the serial number of the module that the ISP has on file.

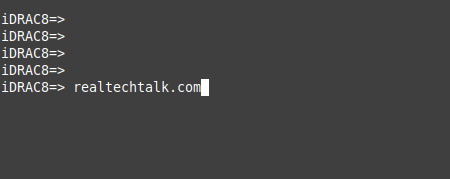

Instead you need an extra and, in my view, unnecessary device called the NAH (Network Access Hub), which uses XGSPON and performs the authentication itself. Plugging an XGSPON SFP+ module into your router/switch will not work the way a normal GPON module does.

Solution 1 - buy an expensive XGSPON module and hack and modify it

After more testing, I almost think that Solution 1 may be more reliable in some cases. Yes, there is the chance of the authentication parameters changing, which would instantly take you offline. However, many SFP+ to RJ45 adapters are known to be unreliable and die randomly. If your XGSPON module doesn't die, I predict it will probably be more reliable than the adapter method in Solution 2.

There are some solutions that modify XGSPON modules from fs.com so you can use them as normal, but the modules are pricey and this can be risky if the authentication mode or details change in the future. In plain English, you could be away from home and need access to your home network, but an ISP update could render your internet access useless. Here is the procedure on GitHub to hack the XGSPON SFP+ module from fs.com.

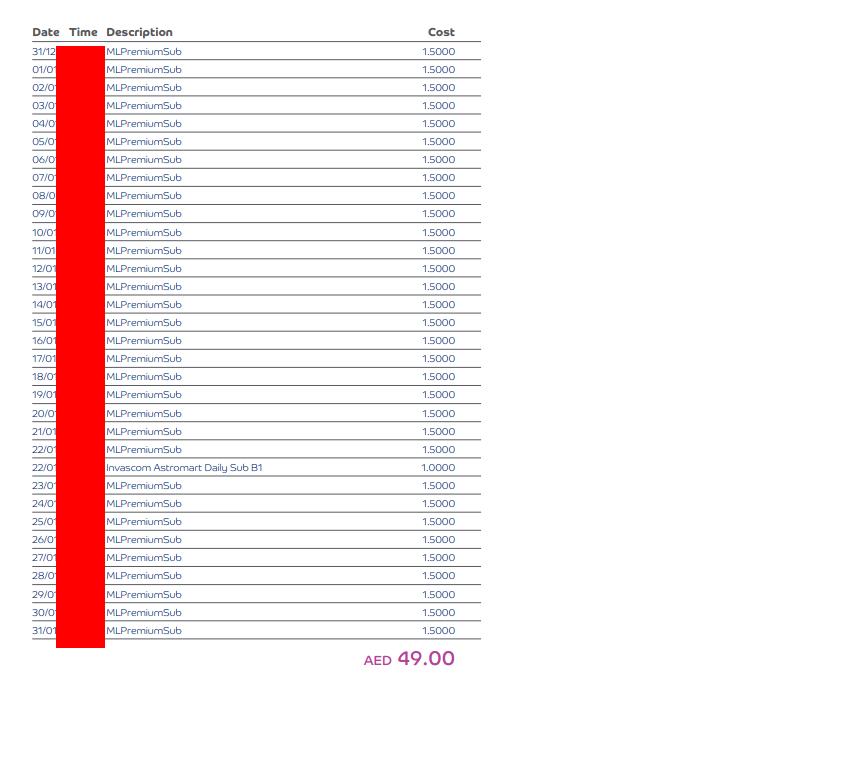

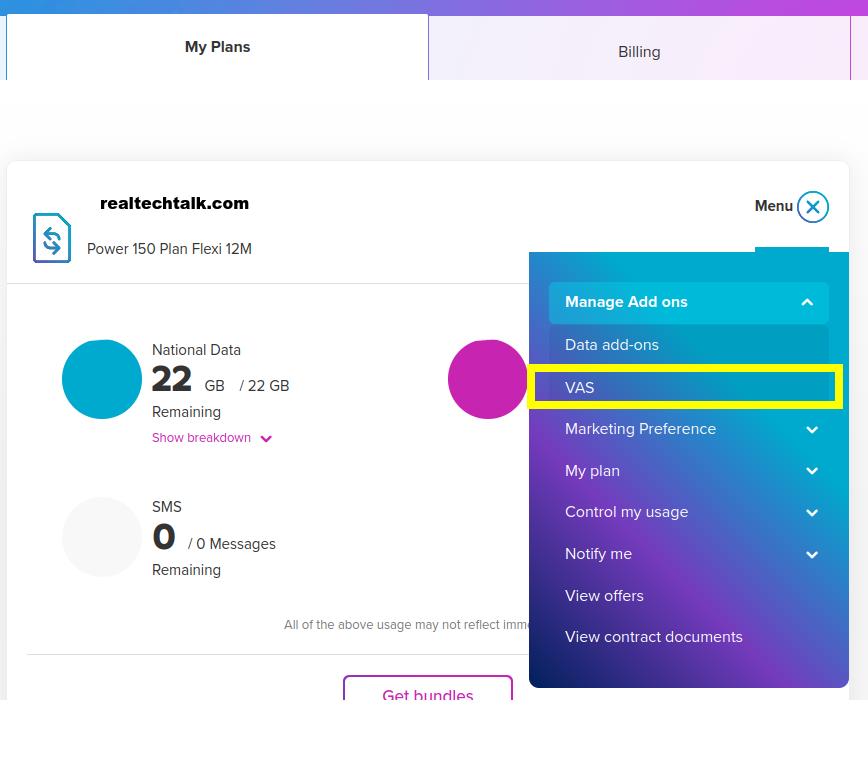

Solution 2 - Use the NAH and use an SFP+ to RJ45 module

As much as I hate the NAH, this is the most reliable way for the reasons mentioned in #1: a change in the ISP's authentication cannot take you offline. The caveat is that I don't trust these adapters, as they can die. For example, one of mine died after 3 days. The 80M units are said to be more reliable.

Recommended 80M SFP+ to RJ45

The 80M or 100M units use the Broadcom BCM84891L chip, which has been more reliable in my experience.

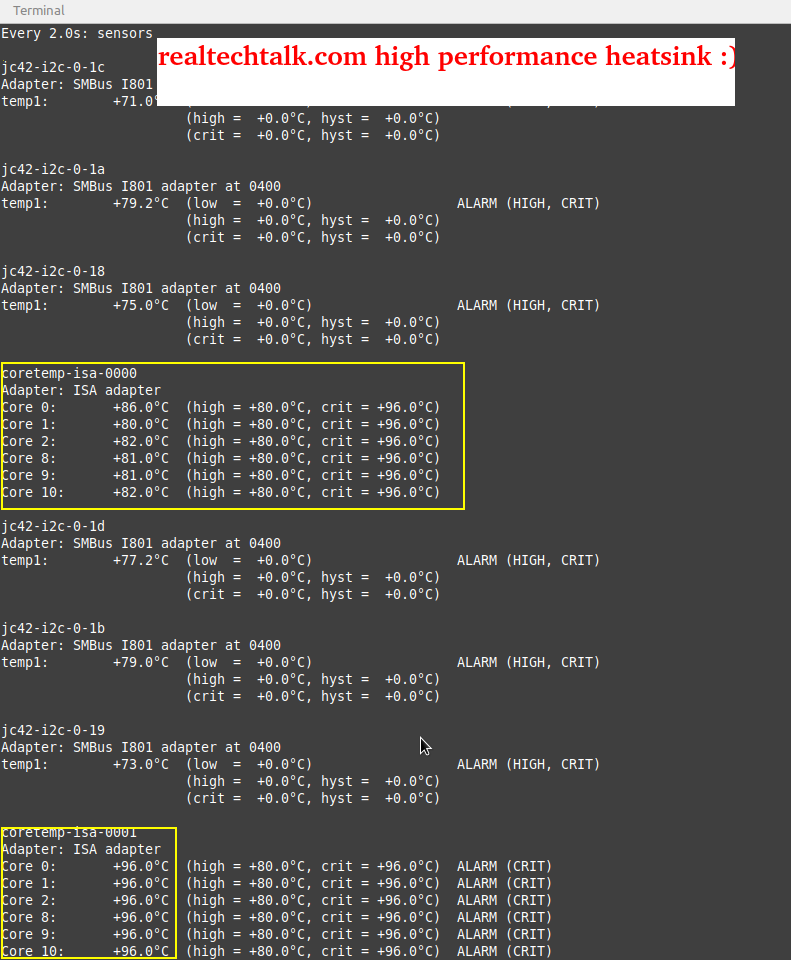

My 80M unit has been going strong for over a month now and temps vary between 42-48C which appears to be based on usage, whereas the 30M units seem to stay at almost a static temperature.

Amazon affiliate link to the 80M 10G SFP+ RJ45 adapter I use.

I no longer recommend the 30M SFP+ modules described below.

The 30M units almost always use the Marvell AQR113C chip, as mentioned below, which is known to overheat and not last (e.g. mine died after just 3 days!).

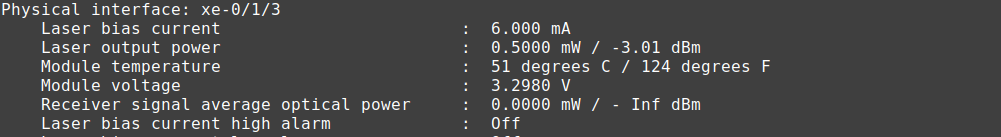

One module I recently tested has an ID of "SFP+-10G-SR" and runs at a reasonable 51C which is much better than what many users report with other modules (eg. burning hot 80-90C). I cannot be certain if the unit runs cool because of the design it claims to use which minimizes heat, or if it is because my enterprise switch has active cooling.

It seems almost all 30M SFP+ modules use the Marvell AQR113C chip which no matter the brand is often not reliable.

The 80M or 100M SFP+ modules usually use Broadcom BCM84891L which is known to be a cooler running chip. It does cost more but if they last longer then it is worth it.

After 3-4 days of heavy use the temperature stayed the same, but the module died/dropped out, which caused downtime and packet loss:

Jan 25 13:04:08 chassism[1243]: link 3 SFP receive power low warning cleared

Jan 25 13:07:31 mib2d[1262]: SNMP_TRAP_LINK_DOWN: ifIndex 561, ifAdminStatus up(1), ifOperStatus down(2), ifName xe-0/1/3

Jan 25 13:07:33 chassism[1243]: link 3 SFP receive power low alarm set

Jan 25 13:07:33 chassism[1243]: link 3 SFP receive power low warning set

Jan 25 13:07:43 chassism[1243]: link 3 SFP receive power low alarm cleared

Jan 25 13:07:43 chassism[1243]: link 3 SFP receive power low warning cleared
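If you want to keep an eye on how often a module drops out, the log lines above are easy to count. Here is a minimal sketch in Python, assuming the log format shown above; the function name and the sample list are just for illustration.

```python
import re
from collections import Counter

# Matches the SNMP link-down lines in the log format shown above.
LINK_DOWN = re.compile(r"SNMP_TRAP_LINK_DOWN:.*ifName (\S+)")

def count_link_downs(lines):
    """Return a Counter mapping interface name -> number of link-down traps."""
    counts = Counter()
    for line in lines:
        match = LINK_DOWN.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts

# Three lines copied from the log above.
sample = [
    "Jan 25 13:04:08 chassism[1243]: link 3 SFP receive power low warning cleared",
    "Jan 25 13:07:31 mib2d[1262]: SNMP_TRAP_LINK_DOWN: ifIndex 561, ifAdminStatus up(1), ifOperStatus down(2), ifName xe-0/1/3",
    "Jan 25 13:07:33 chassism[1243]: link 3 SFP receive power low alarm set",
]

for ifname, drops in count_link_downs(sample).items():
    print(f"{ifname}: {drops} link-down event(s)")
```

Feeding it a whole day of logs instead of the sample gives a quick per-port count of dropouts, which makes it easier to compare one adapter against another.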

Here is the affiliate link to the SFP+ to RJ45 module I bought that died after 3 days.

Even when pushing 3 Gbit/s in both directions the module stayed at the same temperature, or maybe went to 52C.

Bad Power Supply Issue Story Diagnosing Troubleshooting

These are fairly classic symptoms but they may not present obviously enough early on.

I had a power supply issue with my old Corsair CX430 (430 Watt).

The build had two old 95W Xeon CPUs, 2 USB devices and a PCI-E powered Nvidia Quadro or at one point a GT710. This shouldn't have overwhelmed the power supply, but many users consider this model weak.
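To put rough numbers on why this build should have been fine on paper, here is a back-of-the-envelope sketch. Only the CPU TDP and the 430W rating come from this post; the slot limit, USB figure and the allowance for everything else are assumptions for illustration, not measurements.

```python
# Rough peak power budget for the build described above.
# Everything except the CPU TDP and PSU rating is an assumption.
CPU_TDP_W = 95          # from the post: two 95W Xeons
CPU_COUNT = 2
GPU_SLOT_LIMIT_W = 75   # most a PCI-E x16 slot supplies to a card with no extra power plug
USB_PORT_W = 4.5        # assumed USB 3.0 limit per port (5 V x 0.9 A)
USB_DEVICES = 2
OTHER_W = 60            # assumed allowance for motherboard, RAM, drives and fans
PSU_RATED_W = 430       # Corsair CX430

def estimated_load_w():
    """Sum the worst-case draw of each component, in watts."""
    return (CPU_TDP_W * CPU_COUNT
            + GPU_SLOT_LIMIT_W
            + USB_PORT_W * USB_DEVICES
            + OTHER_W)

load = estimated_load_w()
print(f"{load:.0f} W of {PSU_RATED_W} W ({load / PSU_RATED_W:.0%} of rating)")
```

Even with generous assumptions the estimate stays well under the label rating, which is why an aging or weak power supply is easy to overlook as the cause.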

Symptom 1. Graphics Issues

This would only present itself when coming back from the screensaver: when hitting the keyboard or mouse to stop the screensaver and get the login screen, it would just freeze for a number of seconds. I initially chalked it up to bad cooling or an old Nvidia driver.

Symptom 2. Mouse Issues

Sometimes the mouse would just freeze or not track properly. In hindsight it was clearly a power supply issue, but the mouse itself also tested bad, so this was not completely obvious.

Symptom 3. USB Issues

I didn't have this particular issue, but I have often seen it with failing power supplies: a USB SSD does not work right or drops out. This is tricky to diagnose, as the cause is often the USB SSD enclosure or cable itself, or sometimes the internal cabling or resistance between the motherboard and the front of the PC.

Symptom 4. Resetting randomly.

This one is fairly obvious but may creep up slowly. If you aren't at the PC all the time, you may assume it is due to an update, a bad motherboard or a watchdog service acting up.

Conclusion

If you ever have issues like these and it is practical, swap in a known good power supply and see whether the symptoms go away.

In my example, I swapped in a known good 750W EVGA and all has been fine since (no resets, no screensaver freezing, no USB issues etc...)

Getting started with AI (Artificial Intelligence) in Linux / Ubuntu by deploying LLMs (Large Language Models) using Ollama

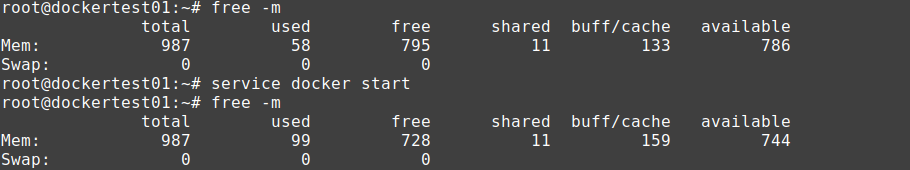

In this quick tutorial we will deploy various AI models and compare the differences. We will be using smaller models so that most users should be able to follow this guide as long as they have at least 16G of memory and no other applications using much memory.

In this tutorial we recommend that you use Docker to deploy ollama. If you are not familiar with Docker, you can check our Docker Tutorial guide here.

Step 1.) Deploy

I recommend having an Ubuntu or Debian host for this, but Ollama is supported on Windows and Mac too.

Visit the Ollama website to download it.

Step 2.) Install Ollama

Install curl if you don't have it already: apt update && apt install curl

curl -fsSL https://ollama.com/install.sh | sh

>>> Installing ollama to /usr/local

>>> Downloading Linux amd64 bundle

######################################################################## 100.0%

WARNING: Unable to detect NVIDIA/AMD GPU. Install lspci or lshw to automatically detect and install GPU dependencies.

>>> The Ollama API is now available at 127.0.0.1:11434.

>>> Install complete. Run "ollama" from the command line.

Step 3.) Start ollama server

ollama serve&

Make sure you use the & so it puts the service in the background, yet it will still output to the console. This is very useful for debugging and troubleshooting performance issues.

2025/04/22 21:00:21 routes.go:1231: INFO server config env="map[CUDA_VISIBLE_DEVICES: GPU_DEVICE_ORDINAL: HIP_VISIBLE_DEVICES: HSA_OVERRIDE_GFX_VERSION: HTTPS_PROXY: HTTP_PROXY: NO_PROXY: OLLAMA_CONTEXT_LENGTH:2048 OLLAMA_DEBUG:false OLLAMA_FLASH_ATTENTION:false OLLAMA_GPU_OVERHEAD:0 OLLAMA_HOST:http://127.0.0.1:11434 OLLAMA_INTEL_GPU:false OLLAMA_KEEP_ALIVE:5m0s OLLAMA_KV_CACHE_TYPE: OLLAMA_LLM_LIBRARY: OLLAMA_LOAD_TIMEOUT:5m0s OLLAMA_MAX_LOADED_MODELS:0 OLLAMA_MAX_QUEUE:512 OLLAMA_MODELS:/root/.ollama/models OLLAMA_MULTIUSER_CACHE:false OLLAMA_NEW_ENGINE:false OLLAMA_NOHISTORY:false OLLAMA_NOPRUNE:false OLLAMA_NUM_PARALLEL:0 OLLAMA_ORIGINS:[http://localhost https://localhost http://localhost:* https://localhost:* http://127.0.0.1 https://127.0.0.1 http://127.0.0.1:* https://127.0.0.1:* http://0.0.0.0 https://0.0.0.0 http://0.0.0.0:* https://0.0.0.0:* app://* file://* tauri://* vscode-webview://* vscode-file://*] OLLAMA_SCHED_SPREAD:false ROCR_VISIBLE_DEVICES: http_proxy: https_proxy: no_proxy:]"

time=2025-04-22T21:00:21.697Z level=INFO source=images.go:458 msg="total blobs: 0"

time=2025-04-22T21:00:21.697Z level=INFO source=images.go:465 msg="total unused blobs removed: 0"

time=2025-04-22T21:00:21.697Z level=INFO source=routes.go:1298 msg="Listening on 127.0.0.1:11434 (version 0.6.5)"

time=2025-04-22T21:00:21.697Z level=INFO source=gpu.go:217 msg="looking for compatible GPUs"

time=2025-04-22T21:00:21.727Z level=INFO source=gpu.go:377 msg="no compatible GPUs were discovered"

time=2025-04-22T21:00:21.727Z level=INFO source=types.go:130 msg="inference compute" id=0 library=cpu variant="" compute="" driver=0.0 name="" total="267.6 GiB" available="255.5 GiB"
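Once the log shows "Listening on 127.0.0.1:11434", you can confirm the server answers before moving on. This is a minimal sketch using only the Python standard library; the function name is my own, and it assumes the default address from the install output.

```python
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # default address from the install output

def ollama_is_up(base_url=OLLAMA_URL, timeout=2.0):
    """Return True if an HTTP 200 comes back from base_url, else False."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, connection refused and socket timeouts all derive from OSError.
        return False

if __name__ == "__main__":
    print("ollama reachable:", ollama_is_up())
```

If this prints False right after starting the server, give it a second or two and check the console output of `ollama serve` for errors.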

Step 4.) Run your first llm!

Find the LLM you want to test on the ollama website.

Keep in mind that the larger the model, the more memory and more disk space and resources it consumes when running. For this reason, our example will use a smaller model.

ollama run qwen2.5:0.5b

Note that this smaller 0.5b model is only about a 397MB download.

You should see output similar to the below, ending with a chat prompt.

ollama run qwen2.5:0.5b

[GIN] 2025/04/22 - 21:04:44 | 200 | 79.024µs | 127.0.0.1 | HEAD "/"

[GIN] 2025/04/22 - 21:04:44 | 404 | 455.218µs | 127.0.0.1 | POST "/api/show"

time=2025-04-22T21:04:45.299Z level=INFO source=download.go:177 msg="downloading c5396e06af29 in 4 100 MB part(s)"

pulling manifest

pulling c5396e06af29... 100% ▕██████████████████████████████████████████████████████████████████████████████▏ 397 MB

pulling 66b9ea09bd5b... 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 68 B

pulling eb4402837c78... 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 1.5 KB

pulling 832dd9e00a68... 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 11 KB

pulling 005f95c74751... 100% ▕██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████▏ 490 B

verifying sha256 digest

writing manifest

success

[GIN] 2025/04/22 - 21:04:57 | 200 | 66.099807ms | 127.0.0.1 | POST "/api/show"

⠙ time=2025-04-22T21:04:57.759Z level=INFO source=server.go:105 msg="system memory" total="267.6 GiB" free="255.5 GiB" free_swap="976.0 MiB"

time=2025-04-22T21:04:57.759Z level=WARN source=ggml.go:152 msg="key not found" key=qwen2.vision.block_count default=0

time=2025-04-22T21:04:57.759Z level=WARN source=ggml.go:152 msg="key not found" key=qwen2.attention.key_length default=64

time=2025-04-22T21:04:57.759Z level=WARN source=ggml.go:152 msg="key not found" key=qwen2.attention.value_length default=64

time=2025-04-22T21:04:57.759Z level=INFO source=server.go:138 msg=offload library=cpu layers.requested=-1 layers.model=25 layers.offload=0 layers.split="" memory.available="[255.5 GiB]" memory.gpu_overhead="0 B" memory.required.full="782.6 MiB" memory.required.partial="0 B" memory.required.kv="96.0 MiB" memory.required.allocations="[782.6 MiB]" memory.weights.total="373.7 MiB" memory.weights.repeating="235.8 MiB" memory.weights.nonrepeating="137.9 MiB" memory.graph.full="298.5 MiB" memory.graph.partial="405.0 MiB"

llama_model_loader: loaded meta data with 34 key-value pairs and 290 tensors from /root/.ollama/models/blobs/sha256-c5396e06af294bd101b30dce59131a76d2b773e76950acc870eda801d3ab0515 (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = qwen2

llama_model_loader: - kv 1: general.type str = model

llama_model_loader: - kv 2: general.name str = Qwen2.5 0.5B Instruct

llama_model_loader: - kv 3: general.finetune str = Instruct

llama_model_loader: - kv 4: general.basename str = Qwen2.5

llama_model_loader: - kv 5: general.size_label str = 0.5B

llama_model_loader: - kv 6: general.license str = apache-2.0

llama_model_loader: - kv 7: general.license.link str = https://huggingface.co/Qwen/Qwen2.5-0...

llama_model_loader: - kv 8: general.base_model.count u32 = 1

llama_model_loader: - kv 9: general.base_model.0.name str = Qwen2.5 0.5B

llama_model_loader: - kv 10: general.base_model.0.organization str = Qwen

llama_model_loader: - kv 11: general.base_model.0.repo_url str = https://huggingface.co/Qwen/Qwen2.5-0.5B

llama_model_loader: - kv 12: general.tags arr[str,2] = ["chat", "text-generation"]

llama_model_loader: - kv 13: general.languages arr[str,1] = ["en"]

llama_model_loader: - kv 14: qwen2.block_count u32 = 24

llama_model_loader: - kv 15: qwen2.context_length u32 = 32768

llama_model_loader: - kv 16: qwen2.embedding_length u32 = 896

llama_model_loader: - kv 17: qwen2.feed_forward_length u32 = 4864

llama_model_loader: - kv 18: qwen2.attention.head_count u32 = 14

llama_model_loader: - kv 19: qwen2.attention.head_count_kv u32 = 2

llama_model_loader: - kv 20: qwen2.rope.freq_base f32 = 1000000.000000

llama_model_loader: - kv 21: qwen2.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 22: general.file_type u32 = 15

llama_model_loader: - kv 23: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 24: tokenizer.ggml.pre str = qwen2

⠹ llama_model_loader: - kv 25: tokenizer.ggml.tokens arr[str,151936] = ["!", """, "#", "$", "%", "&", "'", ...

llama_model_loader: - kv 26: tokenizer.ggml.token_type arr[i32,151936] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 27: tokenizer.ggml.merges arr[str,151387] = ["Ġ Ġ", "ĠĠ ĠĠ", "i n", "Ġ t",...

llama_model_loader: - kv 28: tokenizer.ggml.eos_token_id u32 = 151645

llama_model_loader: - kv 29: tokenizer.ggml.padding_token_id u32 = 151643

llama_model_loader: - kv 30: tokenizer.ggml.bos_token_id u32 = 151643

llama_model_loader: - kv 31: tokenizer.ggml.add_bos_token bool = false

llama_model_loader: - kv 32: tokenizer.chat_template str = {%- if tools %}n {{- '<|im_start|>...

llama_model_loader: - kv 33: general.quantization_version u32 = 2

llama_model_loader: - type f32: 121 tensors

llama_model_loader: - type q5_0: 132 tensors

llama_model_loader: - type q8_0: 13 tensors

llama_model_loader: - type q4_K: 12 tensors

llama_model_loader: - type q6_K: 12 tensors

print_info: file format = GGUF V3 (latest)

print_info: file type = Q4_K - Medium

print_info: file size = 373.71 MiB (6.35 BPW)

⠼ load: special tokens cache size = 22

⠴ load: token to piece cache size = 0.9310 MB

print_info: arch = qwen2

print_info: vocab_only = 1

print_info: model type = ?B

print_info: model params = 494.03 M

print_info: general.name = Qwen2.5 0.5B Instruct

print_info: vocab type = BPE

print_info: n_vocab = 151936

print_info: n_merges = 151387

print_info: BOS token = 151643 '<|endoftext|>'

print_info: EOS token = 151645 '<|im_end|>'

print_info: EOT token = 151645 '<|im_end|>'

print_info: PAD token = 151643 '<|endoftext|>'

print_info: LF token = 198 'Ċ'

print_info: FIM PRE token = 151659 '<|fim_prefix|>'

print_info: FIM SUF token = 151661 '<|fim_suffix|>'

print_info: FIM MID token = 151660 '<|fim_middle|>'

print_info: FIM PAD token = 151662 '<|fim_pad|>'

print_info: FIM REP token = 151663 '<|repo_name|>'

print_info: FIM SEP token = 151664 '<|file_sep|>'

print_info: EOG token = 151643 '<|endoftext|>'

print_info: EOG token = 151645 '<|im_end|>'

print_info: EOG token = 151662 '<|fim_pad|>'

print_info: EOG token = 151663 '<|repo_name|>'

print_info: EOG token = 151664 '<|file_sep|>'

print_info: max token length = 256

llama_model_load: vocab only - skipping tensors

time=2025-04-22T21:04:58.219Z level=INFO source=server.go:405 msg="starting llama server" cmd="/usr/local/bin/ollama runner --model /root/.ollama/models/blobs/sha256-c5396e06af294bd101b30dce59131a76d2b773e76950acc870eda801d3ab0515 --ctx-size 8192 --batch-size 512 --threads 12 --no-mmap --parallel 4 --port 38569"

time=2025-04-22T21:04:58.219Z level=INFO source=sched.go:451 msg="loaded runners" count=1

time=2025-04-22T21:04:58.219Z level=INFO source=server.go:580 msg="waiting for llama runner to start responding"

time=2025-04-22T21:04:58.220Z level=INFO source=server.go:614 msg="waiting for server to become available" status="llm server error"

time=2025-04-22T21:04:58.241Z level=INFO source=runner.go:853 msg="starting go runner"

time=2025-04-22T21:04:58.243Z level=INFO source=ggml.go:109 msg=system CPU.0.LLAMAFILE=1 compiler=cgo(gcc)

time=2025-04-22T21:04:58.250Z level=INFO source=runner.go:913 msg="Server listening on 127.0.0.1:38569"

⠦ llama_model_loader: loaded meta data with 34 key-value pairs and 290 tensors from /root/.ollama/models/blobs/sha256-c5396e06af294bd101b30dce59131a76d2b773e76950acc870eda801d3ab0515 (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = qwen2

llama_model_loader: - kv 1: general.type str = model

llama_model_loader: - kv 2: general.name str = Qwen2.5 0.5B Instruct

llama_model_loader: - kv 3: general.finetune str = Instruct

llama_model_loader: - kv 4: general.basename str = Qwen2.5

llama_model_loader: - kv 5: general.size_label str = 0.5B

llama_model_loader: - kv 6: general.license str = apache-2.0

llama_model_loader: - kv 7: general.license.link str = https://huggingface.co/Qwen/Qwen2.5-0...

llama_model_loader: - kv 8: general.base_model.count u32 = 1

llama_model_loader: - kv 9: general.base_model.0.name str = Qwen2.5 0.5B

llama_model_loader: - kv 10: general.base_model.0.organization str = Qwen

llama_model_loader: - kv 11: general.base_model.0.repo_url str = https://huggingface.co/Qwen/Qwen2.5-0.5B

llama_model_loader: - kv 12: general.tags arr[str,2] = ["chat", "text-generation"]

llama_model_loader: - kv 13: general.languages arr[str,1] = ["en"]

llama_model_loader: - kv 14: qwen2.block_count u32 = 24

llama_model_loader: - kv 15: qwen2.context_length u32 = 32768

llama_model_loader: - kv 16: qwen2.embedding_length u32 = 896

llama_model_loader: - kv 17: qwen2.feed_forward_length u32 = 4864

llama_model_loader: - kv 18: qwen2.attention.head_count u32 = 14

llama_model_loader: - kv 19: qwen2.attention.head_count_kv u32 = 2

llama_model_loader: - kv 20: qwen2.rope.freq_base f32 = 1000000.000000

llama_model_loader: - kv 21: qwen2.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 22: general.file_type u32 = 15

llama_model_loader: - kv 23: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 24: tokenizer.ggml.pre str = qwen2

llama_model_loader: - kv 25: tokenizer.ggml.tokens arr[str,151936] = ["!", """, "#", "$", "%", "&", "'", ...

⠧ llama_model_loader: - kv 26: tokenizer.ggml.token_type arr[i32,151936] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 27: tokenizer.ggml.merges arr[str,151387] = ["Ġ Ġ", "ĠĠ ĠĠ", "i n", "Ġ t",...

llama_model_loader: - kv 28: tokenizer.ggml.eos_token_id u32 = 151645

llama_model_loader: - kv 29: tokenizer.ggml.padding_token_id u32 = 151643

llama_model_loader: - kv 30: tokenizer.ggml.bos_token_id u32 = 151643

llama_model_loader: - kv 31: tokenizer.ggml.add_bos_token bool = false

llama_model_loader: - kv 32: tokenizer.chat_template str = {%- if tools %}n {{- '<|im_start|>...

llama_model_loader: - kv 33: general.quantization_version u32 = 2

llama_model_loader: - type f32: 121 tensors

llama_model_loader: - type q5_0: 132 tensors

llama_model_loader: - type q8_0: 13 tensors

llama_model_loader: - type q4_K: 12 tensors

llama_model_loader: - type q6_K: 12 tensors

print_info: file format = GGUF V3 (latest)

print_info: file type = Q4_K - Medium

print_info: file size = 373.71 MiB (6.35 BPW)

⠇ time=2025-04-22T21:04:58.472Z level=INFO source=server.go:614 msg="waiting for server to become available" status="llm server loading model"

⠏ load: special tokens cache size = 22

⠋ load: token to piece cache size = 0.9310 MB

print_info: arch = qwen2

print_info: vocab_only = 0

print_info: n_ctx_train = 32768

print_info: n_embd = 896

print_info: n_layer = 24

print_info: n_head = 14

print_info: n_head_kv = 2

print_info: n_rot = 64

print_info: n_swa = 0

print_info: n_embd_head_k = 64

print_info: n_embd_head_v = 64

print_info: n_gqa = 7

print_info: n_embd_k_gqa = 128

print_info: n_embd_v_gqa = 128

print_info: f_norm_eps = 0.0e+00

print_info: f_norm_rms_eps = 1.0e-06

print_info: f_clamp_kqv = 0.0e+00

print_info: f_max_alibi_bias = 0.0e+00

print_info: f_logit_scale = 0.0e+00

print_info: n_ff = 4864

print_info: n_expert = 0

print_info: n_expert_used = 0

print_info: causal attn = 1

print_info: pooling type = 0

print_info: rope type = 2

print_info: rope scaling = linear

print_info: freq_base_train = 1000000.0

print_info: freq_scale_train = 1

print_info: n_ctx_orig_yarn = 32768

print_info: rope_finetuned = unknown

print_info: ssm_d_conv = 0

print_info: ssm_d_inner = 0

print_info: ssm_d_state = 0

print_info: ssm_dt_rank = 0

print_info: ssm_dt_b_c_rms = 0

print_info: model type = 1B

print_info: model params = 494.03 M

print_info: general.name = Qwen2.5 0.5B Instruct

print_info: vocab type = BPE

print_info: n_vocab = 151936

print_info: n_merges = 151387

print_info: BOS token = 151643 '<|endoftext|>'

print_info: EOS token = 151645 '<|im_end|>'

print_info: EOT token = 151645 '<|im_end|>'

print_info: PAD token = 151643 '<|endoftext|>'

print_info: LF token = 198 'Ċ'

print_info: FIM PRE token = 151659 '<|fim_prefix|>'

print_info: FIM SUF token = 151661 '<|fim_suffix|>'

print_info: FIM MID token = 151660 '<|fim_middle|>'

print_info: FIM PAD token = 151662 '<|fim_pad|>'

print_info: FIM REP token = 151663 '<|repo_name|>'

print_info: FIM SEP token = 151664 '<|file_sep|>'

print_info: EOG token = 151643 '<|endoftext|>'

print_info: EOG token = 151645 '<|im_end|>'

print_info: EOG token = 151662 '<|fim_pad|>'

print_info: EOG token = 151663 '<|repo_name|>'

print_info: EOG token = 151664 '<|file_sep|>'

print_info: max token length = 256

load_tensors: loading model tensors, this can take a while... (mmap = false)

load_tensors: CPU model buffer size = 373.71 MiB

⠹ llama_init_from_model: n_seq_max = 4

llama_init_from_model: n_ctx = 8192

llama_init_from_model: n_ctx_per_seq = 2048

llama_init_from_model: n_batch = 2048

llama_init_from_model: n_ubatch = 512

llama_init_from_model: flash_attn = 0

llama_init_from_model: freq_base = 1000000.0

llama_init_from_model: freq_scale = 1

llama_init_from_model: n_ctx_per_seq (2048) < n_ctx_train (32768) -- the full capacity of the model will not be utilized

llama_kv_cache_init: kv_size = 8192, offload = 1, type_k = 'f16', type_v = 'f16', n_layer = 24, can_shift = 1

llama_kv_cache_init: CPU KV buffer size = 96.00 MiB

llama_init_from_model: KV self size = 96.00 MiB, K (f16): 48.00 MiB, V (f16): 48.00 MiB

llama_init_from_model: CPU output buffer size = 2.33 MiB

⠸ llama_init_from_model: CPU compute buffer size = 300.25 MiB

llama_init_from_model: graph nodes = 846

llama_init_from_model: graph splits = 1

time=2025-04-22T21:04:58.973Z level=INFO source=server.go:619 msg="llama runner started in 0.75 seconds"

[GIN] 2025/04/22 - 21:04:58 | 200 | 1.319178734s | 127.0.0.1 | POST "/api/generate"

>>> Send a message (/? for help)

Checking the ollama process, we can see it uses about 553.7MB of RAM:

1589132 root 20 0 2874.7m 553.7m 21.5m S 1140 0.2 3:03.60 ollama
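Besides the interactive prompt, the running server can also be queried over HTTP; the log above shows the CLI itself posting to /api/generate. Here is a minimal sketch using only the Python standard library. The helper names are my own, and it assumes the default address and the qwen2.5:0.5b model pulled above.

```python
import json
import urllib.request

def build_generate_request(prompt, model="qwen2.5:0.5b",
                           base_url="http://127.0.0.1:11434"):
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        base_url + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(prompt, timeout=120):
    """Send the prompt to a running ollama server and return the reply text."""
    request = build_generate_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `print(ask("Why is the sky blue?"))` should print the model's answer. Setting "stream" to False keeps the example simple by returning one JSON object instead of a stream of partial responses.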

microk8s kubernetes how to install OpenEBS

This follows the official guide from OpenEBS.

Step 1.) Make sure you have the right version.

As of this writing (2025-04), OpenEBS needs Kubernetes/MicroK8s version 1.23 or higher (note that this minimum will keep increasing over time).

Usually a good sign of having the wrong/old version is that you will encounter namespace and other errors:

error: unknown flag: --namespace
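If you script your installs, the version check can be automated. This is a minimal sketch, assuming you pass in a version string such as the one printed by `microk8s kubectl version`; the function names are my own and the 1.23 minimum is simply the one stated above.

```python
import re

MIN_VERSION = (1, 23)  # minimum stated in this post as of 2025-04

def parse_k8s_version(text):
    """Return (major, minor) from text containing something like 'v1.23.4'."""
    match = re.search(r"v?(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version found in {text!r}")
    return int(match.group(1)), int(match.group(2))

def is_supported(text, minimum=MIN_VERSION):
    """Compare as integer tuples so that 1.9 correctly sorts below 1.23."""
    return parse_k8s_version(text) >= minimum

for candidate in ("v1.22.9", "v1.23.0", "v1.28.3"):
    print(candidate, "OK" if is_supported(candidate) else "too old")
```

Comparing tuples of integers rather than strings matters here, because a plain string comparison would rank "1.9" above "1.23".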

Step 2.) Enable Helm

microk8s enable helm

Step 3.) Get OpenEBS repo & update

microk8s helm repo add openebs https://openebs.github.io/openebs

microk8s helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "openebs" chart repository

Update Complete. ⎈Happy Helming!⎈

Step 4.) Install OpenEBS

microk8s helm install openebs --namespace openebs openebs/openebs --create-namespace

NAME: openebs

LAST DEPLOYED: Thu Apr 17 17:38:46 2025

NAMESPACE: openebs

STATUS: deployed

REVISION: 1

NOTES:

Successfully installed OpenEBS.

Check the status by running: kubectl get pods -n openebs

The default values will install both Local PV and Replicated PV. However,

the Replicated PV will require additional configuration to be fuctional.

The Local PV offers non-replicated local storage using 3 different storage

backends i.e Hostpath, LVM and ZFS, while the Replicated PV provides one replicated highly-available

storage backend i.e Mayastor.

For more information,

- view the online documentation at https://openebs.io/docs

- connect with an active community on our Kubernetes slack channel.

- Sign up to Kubernetes slack: https://slack.k8s.io

- #openebs channel: https://kubernetes.slack.com/messages/openebs
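The install notes suggest checking the pods in the openebs namespace. Here is a minimal sketch that does the same check from Python by reading the JSON output of kubectl. The function names are my own, and it assumes `microk8s kubectl` is available on the host.

```python
import json
import subprocess

def pods_not_running(pod_list):
    """Return names of pods whose phase is neither Running nor Succeeded."""
    return [
        item["metadata"]["name"]
        for item in pod_list.get("items", [])
        if item.get("status", {}).get("phase") not in ("Running", "Succeeded")
    ]

def openebs_pods():
    """Fetch the pod list of the openebs namespace as a parsed JSON dict."""
    result = subprocess.run(
        ["microk8s", "kubectl", "get", "pods", "-n", "openebs", "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)
```

Calling `pods_not_running(openebs_pods())` a minute or two after the install should return an empty list once everything has started; any names it does return are the pods worth inspecting.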

Flash LSI MegaRAID 2208 to IT mode in Linux Mint/Debian/Ubuntu

03:00.0 RAID bus controller: Broadcom / LSI MegaRAID SAS 2208 [Thunderbolt] (rev 05)

This is risky, but if you have a spare RAID/SAS card to replace it with, you can try this to get IT mode.
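Before flashing anything, it helps to be sure which controller you are dealing with. Here is a minimal sketch that picks RAID controllers out of lspci output like the line at the top of this post; the regular expression and function name are my own and only assume the plain `lspci` line format shown there.

```python
import re

# slot, device class, device name, optional "(rev NN)" suffix
LSPCI_LINE = re.compile(
    r"^(?P<slot>\S+) (?P<cls>[^:]+): (?P<device>.+?)(?: \(rev (?P<rev>\w+)\))?$"
)

def find_raid_controllers(lspci_output):
    """Return (slot, device) tuples for every line whose class mentions RAID."""
    found = []
    for line in lspci_output.splitlines():
        match = LSPCI_LINE.match(line.strip())
        if match and "RAID" in match.group("cls"):
            found.append((match.group("slot"), match.group("device")))
    return found

sample = "03:00.0 RAID bus controller: Broadcom / LSI MegaRAID SAS 2208 [Thunderbolt] (rev 05)"
for slot, device in find_raid_controllers(sample):
    print(slot, "->", device)
```

In practice you would pass in the text captured from running `lspci` rather than the sample string.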

https://docs.broadcom.com/docs-and-downloads/host-bus-adapters/host-bus-adapters-common-files/sas_sata_6g_p14/9207_8e_Package_P14_IT_Firmware_BIOS_for_MSDOS_Windows.zip

https://docs.broadcom.com/docs/12350477

Get the sas2flash for Linux:

https://docs.broadcom.com/docs-and-downloads/host-bus-adapters/host-bus-adapters-common-files/sas_sata_6g_p20/Installer_P20_for_Linux.zip

unzip Installer_P20_for_Linux.zip

Archive: Installer_P20_for_Linux.zip

inflating: Installer_P20_for_Linux/README_Installer_P20_Linux.txt

creating: Installer_P20_for_Linux/sas2flash_linux_i686_x86-64_rel/

inflating: Installer_P20_for_Linux/sas2flash_linux_i686_x86-64_rel/sas2flash

creating: Installer_P20_for_Linux/sas2flash_linux_ppc64_rel/

inflating: Installer_P20_for_Linux/sas2flash_linux_ppc64_rel/sas2flash

inflating: Installer_P20_for_Linux/SAS2FLASH_Phase20.0-20.00.00.00.pdf

inflating: Installer_P20_for_Linux/SAS2Flash_ReferenceGuide.pdf

chmod +x Installer_P20_for_Linux/sas2flash_linux_i686_x86-64_rel/sas2flash

Save Your Card Details:

Installer_P20_for_Linux/sas2flash_linux_i686_x86-64_rel/sas2flash -list -c 0

LSI Corporation SAS2 Flash Utility

Version 20.00.00.00 (2014.09.18)

Copyright (c) 2008-2014 LSI Corporation. All rights reserved

No LSI SAS adapters found! Limited Command Set Available!

ERROR: Command Not allowed without an adapter!

ERROR: Couldn't Create Command -list

Exiting Program.

P14 sas2flash won't run on Ubuntu 20 or similar/newer:

sas2flash: sas2flash: cannot execute binary file

LSI MegaRAID in Linux Ubuntu / Centos Tutorial Setup Guide megacli

Usually when I come across a system like this, I just flash the firmware to IT mode so the card acts as a plain HBA and passes the drives through as JBOD. Sometimes that is not practical, for example on remote systems or when flashing is too risky if something goes wrong.

In this case, we unfortunately have to use LSI/Broadcom proprietary CLI tools (megacli) to even make the drives visible.

Step 1 - Download MegaCLI

The official Broadcom downloads do not include a .deb package; for Linux there is only an .rpm.

At this time the latest MegaCLI 5.5 P2 can be downloaded from here.

Step 2 - Extract MegaCLI

unzip 8-07-14_MegaCLI.zip

Archive: 8-07-14_MegaCLI.zip

inflating: 8.07.14_MegaCLI.txt

inflating: DOS/MegaCLI.exe

extracting: FreeBSD/MegaCLI.zip

extracting: FreeBSD/MegaCli64.zip

inflating: Linux/MegaCli-8.07.14-1.noarch.rpm

inflating: Solaris/MegaCli.pkg

inflating: Windows/MegaCli.exe

inflating: Windows/MegaCli64.exe

Step 3 - Convert to .deb using alien

apt install libncurses5 alien

alien MegaCli-8.07.06-1.noarch.rpm

Warning: Skipping conversion of scripts in package MegaCli: postinst postrm

Warning: Use the --scripts parameter to include the scripts.

megacli_8.07.06-2_all.deb generated

Step 4 - Install .deb

dpkg -i megacli_8.07.06-2_all.deb

Selecting previously unselected package megacli.

(Reading database ... 295031 files and directories currently installed.)

Preparing to unpack megacli_8.07.06-2_all.deb ...

Unpacking megacli (8.07.06-2) ...

Setting up megacli (8.07.06-2) ...

Processing triggers for libc-bin (2.31-0ubuntu9) ...

/opt/MegaRAID/MegaCli/MegaCli64: error while loading shared libraries: libncurses.so.5: cannot open shared object file: No such file or directory

If you get the above: apt install libncurses5

Step 5 - Run megacli

root@mint:~/Linux# /opt/MegaRAID/MegaCli/MegaCli64 -h

MegaCLI SAS RAID Management Tool Ver 8.07.06 Nov 13, 2012

(c)Copyright 2011, LSI Corporation, All Rights Reserved.

NOTE: The following options may be given at the end of any command below:

[-Silent] [-AppLogFile filename] [-NoLog] [-page[N]]

[-] is optional.

N - Number of lines per page.

MegaCli -v

MegaCli -help|-h|?

MegaCli -adpCount

MegaCli -AdpSetProp {CacheFlushInterval -val} | { RebuildRate -val}

| {PatrolReadRate -val} | {BgiRate -val} | {CCRate -val} | {ForceSGPIO -val}

| {ReconRate -val} | {SpinupDriveCount -val} | {SpinupDelay -val}

| {CoercionMode -val} | {ClusterEnable -val} | {PredFailPollInterval -val}

| {BatWarnDsbl -val} | {EccBucketSize -val} | {EccBucketLeakRate -val}

| {AbortCCOnError -val} | AlarmEnbl | AlarmDsbl | AlarmSilence

| {SMARTCpyBkEnbl -val} | {SSDSMARTCpyBkEnbl -val} | NCQEnbl | NCQDsbl

| {MaintainPdFailHistoryEnbl -val} | {RstrHotSpareOnInsert -val}

| {DisableOCR -val} | {BootWithPinnedCache -val} | {enblPI -val} |{PreventPIImport -val}

| AutoEnhancedImportEnbl | AutoEnhancedImportDsbl

| {EnblSpinDownUnConfigDrvs -val}|{UseDiskActivityforLocate -val} -aN|-a0,1,2|-aALL

| {ExposeEnclDevicesEnbl -val} | {SpinDownTime -val}

| {SpinUpEncDrvCnt -val} | {SpinUpEncDelay -val} | {Perfmode -val} -aN|-a0,1,2|-aALL

| {PerfMode -val -MaxFlushLines -val -MaxPDLatencyMS -val} -aN|-a0,1,2|-aALL

MegaCli -AdpSetProp -AutoDetectBackPlaneDsbl -val -aN|-a0,1,2|-aALL

val - 0=Enable Auto Detect of SGPIO and i2c SEP.

1=Disable Auto Detect of SGPIO.

2=Disable Auto Detect of i2c SEP.

3=Disable Auto Detect of SGPIO and i2c SEP.

MegaCli -AdpSetProp -CopyBackDsbl -val -aN|-a0,1,2|-aALL

val - 0=Enable Copyback.

1=Disable Copyback.

MegaCli -AdpSetProp -EnableJBOD -val -aN|-a0,1,2|-aALL

val - 0=Disable JBOD mode.

1=Enable JBOD mode.

MegaCli -AdpSetProp -DsblCacheBypass -val -aN|-a0,1,2|-aALL

val - 0=Enable Cache Bypass.

1=Disable Cache Bypass.

MegaCli -AdpSetProp -LoadBalanceMode -val -aN|-a0,1,2|-aALL

val - 0=Auto Load balance mode.

1=Disable Load balance mode.

MegaCli -AdpSetProp -UseFDEOnlyEncrypt -val -aN|-a0,1,2|-aALL

val - 0=FDE and controller encryption (if HW supports) is allowed.

1=Only support FDE encryption, disallow controller encryption.

MegaCli -AdpSetProp -PrCorrectUncfgdAreas -val -aN|-a0,1,2|-aALL

val - 0= Correcting Media error during PR is disabled.

1=Correcting Media error during PR is allowed.

MegaCli -AdpSetProp -DsblSpinDownHSP -val -aN|-a0,1,2|-aALL

val - 0= Spinning down the Hot Spare is enabled.

1=Spinning down the Hot Spare is disabled.

MegaCli -AdpSetProp -DefaultLdPSPolicy -Automatic| -None | -Maximum| -MaximumWithoutCaching -aN|-a0,1,2|-aALL

MegaCli -AdpSetProp -DisableLdPS -interval n1 -time n2 -aN|-a0,1,2|-aALL

where n1 is the number of hours beginning at time n2

where n2 is the number of minutes from 12:00am

MegaCli -AdpSetProp -ENABLEEGHSP -val -aN|-a0,1,2|-aALL

val - 0= Disabled Emergency GHSP.

1= Enabled Emergency GHSP.

MegaCli -AdpSetProp -ENABLEEUG -val -aN|-a0,1,2|-aALL

val - 0= Disabled Emergency UG as Spare.

1= Enabled Emergency UG as Spare.

MegaCli -AdpSetProp -ENABLEESMARTER -val -aN|-a0,1,2|-aALL

val - 0= Disabled Emergency Spare as Smarter.

1= Enabled Emergency Spare as Smarter.

MegaCli -AdpSetProp -DPMenable -val -aN|-a0,1,2|-aALL

val - 0=Disable Drive Performance Monitoring .

1=Enable Drive Performance Monitoring.

MegaCli -AdpSetProp -SupportSSDPatrolRead -val -aN|-a0,1,2|-aALL

val - 0=Disable Patrol read for SSD drives .

1=Enable Patrol read for SSD drives.

MegaCli -AdpGetProp CacheFlushInterval | RebuildRate | PatrolReadRate | ForceSGPIO

| BgiRate | CCRate | ReconRate | SpinupDriveCount | SpinupDelay

| CoercionMode | ClusterEnable | PredFailPollInterval | BatWarnDsbl

| EccBucketSize | EccBucketLeakRate | EccBucketCount | AbortCCOnError

| AlarmDsply | SMARTCpyBkEnbl | SSDSMARTCpyBkEnbl | NCQDsply

| MaintainPdFailHistoryEnbl | RstrHotSpareOnInsert

| EnblSpinDownUnConfigDrvs | DisableOCR

| BootWithPinnedCache | enblPI |PreventPIImport | AutoEnhancedImportDsply | AutoDetectBackPlaneDsbl

| CopyBackDsbl | LoadBalanceMode | UseFDEOnlyEncrypt | WBSupport | EnableJBOD

| DsblCacheBypass | ExposeEnclDevicesEnbl | SpinDownTime | PrCorrectUncfgdAreas

| UseDiskActivityforLocate | ENABLEEGHSP | ENABLEEUG | ENABLEESMARTER | Perfmode | PerfModeValues

| -DPMenable -aN|-a0,1,2|-aALL

| DefaultLdPSPolicy | DisableLdPsInterval | DisableLdPsTime | SpinUpEncDrvCnt

| SpinUpEncDelay | PrCorrectUncfgdAreas

| DsblSpinDownHSP | SupportSSDPatrolRead -aN|-a0,1,2|-aALL

MegaCli -AdpAllInfo -aN|-a0,1,2|-aALL

MegaCli -AdpGetTime -aN|-a0,1,2|-aALL

MegaCli -AdpSetTime yyyymmdd hh:mm:ss -aN

MegaCli -AdpSetVerify -f fileName -aN|-a0,1,2|-aALL

MegaCli -AdpBIOS -Enbl |-Dsbl | -SOE | -BE | -HCOE | - HSM | EnblAutoSelectBootLd | DsblAutoSelectBootLd | -Dsply -aN|-a0,1,2|-aALL

MegaCli -AdpBootDrive {-Set {-Lx | -physdrv[E0:S0]}} | {-Unset {-Lx | -physdrv[E0:S0]}} |-Get -aN|-a0,1,2|-aALL

MegaCli -AdpAutoRbld -Enbl|-Dsbl|-Dsply -aN|-a0,1,2|-aALL

MegaCli -AdpCacheFlush -aN|-a0,1,2|-aALL

MegaCli -AdpPR -Dsbl|EnblAuto|EnblMan|Start|Suspend|Resume|Stop|Info|SSDPatrolReadEnbl |SSDPatrolReadDsbl

|{SetDelay Val}|{-SetStartTime yyyymmdd hh}|{maxConcurrentPD Val} -aN|-a0,1,2|-aALL

MegaCli -AdpCcSched -Dsbl|-Info|{-ModeConc | -ModeSeq [-ExcludeLD -LN|-L0,1,2]

[-SetStartTime yyyymmdd hh ] [-SetDelay val ] } -aN|-a0,1,2|-aALL

MegaCli -AdpCcSched -SetStartTime yyyymmdd hh -aN|-a0,1,2|-aALL

MegaCli -AdpCcSched -SetDelay val -aN|-a0,1,2|-aALL

MegaCli -FwTermLog -BBUoff|BBUoffTemp|BBUon|BBUGet|Dsply|Clear -aN|-a0,1,2|-aALL

MegaCli -AdpAlILog -aN|-a0,1,2|-aALL

MegaCli -AdpDiag [val] -aN|-a0,1,2|-aALL

val - Time in second.

MegaCli -AdpGetPciInfo -aN|-a0,1,2|-aALL

MegaCli -AdpShutDown -aN|-a0,1,2|-aALL

MegaCli -AdpDowngrade -aN|-a0,1,2|-aALL

MegaCli -PDList -aN|-a0,1,2|-aALL

MegaCli -PDGetNum -aN|-a0,1,2|-aALL

MegaCli -pdInfo -PhysDrv[E0:S0,E1:S1,...] -aN|-a0,1,2|-aALL

MegaCli -PDOnline -PhysDrv[E0:S0,E1:S1,...] -aN|-a0,1,2|-aALL

MegaCli -PDOffline -PhysDrv[E0:S0,E1:S1,...] -aN|-a0,1,2|-aALL

MegaCli -PDMakeGood -PhysDrv[E0:S0,E1:S1,...] | [-Force] -aN|-a0,1,2|-aALL

MegaCli -PDMakeJBOD -PhysDrv[E0:S0,E1:S1,...] -aN|-a0,1,2|-aALL

MegaCli -PDHSP {-Set [-Dedicated [-ArrayN|-Array0,1,2...]] [-EnclAffinity] [-nonRevertible]}

|-Rmv -PhysDrv[E0:S0,E1:S1,...] -aN|-a0,1,2|-aALL

MegaCli -PDRbld -Start|-Stop|-Suspend|-Resume|-ShowProg |-ProgDsply

-PhysDrv [E0:S0,E1:S1,...] -aN|-a0,1,2|-aALL

MegaCli -PDClear -Start|-Stop|-ShowProg |-ProgDsply

-PhysDrv [E0:S0,E1:S1,...] -aN|-a0,1,2|-aALL

MegaCli -PdLocate {[-start] | -stop} -physdrv[E0:S0,E1:S1,...] -aN|-a0,1,2|-aALL

MegaCli -PdMarkMissing -physdrv[E0:S0,E1:S1,...] -aN|-a0,1,2|-aALL

MegaCli -PdGetMissing -aN|-a0,1,2|-aALL

MegaCli -PdReplaceMissing -physdrv[E0:S0] -arrayA, -rowB -aN

MegaCli -PdPrpRmv [-UnDo] -physdrv[E0:S0] -aN|-a0,1,2|-aALL

MegaCli -EncInfo -aN|-a0,1,2|-aALL

MegaCli -EncStatus -aN|-a0,1,2|-aALL

MegaCli -PhyInfo -phyM -aN|-a0,1,2|-aALL

MegaCli -PhySetLinkSpeed -phyM -speed -aN|-a0,1,2|-aALL

MegaCli -PdFwDownload [offline][ForceActivate] {[-SataBridge] -PhysDrv[0:1] }|{-EncdevId[devId1]} -f

MegaCli -LDInfo -Lx|-L0,1,2|-Lall -aN|-a0,1,2|-aALL

MegaCli -LDSetProp {-Name LdNamestring} | -RW|RO|Blocked|RemoveBlocked | WT|WB|ForcedWB [-Immediate] |RA|NORA|ADRA | DsblPI

| Cached|Direct | -EnDskCache|DisDskCache | CachedBadBBU|NoCachedBadBBU

-Lx|-L0,1,2|-Lall -aN|-a0,1,2|-aALL

MegaCli -LDSetPowerPolicy -Default| -Automatic| -None| -Maximum| -MaximumWithoutCaching

-Lx|-L0,1,2|-Lall -aN|-a0,1,2|-aALL

MegaCli -LDGetProp -Cache | -Access | -Name | -DskCache | -PSPolicy | Consistency -Lx|-L0,1,2|-LALL

-aN|-a0,1,2|-aALL

MegaCli -LDInit {-Start [-full]}|-Abort|-ShowProg|-ProgDsply -Lx|-L0,1,2|-LALL -aN|-a0,1,2|-aALL

MegaCli -LDCC {-Start [-force]}|-Abort|-Suspend|-Resume|-ShowProg|-ProgDsply -Lx|-L0,1,2|-LALL -aN|-a0,1,2|-aALL

MegaCli -LDBI -Enbl|-Dsbl|-getSetting|-Abort|-Suspend|-Resume|-ShowProg|-ProgDsply -Lx|-L0,1,2|-LALL -aN|-a0,1,2|-aALL

MegaCli -LDRecon {-Start -rX [{-Add | -Rmv} -Physdrv[E0:S0,...]]}|-ShowProg|-ProgDsply

-Lx -aN

MegaCli -LdPdInfo -aN|-a0,1,2|-aALL

MegaCli -LDGetNum -aN|-a0,1,2|-aALL

MegaCli -LDBBMClr -Lx|-L0,1,2,...|-Lall -aN|-a0,1,2|-aALL

MegaCli -getLdExpansionInfo -Lx|-L0,1,2|-Lall -aN|-a0,1,2|-aALL

MegaCli -LdExpansion -pN -dontExpandArray -Lx|-L0,1,2|-Lall -aN|-a0,1,2|-aALL

MegaCli -GetBbtEntries -Lx|-L0,1,2|-Lall -aN|-a0,1,2|-aALL

MegaCli -Cachecade -assign|-remove -Lx|-L0,1,2|-LALL -aN|-a0,1,2|-aALL

MegaCli -CfgLdAdd -rX[E0:S0,E1:S1,...] [WT|WB] [NORA|RA|ADRA] [Direct|Cached]

[CachedBadBBU|NoCachedBadBBU] [-szXXX [-szYYY ...]]

[-strpszM] [-Hsp[E0:S0,...]] [-AfterLdX] | [Secure]

[-Default| -Automatic| -None| -Maximum| -MaximumWithoutCaching] [-Cache] [-enblPI] [-Force]-aN

MegaCli -CfgCacheCadeAdd [-rX] -Physdrv[E0:S0,...] {-Name LdNamestring} [WT|WB|ForcedWB] [-assign -LX|L0,2,5..|LALL] -aN|-a0,1,2|-aALL

MegaCli -CfgEachDskRaid0 [WT|WB] [NORA|RA|ADRA] [Direct|Cached] [-enblPI]

[CachedBadBBU|NoCachedBadBBU] [-strpszM]|[Secure] [-Default| -Automatic| -None| -Maximum| -MaximumWithoutCaching] [-Cache] -aN|-a0,1,2|-aALL

MegaCli -CfgClr [-Force] -aN|-a0,1,2|-aALL

MegaCli -CfgDsply -aN|-a0,1,2|-aALL

MegaCli -CfgCacheCadeDsply -aN|-a0,1,2|-aALL

MegaCli -CfgLdDel -LX|-L0,2,5...|-LALL [-Force] -aN|-a0,1,2|-aALL

MegaCli -CfgCacheCadeDel -LX|-L0,2,5...|-LALL -aN|-a0,1,2|-aALL

MegaCli -CfgFreeSpaceinfo -aN|-a0,1,2|-aALL

MegaCli -CfgSpanAdd -r10 -Array0[E0:S0,E1:S1] -Array1[E0:S0,E1:S1] [-ArrayX[E0:S0,E1:S1] ...]

[WT|WB] [NORA|RA|ADRA] [Direct|Cached] [CachedBadBBU|NoCachedBadBBU]

[-szXXX[-szYYY ...]][-strpszM][-AfterLdX]| [Secure]

[-Default| -Automatic| -None| -Maximum| -MaximumWithoutCaching] [-Cache] [-enblPI] [-Force] -aN

MegaCli -CfgSpanAdd -r50 -Array0[E0:S0,E1:S1,E2:S2,...] -Array1[E0:S0,E1:S1,E2:S2,...]

[-ArrayX[E0:S0,E1:S1,E2:S2,...] ...] [WT|WB] [NORA|RA|ADRA] [Direct|Cached]

[CachedBadBBU|NoCachedBadBBU][-szXXX[-szYYY ...]][-strpszM][-AfterLdX]

[Secure] [-Default| -Automatic| -None| -Maximum| -MaximumWithoutCaching] [-Cache] [-enblPI] [-Force] -aN

MegaCli -CfgSpanAdd -r60 -Array0[E0:S0,E1:S1,E2:S2,E3,S3...] -Array1[E0:S0,E1:S1,E2:S2,E3,S3...]

[-ArrayX[E0:S0,E1:S1,E2:S2,E3,S3...] ...] [WT|WB] [NORA|RA|ADRA] [Direct|Cached]

[CachedBadBBU|NoCachedBadBBU][-szXXX[-szYYY ...]][-strpszM][-AfterLdX]|

[Secure] [-Default| -Automatic| -None| -Maximum| -MaximumWithoutCaching] [-Cache] [-enblPI] [-Force]-aN

MegaCli -CfgAllFreeDrv -rX [-SATAOnly] [-SpanCount XXX] [WT|WB] [NORA|RA|ADRA]

[Direct|Cached] [CachedBadBBU|NoCachedBadBBU] [-strpszM]

[-HspCount XX [-HspType -Dedicated|-EnclAffinity|-nonRevertible]]|

[Secure] [-Default| -Automatic| -None| -Maximum| -MaximumWithoutCaching] [-Cache] [-enblPI] -aN

MegaCli -CfgSave -f filename -aN

MegaCli -CfgRestore -f filename -aN

MegaCli -CfgForeign -Scan | [-Passphrase sssssssssss] -aN|-a0,1,2|-aALL

MegaCli -CfgForeign -Dsply [x] | [-Passphrase sssssssssss] -aN|-a0,1,2|-aALL

MegaCli -CfgForeign -Preview [x] | [-Passphrase sssssssssss] -aN|-a0,1,2|-aALL

MegaCli -CfgForeign -Import [x] | [-Passphrase sssssssssss] -aN|-a0,1,2|-aALL

MegaCli -CfgForeign -Clear [x]|[-Passphrase sssssssssss] -aN|-a0,1,2|-aALL

x - index of foreign configurations. Optional. All by default.

MegaCli -AdpEventLog -GetEventLogInfo -aN|-a0,1,2|-aALL

MegaCli -AdpEventLog -GetEvents {-info -warning -critical -fatal} {-f

MegaCli -AdpEventLog -GetSinceShutdown {-info -warning -critical -fatal} {-f

MegaCli -AdpEventLog -GetSinceReboot {-info -warning -critical -fatal} {-f

MegaCli -AdpEventLog -IncludeDeleted {-info -warning -critical -fatal} {-f

MegaCli -AdpEventLog -GetLatest n {-info -warning -critical -fatal} {-f

MegaCli -AdpEventLog -GetCCIncon -f

MegaCli -AdpEventLog -Clear -aN|-a0,1,2|-aALL

MegaCli -AdpBbuCmd -aN|-a0,1,2|-aALL

MegaCli -AdpBbuCmd -GetBbuStatus -aN|-a0,1,2|-aALL

MegaCli -AdpBbuCmd -GetBbuCapacityInfo -aN|-a0,1,2|-aALL

MegaCli -AdpBbuCmd -GetBbuDesignInfo -aN|-a0,1,2|-aALL

MegaCli -AdpBbuCmd -GetBbuProperties -aN|-a0,1,2|-aALL

MegaCli -AdpBbuCmd -BbuLearn -aN|-a0,1,2|-aALL

MegaCli -AdpBbuCmd -BbuMfgSleep -aN|-a0,1,2|-aALL

MegaCli -AdpBbuCmd -BbuMfgSeal -aN|-a0,1,2|-aALL

MegaCli -AdpBbuCmd -getBbumodes -aN|-a0,1,2|-aALL

MegaCli -AdpBbuCmd -SetBbuProperties -f

MegaCli -AdpBbuCmd -GetGGEEPData offset [Hexaddress] NumBytes n -aN|-a0,1,2|-aALL

MegaCli -AdpBbuCmd -ScheduleLearn -Dsbl|-Info|[-STARTTIME DDD hh] -aN|-a0,1,2|-aALL

MegaCli -AdpFacDefSet -aN

MegaCli -AdpFwFlash -f filename [-ResetNow] [-NoSigChk] [-NoVerChk] [-FWTYPE n] -aN|-a0,1,2|-aALL

MegaCli -AdpGetConnectorMode -ConnectorN|-Connector0,1|-ConnectorAll -aN|-a0,1,2|-aALL

MegaCli -AdpSetConnectorMode -Internal|-External|-Auto -ConnectorN|-Connector0,1|-ConnectorAll -aN|-a0,1,2|-aALL

MegaCli -PhyErrorCounters -aN|-a0,1,2|-aALL

MegaCli -DirectPdMapping -Enbl|-Dsbl|-Dsply -aN|-a0,1,2|-aALL

MegaCli -PDCpyBk -Start -PhysDrv[E0:S0,E1:S1] -aN|-a0,1,2|-aALL

MegaCli -PDCpyBk -Stop|-Suspend|-Resume|-ShowProg|-ProgDsply -PhysDrv[E0:S0] -aN|-a0,1,2|-aALL

MegaCli -PDInstantSecureErase -PhysDrv[E0:S0,E1:S1,...] | [-Force] -aN|-a0,1,2|-aALL

MegaCli -CfgSpanAdd -rX -array0[E0:S1,E1:S1.....] array1[E0:S1,E1:S1.....] -szxxx -enblPI -aN|-a0,1,2|-aALL

MegaCli -LDMakeSecure -Lx|-L0,1,2,...|-Lall -aN|-a0,1,2|-aALL

MegaCli -DeleteSecurityKey | [-Force] -aN

MegaCli -CreateSecurityKey -Passphrase sssssssssss [-KeyID kkkkkkkkkkk] -aN

MegaCli -CreateSecurityKey useEKMS -aN

MegaCli -ChangeSecurityKey -OldPassphrase sssssssssss | -Passphrase sssssssssss |

[-KeyID kkkkkkkkkkk] -aN

MegaCli -ChangeSecurityKey -Passphrase sssssssssss |

[-KeyID kkkkkkkkkkk] -aN

MegaCli -ChangeSecurityKey useEKMS -oldPassphrase sssssssssss -aN

MegaCli -ChangeSecurityKey -useEKMS -aN

MegaCli -GetKeyID [-PhysDrv[E0:S0]] -aN

MegaCli -SetKeyID -KeyID kkkkkkkkkkk -aN

MegaCli -VerifySecurityKey -Passphrase sssssssssss -aN

MegaCli -GetPreservedCacheList -aN|-a0,1,2|-aALL

MegaCli -DiscardPreservedCache -Lx|-L0,1,2|-Lall -force -aN|-a0,1,2|-aALL

sssssssssss - It must be between eight and thirty-two

characters and contain at least one number,

one lowercase letter, one uppercase

letter and one non-alphanumeric character.

kkkkkkkkkkk - Must be less than 256 characters.

MegaCli -ShowSummary [-f filename] -aN

MegaCli -ELF -GetSafeId -aN|-a0,1,2|-aALL

MegaCli -ELF -ControllerFeatures -aN|-a0,1,2|-aALL

MegaCli -ELF -Applykey key

MegaCli -ELF -TransferToVault -aN|-a0,1,2|-aALL

MegaCli -ELF -DeactivateTrialKey -aN|-a0,1,2|-aALL

MegaCli -ELF -ReHostInfo -aN|-a0,1,2|-aALL

MegaCli -ELF -ReHostComplete -aN|-a0,1,2|-aALL

MegaCli -LDViewMirror -Lx|-L0,1,2,...|-Lall -aN|-a0,1,2|-aALL

MegaCli -LDJoinMirror -DataSrc

MegaCli -SecureErase

Start[

Simple|

[Normal [ |ErasePattern ErasePatternA|ErasePattern ErasePatternA ErasePattern ErasePatternB]]|

[Thorough [ |ErasePattern ErasePatternA|ErasePattern ErasePatternA ErasePattern ErasePatternB]]]

| Stop

| ShowProg

| ProgDsply

[-PhysDrv [E0:S0,E1:S1,...] | -Lx|-L0,1,2|-LALL] -aN|-a0,1,2|-aALL

MegaCli -Version -Cli|-Ctrl|-Driver|-Pd -aN|-a0,1,2|-aALL

MegaCli -Perfmon {-start -interval

MegaCli -DpmStat -Dsply {lct | hist | ra | ext } [-physdrv[E0:S0]] -aN|-a0,1,2|-aALL

MegaCli -DpmStat -Clear {lct | hist | ra | ext } -aN|-a0,1,2|-aALL

Note: The directly connected drives can be specified as [:S]

Wildcard '?' can be used to specify the enclosure ID for the drive in the

only enclosure without direct connected device or the direct connected

drives with no enclosure in the system.

Note:[-aALL] option assumes that the parameters specified are valid

for all the Adapters.

Note:ProgDsply option is not supported in VMWARE-COSLESS.

The following options may be given at the end of any command above:

[-Silent] [-AppLogFile filename] [-NoLog] [-page[N]]

[-] is optional.

N - Number of lines per page.

MegaCli XD -AddVd

MegaCli XD -RemVd

MegaCli XD -AddCdev

MegaCli XD -RemCdev

MegaCli XD -VdList | -Configured | -Unconfigured

MegaCli XD -CdevList | -Configured | -Unconfigured

MegaCli XD -ConfigInfo

MegaCli XD -PerfStats

MegaCli XD -OnlineVd

MegaCli XD -WarpDriveInfo -iN | -iALL

MegaCli XD -FetchSafeId -iN | -iALL

MegaCli XD -ApplyActivationKey

Exit Code: 0x00

MegaCLI Command Tutorial

This command lists all of the physical drives on adapter 0 or (-a0).

/opt/MegaRAID/MegaCli/MegaCli64 -pdlist -a0

| Useful Items | |

| Slot Number: | the physical slot the drive occupies in the server |

| Raw Size: | the size of the disk in GB |

Notable things

Slot Number:

Notice it will list the slot# so you know the physical position of the drive in the server.

Adapter #0

Enclosure Device ID: 32

Slot Number: 1

Enclosure position: 1

Device Id: 1

WWN: 5000C5003A260844

Sequence Number: 1

Media Error Count: 0

Other Error Count: 0

Predictive Failure Count: 0

Last Predictive Failure Event Seq Number: 0

PD Type: SAS

Raw Size: 279.396 GB [0x22ecb25c Sectors]

Non Coerced Size: 278.896 GB [0x22dcb25c Sectors]

Coerced Size: 278.875 GB [0x22dc0000 Sectors]

Sector Size: 0

Firmware state: Unconfigured(good), Spun Up

Device Firmware Level: FS64

Shield Counter: 0

Successful diagnostics completion on : N/A

SAS Address(0): 0x5000c5003a260845

SAS Address(1): 0x0

Connected Port Number: 1(path0)

Inquiry Data: SEAGATE ST9300603SS FS646SE3T176

FDE Capable: Not Capable

FDE Enable: Disable

Secured: Unsecured

Locked: Unlocked

Needs EKM Attention: No

Foreign State: None

Device Speed: 6.0Gb/s

Link Speed: 6.0Gb/s

Media Type: Hard Disk Device

Drive Temperature :28C (82.40 F)

PI Eligibility: No

Drive is formatted for PI information: No

PI: No PI

Port-0 :

Port status: Active

Port's Linkspeed: 6.0Gb/s

Port-1 :

Port status: Active

Port's Linkspeed: Unknown

Drive has flagged a S.M.A.R.T alert : No

MegaRAID Create RAID 10 Example

First we need the slot numbers of our drives. In this example the server only had 4 drives and I wanted to use all of them, so grepping for "slot" and using every result was fine.

Get Slot Numbers of Drives

MegaCli64 -PDList -aALL|grep -i slot

Slot Number: 1

Slot Number: 3

Slot Number: 5

Slot Number: 7

Use the slot numbers from above later on in our array creation.

Get "Enclosure Device ID":

MegaCli64 -PDList -aALL|grep -i enclosure

Enclosure Device ID: 32

Enclosure position: 1

Enclosure Device ID: 32

Enclosure position: 1

Enclosure Device ID: 32

Enclosure position: 1

Enclosure Device ID: 32

Enclosure position: 1

In our case we can see the enclosure ID is 32
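The slot and enclosure lookups above can be combined into the E:S list that the array commands expect. This is a minimal sketch, assuming PDList prints "Enclosure Device ID" before "Slot Number" for each drive (as in the output earlier). pd_pairs is a hypothetical helper name, not part of MegaCli.

```shell
# Turn "MegaCli64 -PDList" output (read from stdin) into a comma-separated E:S list.
# pd_pairs is an illustrative helper, not a MegaCli feature.
pd_pairs() {
    awk -F': *' '
        /^Enclosure Device ID/ { enc = $2 }                               # remember the current enclosure
        /^Slot Number/         { printf "%s%s:%s", sep, enc, $2; sep = "," }
        END                    { print "" }
    '
}

# Real usage (needs the controller): MegaCli64 -PDList -aALL | pd_pairs
# Demo with values like the ones shown above:
printf 'Enclosure Device ID: 32\nSlot Number: 1\nEnclosure Device ID: 32\nSlot Number: 3\n' | pd_pairs
# prints 32:1,32:3
```

The result can be pasted inside the square brackets of the array creation commands.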

Create RAID 10 Array

The CfgSpanAdd command is required to create a RAID 10 array.

-r10 specifies RAID 10.

The -Array0 and -Array1 arguments are required because RAID 10 is, in theory, two RAID 1 mirrors combined into a RAID 0 stripe.

Note that in the brackets, 32:1 is based on the fact from above that our enclosure ID is "32", and the 1 is the slot number of a disk we want to use. Be sure to adjust accordingly.

MegaCli64 -CfgSpanAdd -r10 -Array0[32:1,32:3] -Array1[32:5,32:7] -a0

Adapter 0: Created VD 0

Adapter 0: Configured the Adapter!!

Exit Code: 0x00

Success in RAID 10

You will now see that you have a new device that automatically appears as the next available drive letter.

Disk /dev/sdc: 557.77 GiB, 598879502336 bytes, 1169686528 sectors

Disk model: PERC H710P

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

MegaRAID RAID 0 Example

MegaCli64 -CfgLdAdd -r0[32:5] -a0

The above creates a RAID 0 array out of a single drive located in enclosure 32 and slot#5 on adapter 0.

This is the approach to take if you want a simpler setup and better performance by using mdadm to build your own array on top.

Of course you could specify multiple drives if you truly wanted a fast but totally unreliable multi-disk RAID 0 array (eg. -r0[32:5,32:4]).

Check array/rebuild state:

./MegaCli64 -LDInfo -LALL -aALL

Adapter 0 -- Virtual Drive Information:

Virtual Drive: 0 (Target Id: 0)

Name :

RAID Level : Primary-1, Secondary-0, RAID Level Qualifier-0

Size : 557.75 GB

Sector Size : 512

Mirror Data : 557.75 GB

State : Optimal

Strip Size : 64 KB

Number Of Drives per span:2

Span Depth : 2

Default Cache Policy: WriteBack, ReadAdaptive, Direct, No Write Cache if Bad BBU

Current Cache Policy: WriteBack, ReadAdaptive, Direct, No Write Cache if Bad BBU

Default Access Policy: Read/Write

Current Access Policy: Read/Write

Disk Cache Policy : Disk's Default

Ongoing Progresses:

Background Initialization: Completed 24%, Taken 10 min.

Encryption Type : None

Default Power Savings Policy: Controller Defined

Current Power Savings Policy: None

Can spin up in 1 minute: Yes

LD has drives that support T10 power conditions: Yes

LD's IO profile supports MAX power savings with cached writes: No

Bad Blocks Exist: No

Is VD Cached: Yes

Cache Cade Type : Read Only

MegaRAID Create Single Disk RAID 0 Example

If you don't want to use the entire RAID function and want to use mdadm, you can create a fake RAID 0 of each drive to expose it as a sort of normal drive and then make a RAID out of that for use with mdadm. mdadm usually gives better performance, especially in RAID 10.

For example using an old 4 disk RAID 10 array with MegaRAID it produces only 249MB/s:

5242880000 bytes (5.2 GB, 4.9 GiB) copied, 21.0175 s, 249 MB/s

Now compare the same 4 disks with mdadm RAID 10 with virtual raid 0 drives

5242880000 bytes (5.2 GB, 4.9 GiB) copied, 16.2648 s, 322 MB/s

Note that this test was done while the array was still initializing too!

Look at how much faster the fully sync'd mdadm RAID 10 is (about 2x the speed of the MegaRAID RAID 10):

5242880000 bytes (5.2 GB, 4.9 GiB) copied, 10.412 s, 504 MB/s
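For reference, dd's MB/s figure is simply bytes divided by seconds (with 1 MB = 1,000,000 bytes), so the comparison can be reproduced from the three result lines above. This is a small sketch, and mbps is a hypothetical helper name.

```shell
# mbps BYTES SECONDS -> throughput in MB/s (1 MB = 1,000,000 bytes), rounded
mbps() {
    awk -v b="$1" -v s="$2" 'BEGIN { printf "%.0f\n", b / s / 1000000 }'
}

mbps 5242880000 21.0175   # MegaRAID RAID 10               -> 249
mbps 5242880000 16.2648   # mdadm RAID 10, initializing    -> 322
mbps 5242880000 10.412    # mdadm RAID 10, fully synced    -> 504

# Speed ratio of the synced mdadm array to the MegaRAID array (same byte count,
# so the ratio of the elapsed times is enough)
awk 'BEGIN { printf "%.2fx\n", 21.0175 / 10.412 }'   # -> 2.02x
```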

Create a single-drive RAID 0 for each PD (physical drive) using the enclosure ID and slot ID, for example [32:1].

MegaCli64 CfgLdAdd -r0 [32:1] -a0

Adapter 0: Created VD 0

Adapter 0: Configured the Adapter!!

Exit Code: 0x00

MegaCli64 CfgLdAdd -r0 [32:3] -a0

Adapter 0: Created VD 1

Adapter 0: Configured the Adapter!!

Exit Code: 0x00

MegaCli64 CfgLdAdd -r0 [32:5] -a0

Adapter 0: Created VD 2

Adapter 0: Configured the Adapter!!

Exit Code: 0x00

MegaCli64 CfgLdAdd -r0 [32:7] -a0

Adapter 0: Created VD 3

Adapter 0: Configured the Adapter!!

After this, just do the normal mdadm setup across the new disks (in my case the above created /dev/sdc, /dev/sdd, /dev/sde and /dev/sdf).
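The mdadm step could look like the following sketch. It only prints the command rather than running it, since mdadm --create overwrites the member disks. /dev/md0 and the helper name md_create_cmd are assumptions, and the device names are the four mentioned above.

```shell
# Print (do not run) an mdadm RAID 10 create command for the given member disks.
# Usage: md_create_cmd MD_DEVICE DISK...
# md_create_cmd and /dev/md0 are illustrative choices, not from the article.
md_create_cmd() {
    md=$1
    shift                      # the remaining arguments are the member disks
    echo "mdadm --create $md --level=10 --raid-devices=$# $*"
}

md_create_cmd /dev/md0 /dev/sdc /dev/sdd /dev/sde /dev/sdf
# prints: mdadm --create /dev/md0 --level=10 --raid-devices=4 /dev/sdc /dev/sdd /dev/sde /dev/sdf
```

Review the printed command, then run it as root once you are sure the device names are correct. Sync progress can be watched in /proc/mdstat.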

How To Delete Virtual Drive

For example above we created the Virtual Drive with ID "0"

Virtual Drive: 0 (Target Id: 0)

./MegaCli64 CfgLdDel -L0 -Force -a0

The 0 in -L0 means logical drive 0 from above. Change to match your ID.

If you don't use -Force before -a0 you get this error: Virtual Disk is associate with Cache Cade. Please Use force option to delete

Adapter 0: Deleted Virtual Drive-0(target id-0)

How to use smartctl normally with megaraid

One other irritating thing about RAID controllers like MegaRAID is that SMART doesn't work as you would expect. Querying a virtual drive such as /dev/sdc directly doesn't show anything useful.

With smartctl you can use the megaraid option/plugin and specify the drive#/slot# and get normal info like this:

Note below that the magic is in -d megaraid,3, which says we want the SMART info for MegaRAID drive #3 (this number is the controller's Device Id for the disk, which often matches the slot number). This then gives us the normal expected info.

smartctl -a /dev/sdc -d megaraid,3 -T permissive

smartctl 7.1 2019-12-30 r5022 [x86_64-linux-5.4.0-26-generic] (local build)

Copyright (C) 2002-19, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Vendor: IBM-ESXS

Product: ST9300603SS F

Revision: B53A

Compliance: SPC-3

User Capacity: 300,000,000,000 bytes [300 GB]

Logical block size: 512 bytes

Rotation Rate: 10000 rpm

Form Factor: 2.5 inches

Logical Unit id: 0x5000c5001dbdea07

Serial number: 3SE1MFK400009035N4B3

Device type: disk

Transport protocol: SAS (SPL-3)

Local Time is: Mon Mar 24 20:16:16 2025 UTC

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

Temperature Warning: Enabled

=== START OF READ SMART DATA SECTION ===

SMART Health Status: OK

Current Drive Temperature: 0 C

Drive Trip Temperature: 0 C

Elements in grown defect list: 0

Error Counter logging not supported

Device does not support Self Test logging

Convert-im6.q16: attempt to perform an operation not allowed by the security policy `PDF' @ error/constitute.c/IsCoderAuthorized/413. convert-im6.q16: no images defined `pts-time.jpg' @ error/convert.c/ConvertImageCommand/3258. solution ImageMagick P

Were you trying to convert a PDF and get this message?:

Convert-im6.q16: attempt to perform an operation not allowed by the security policy `PDF' @ error/constitute.c/IsCoderAuthorized/413.

convert-im6.q16: no images defined `pts-time.jpg' @ error/convert.c/ConvertImageCommand/3258.

Solution:

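Edit the ImageMagick policy file (with the ImageMagick 6 packages on Ubuntu/Mint this is normally /etc/ImageMagick-6/policy.xml), find the "PDF" pattern and set it like below: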

<policy domain="coder" rights="read|write" pattern="PDF" />

By default the rights are none (rights="none") which is why you get that error.
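The same change can be scripted with sed. This is a sketch, assuming the policy file is /etc/ImageMagick-6/policy.xml (verify the path on your system) and that the PDF line currently has rights="none". allow_pdf is a hypothetical helper, demonstrated here on a temporary file.

```shell
# Switch the PDF coder policy from "none" to "read|write" in the given file.
# A backup of the original is kept next to it with a .bak suffix.
allow_pdf() {
    sed -i.bak '/pattern="PDF"/ s/rights="none"/rights="read|write"/' "$1"
}

# Demo on a throwaway file containing the default line
tmp=$(mktemp)
printf '%s\n' '<policy domain="coder" rights="none" pattern="PDF" />' > "$tmp"
allow_pdf "$tmp"
cat "$tmp"
rm -f "$tmp" "$tmp.bak"

# Real usage (as root, path is an assumption): allow_pdf /etc/ImageMagick-6/policy.xml
```

Only the line matching pattern="PDF" is touched, so other coders (PS, EPS and so on) keep their existing rights.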

Apache PHP sending expires header solution cannot use cache with CDN

Clients often ask why their CDN is not caching their site. It is often a PHP setting that causes the header below to be sent:

expires: Thu, 19 Nov 1981 08:52:00 GMT

Solution: Edit your /etc/php.ini

Set the option below to be empty. Generally the default is nocache, which results in the expires header from 1981 being sent.

session.cache_limiter =

Here is what the php.ini comments say about the option:

; Set to {nocache,private,public,} to determine HTTP caching aspects

; or leave this empty to avoid sending anti-caching headers.

; http://php.net/session.cache-limiter

However, we have often seen that any value other than empty results in the expires header being sent. If you want your content to be cacheable by a CDN, make sure session.cache_limiter is set to an empty value.
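To confirm the fix from the outside, you can check the response headers for the 1981 date. This is a minimal sketch. has_legacy_expires is a hypothetical helper and https://example.com/ is a placeholder URL.

```shell
# Succeeds (exit 0) if the headers on stdin contain PHP's 1981 anti-caching Expires value.
has_legacy_expires() {
    grep -qi '^expires: Thu, 19 Nov 1981'
}

# Real usage (placeholder URL):
#   curl -sI https://example.com/ | has_legacy_expires && echo "still sending the 1981 expires header"

# Demo with the header quoted above:
if printf 'expires: Thu, 19 Nov 1981 08:52:00 GMT\r\n' | has_legacy_expires; then
    echo "anti-caching header present"
fi
```

Remember to reload Apache/PHP-FPM after editing php.ini before testing again.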

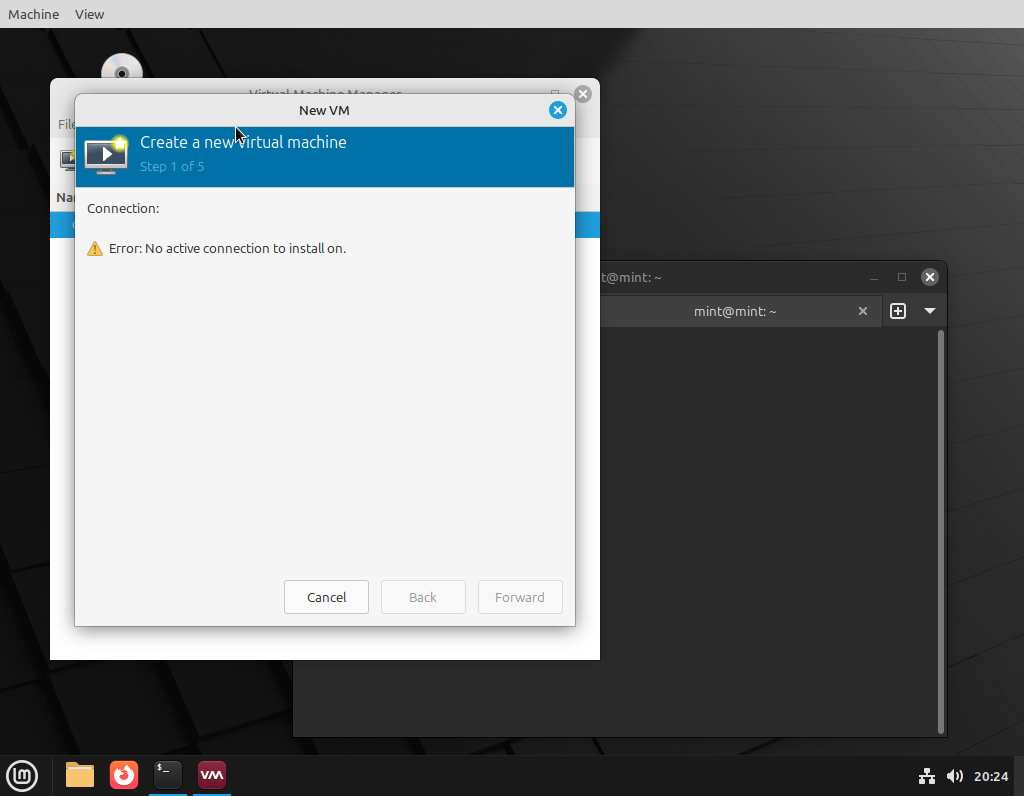

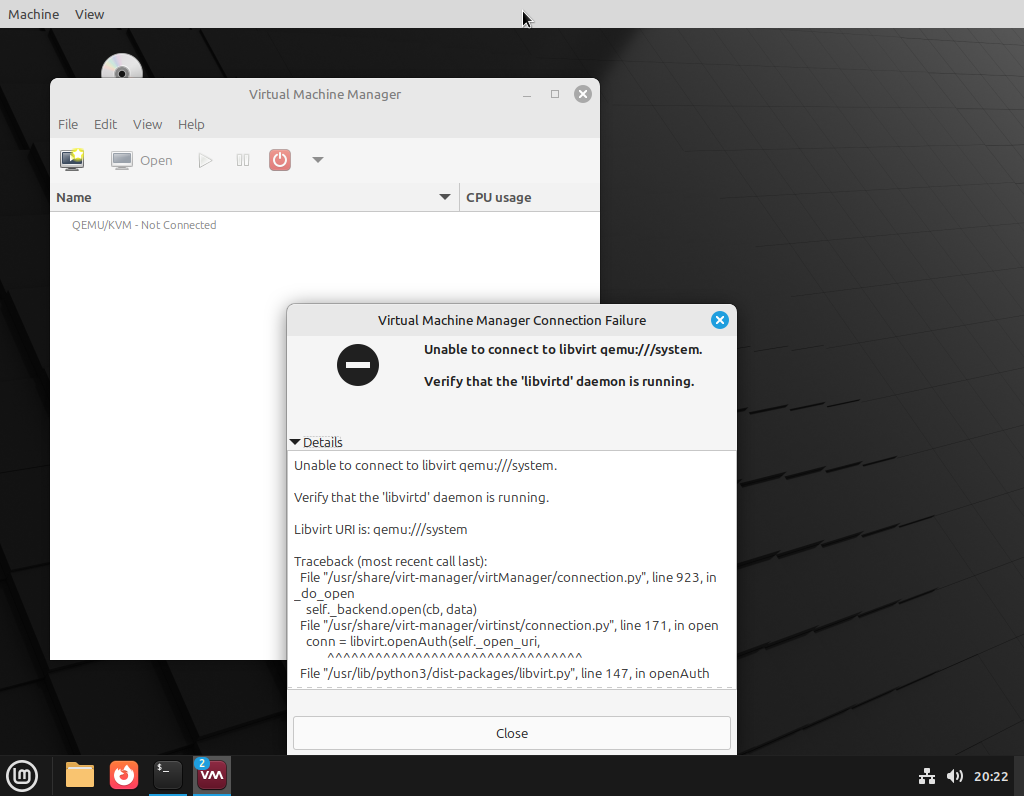

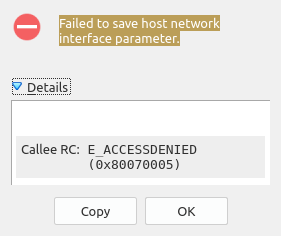

How to install virt-manager in Mint 22/Ubuntu 22

Step 1.) Install virt-manager

sudo apt install virt-manager

Step 2.) Start libvirtd

sudo systemctl start libvirtd

sudo systemctl enable libvirtd

Step 3.) Permissions

Your user needs to be in the libvirt and kvm groups, or virt-manager won't work unless it is run with sudo.

sudo usermod -a -G kvm yourusername

sudo usermod -a -G libvirt yourusername

Step 4.) Logout and Login

If you get errors relating to being unable to connect to QEMU or no active connection, it is probably a permissions issue.

If you get errors about being unable to connect to libvirtd, it is probably not started.
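A quick way to rule out the permissions issue is to check the group list of your current login session. This is a small sketch, and missing_groups is a hypothetical helper name.

```shell
# Print which of the required groups (kvm, libvirt) are absent from a
# space-separated group list such as the output of "id -nG".
missing_groups() {
    have=" $1 "
    for g in kvm libvirt; do
        case $have in
            *" $g "*) ;;                    # group present, nothing to report
            *) printf '%s\n' "$g" ;;        # group missing
        esac
    done
}

# Check the current session; no output means both groups are active
missing_groups "$(id -nG)"
```

If a group is still listed after running usermod, the session has not picked up the change yet, which is why the logout/login step matters.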

Infiniband Guide

A practical guide for admins who need to plan the required amount of bandwidth and which connectors/cards are needed for each standard.

| Standard | Speed | Cable/Connector |

| SDR | 8G | SFP |

| DDR | 20/16G | SFP, QSFP |

| QDR | 40/32G | QSFP |

| FDR | 56G | QSFP |

| EDR | 100G | QSFP28 |

| HDR | 200G | QSFP56 |

| NDR | 400G | QSFP-DD |

| XDR | 800G | OSFP, QSFP-DD |

Where two speeds are listed, the first is the signaling rate and the second is the usable data rate of a 4x link.

python mysql install error: /bin/sh: 1: mysql_config: not found /bin/sh: 1: mariadb_config: not found /bin/sh: 1: mysql_config: not found mysql_config --version

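These errors are usually caused by missing MySQL/MariaDB client development files, which provide the mysql_config / mariadb_config tools.

If using mariadb install this (on newer Debian/Ubuntu releases the package may be named libmariadb-dev instead):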

apt-get install libmariadbclient-dev

If using mysql install this:

apt-get install libmysqlclient-dev

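After installing the dev files, retry the install. The output below is the failing run from before the fix, for reference:

pip3 install mysql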

The directory '/root/.cache/pip/http' or its parent directory is not owned by the current user and the cache has been disabled. Please check the permissions and owner of that directory. If executing pip with sudo, you may want sudo's -H flag.

The directory '/root/.cache/pip' or its parent directory is not owned by the current user and caching wheels has been disabled. check the permissions and owner of that directory. If executing pip with sudo, you may want sudo's -H flag.

Collecting mysql

Downloading https://files.pythonhosted.org/packages/9a/52/8d29c58f6ae448a72fbc612955bd31accb930ca479a7ba7197f4ae4edec2/mysql-0.0.3-py3-none-any.whl

Collecting mysqlclient (from mysql)

Downloading https://files.pythonhosted.org/packages/50/5f/eac919b88b9df39bbe4a855f136d58f80d191cfea34a3dcf96bf5d8ace0a/mysqlclient-2.1.1.tar.gz (88kB)

100% |████████████████████████████████| 92kB 5.5MB/s

Complete output from command python setup.py egg_info:

/bin/sh: 1: mysql_config: not found

/bin/sh: 1: mariadb_config: not found

/bin/sh: 1: mysql_config: not found

mysql_config --version

mariadb_config --version

mysql_config --libs

Traceback (most recent call last):

File "

File "/tmp/pip-install-twfzngc5/mysqlclient/setup.py", line 15, in

metadata, options = get_config()

File "/tmp/pip-install-twfzngc5/mysqlclient/setup_posix.py", line 70, in get_config

libs = mysql_config("libs")

File "/tmp/pip-install-twfzngc5/mysqlclient/setup_posix.py", line 31, in mysql_config

raise OSError("{} not found".format(_mysql_config_path))

OSError: mysql_config not found

----------------------------------------

Command "python setup.py egg_info" failed with error code 1 in /tmp/pip-install-twfzngc5/mysqlclient/
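Before retrying pip, it can help to confirm that one of the config tools named in the error is actually on the PATH. This is a minimal sketch, and find_db_config is a hypothetical helper name.

```shell
# Print the first of mysql_config / mariadb_config found on the PATH.
# Returns non-zero if neither tool is installed.
find_db_config() {
    for tool in mysql_config mariadb_config; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
            return 0
        fi
    done
    return 1
}

if tool=$(find_db_config); then
    echo "found $tool, pip should be able to build mysqlclient"
else
    echo "neither tool found, install the client dev package first"
fi
```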

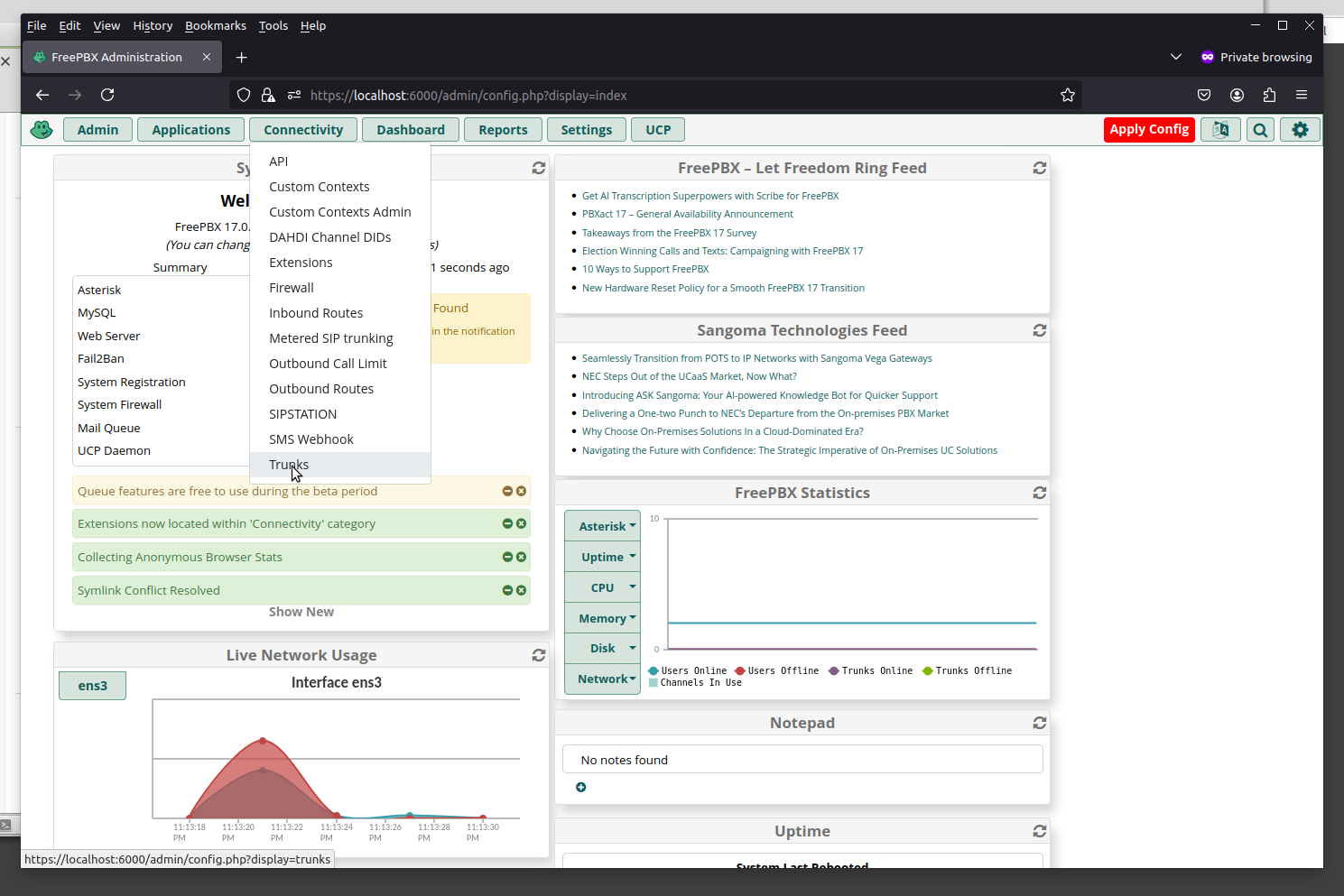

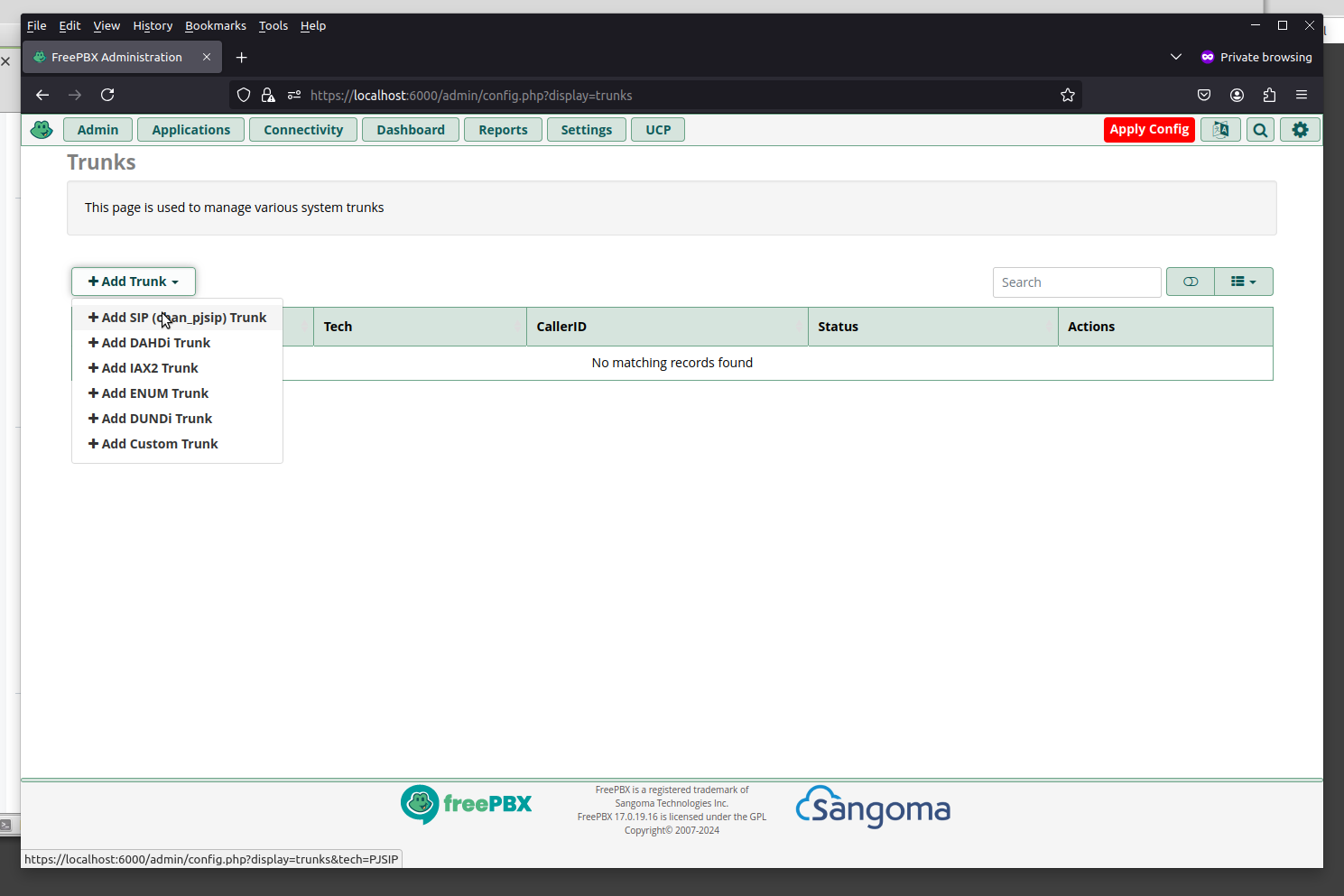

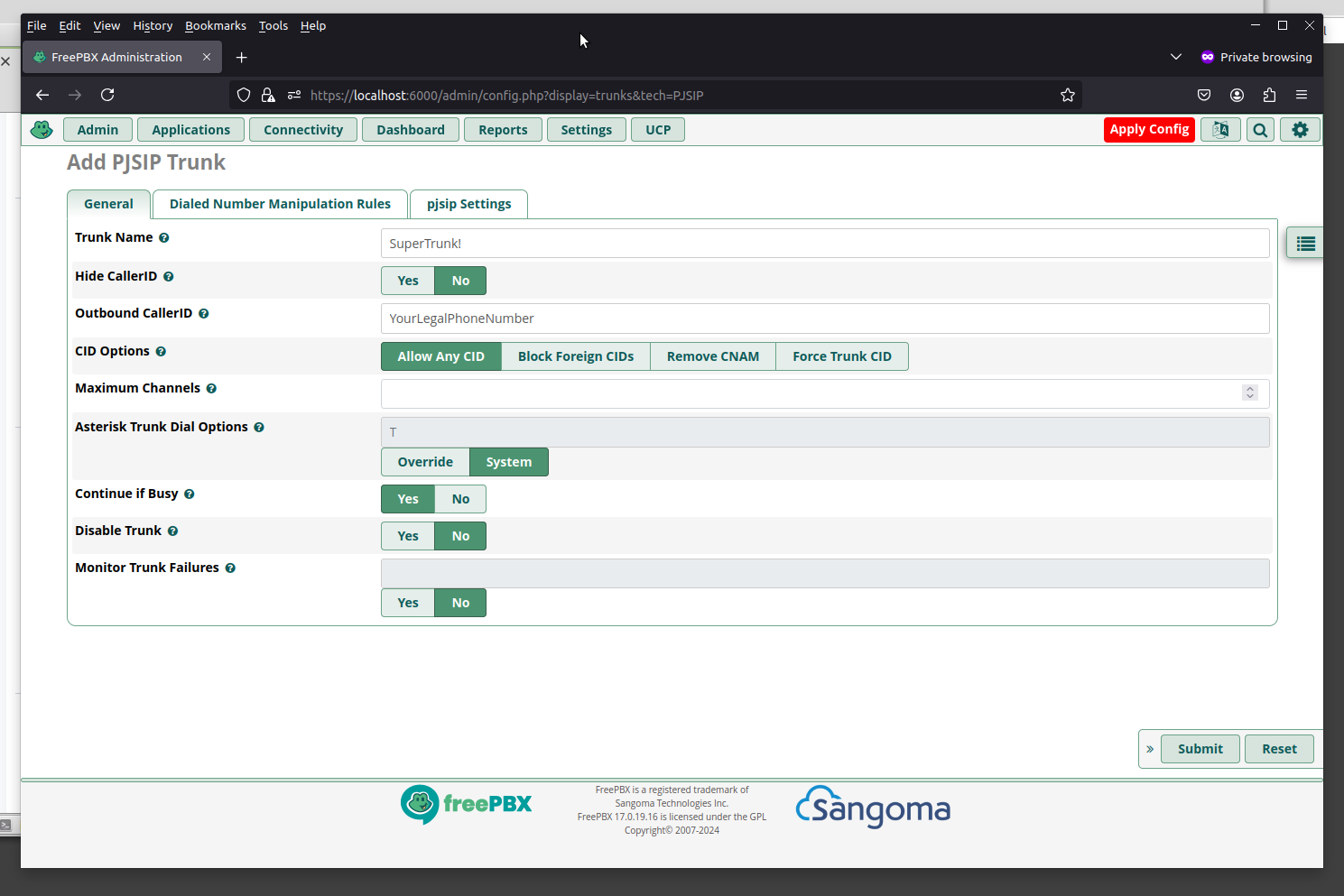

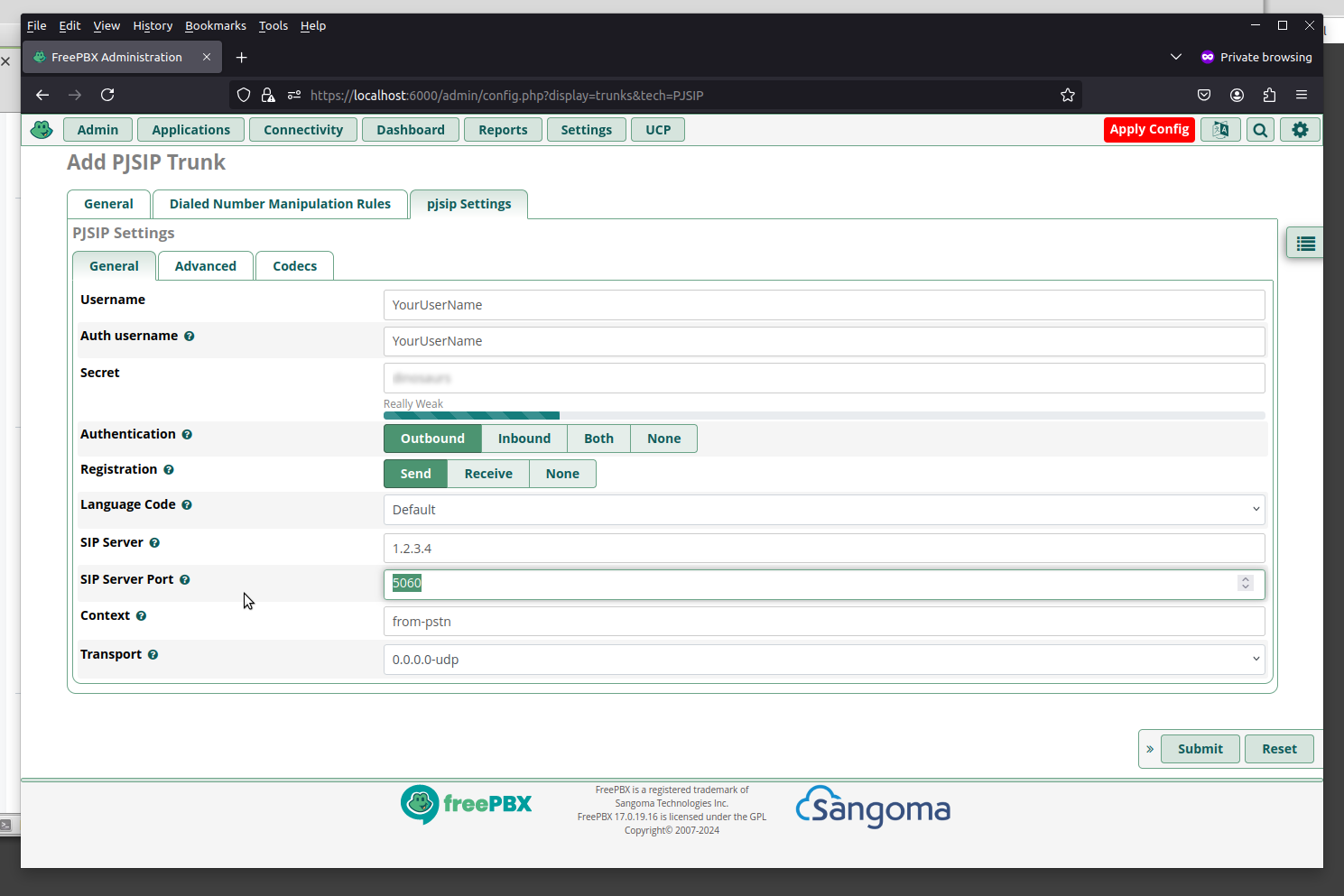

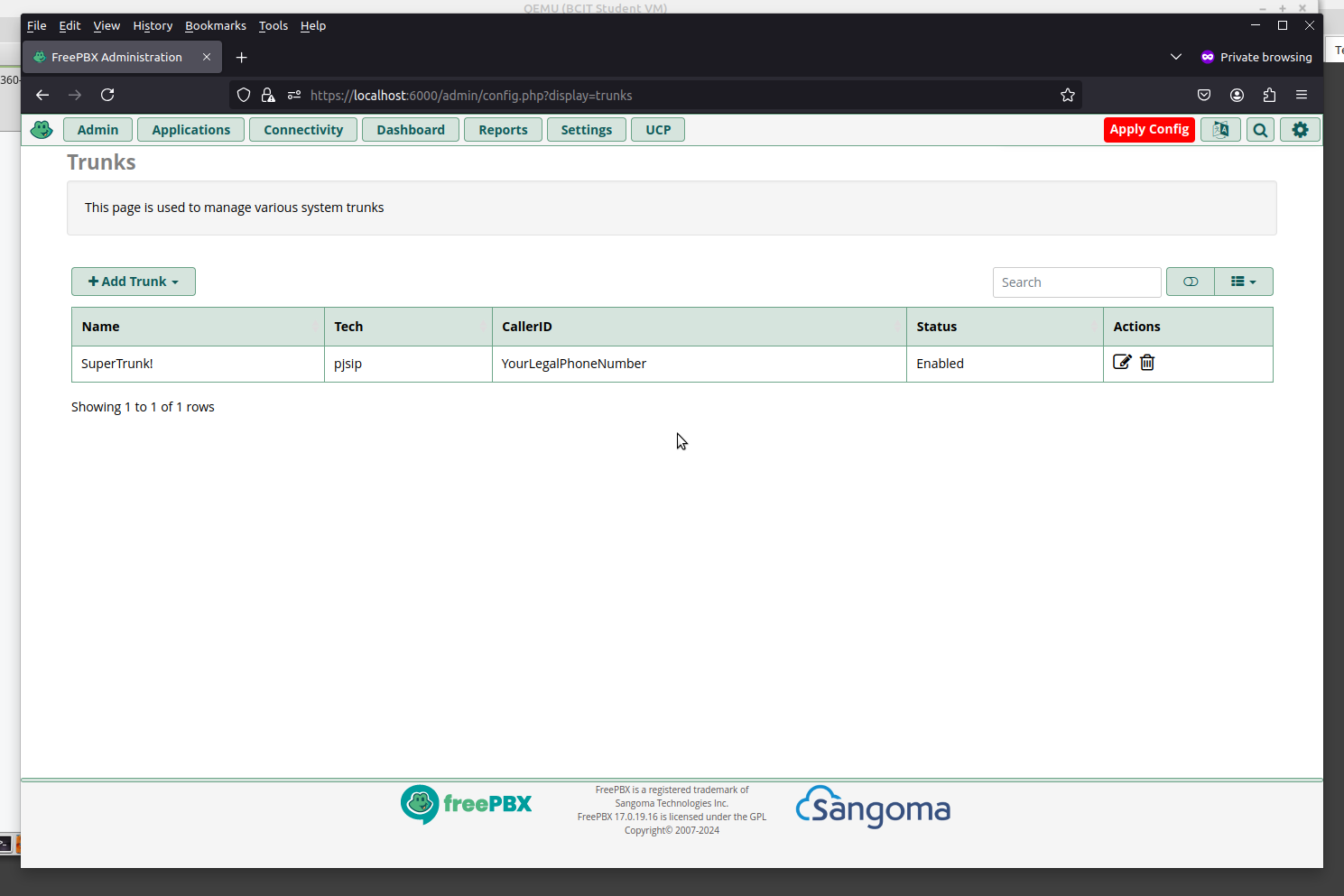

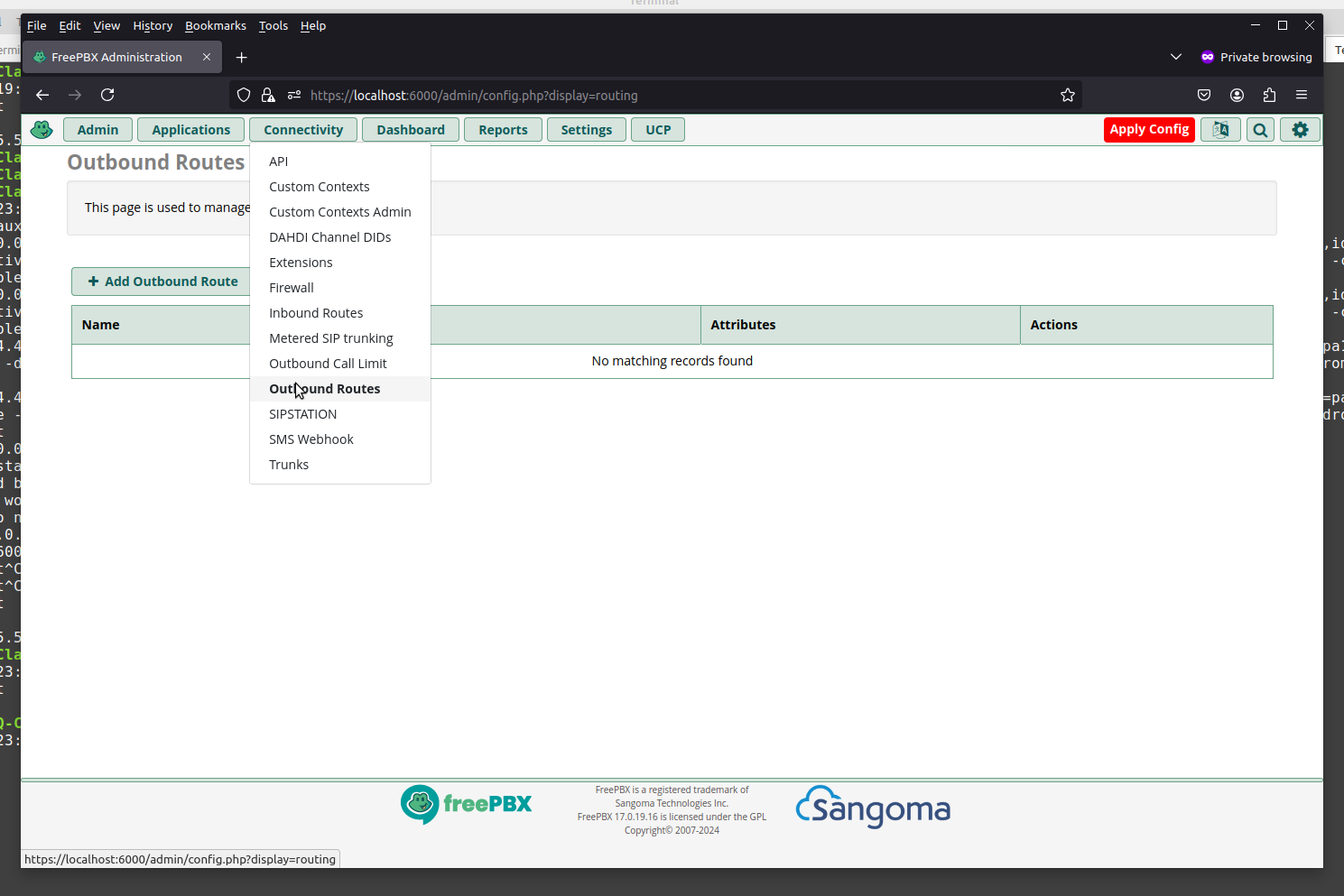

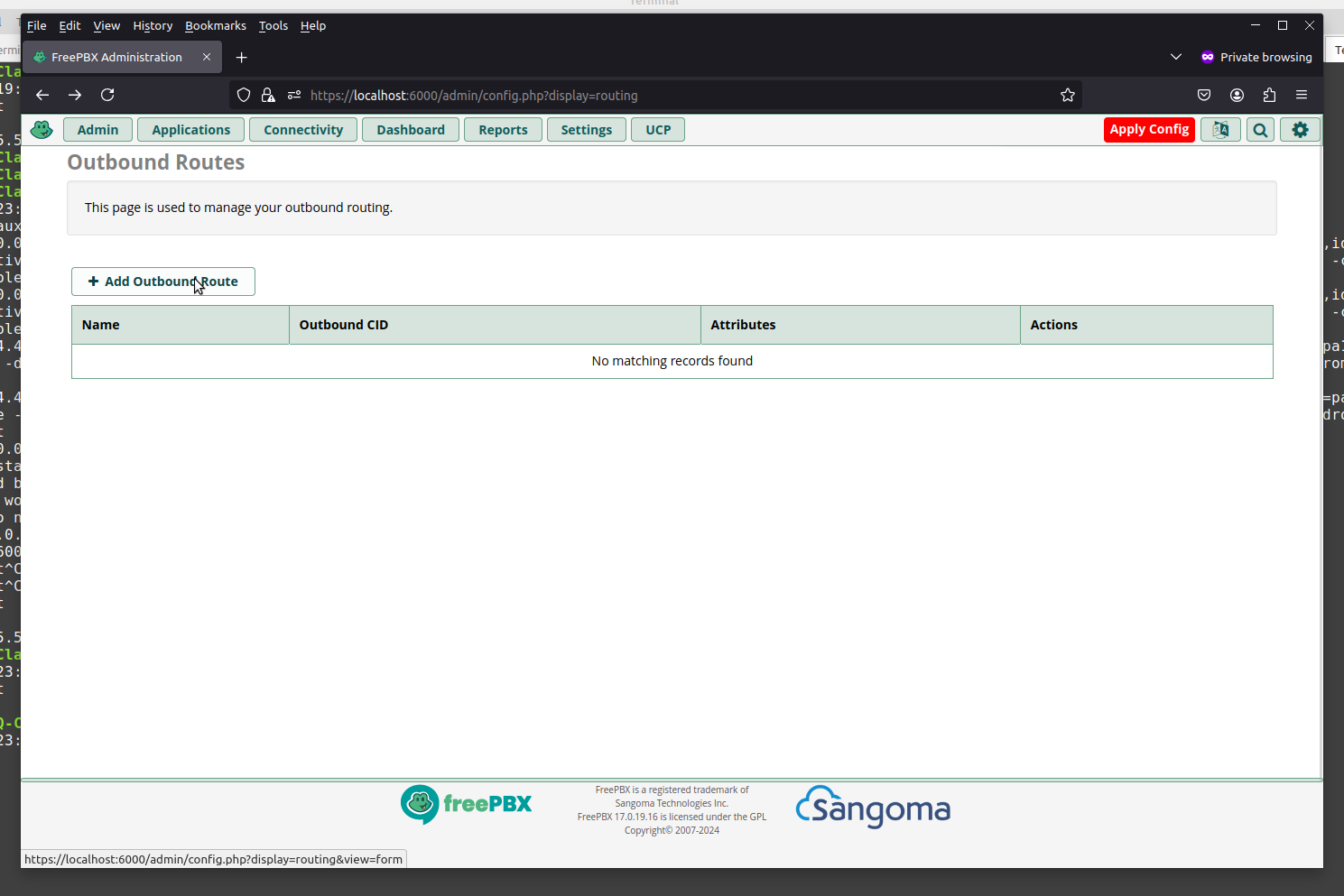

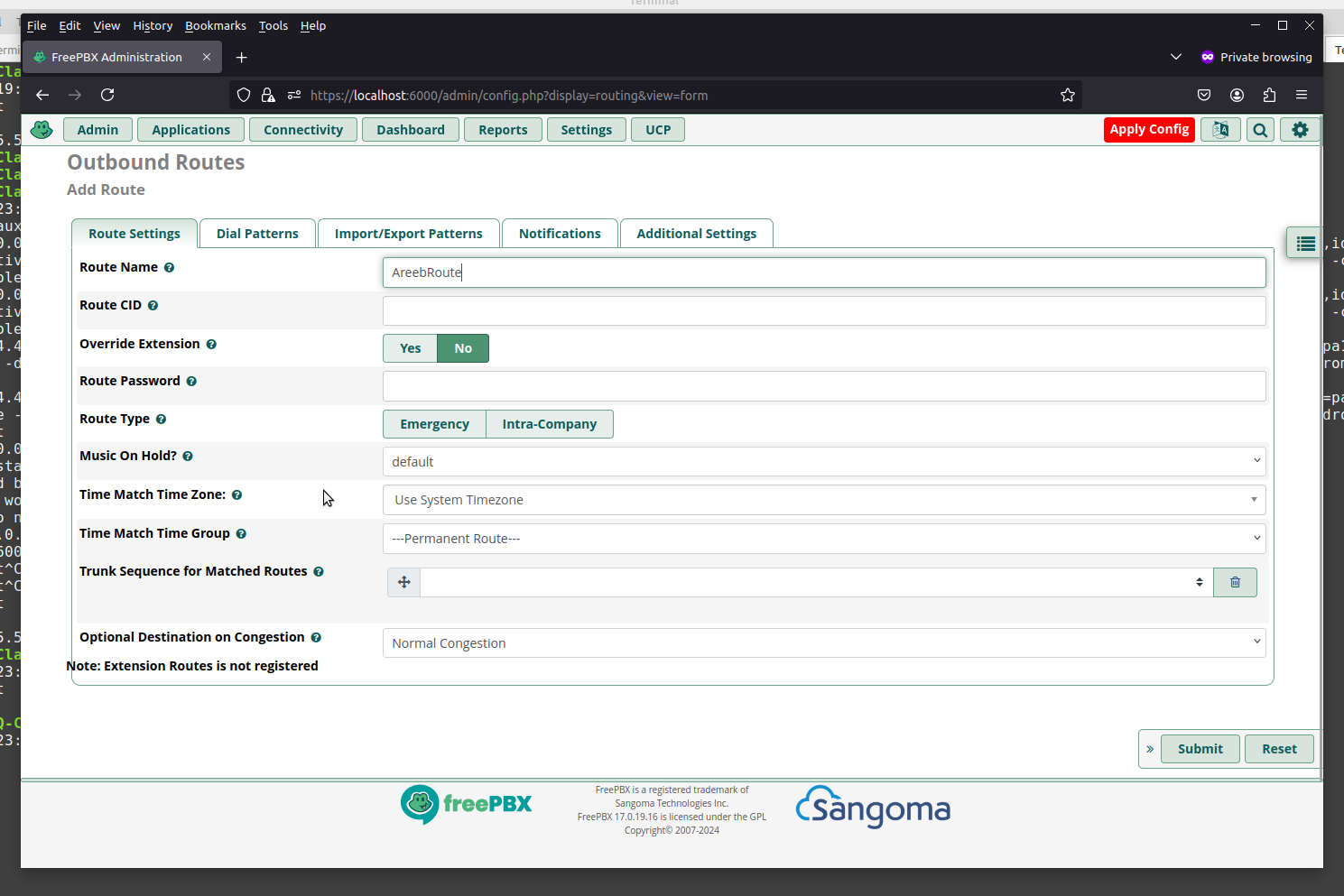

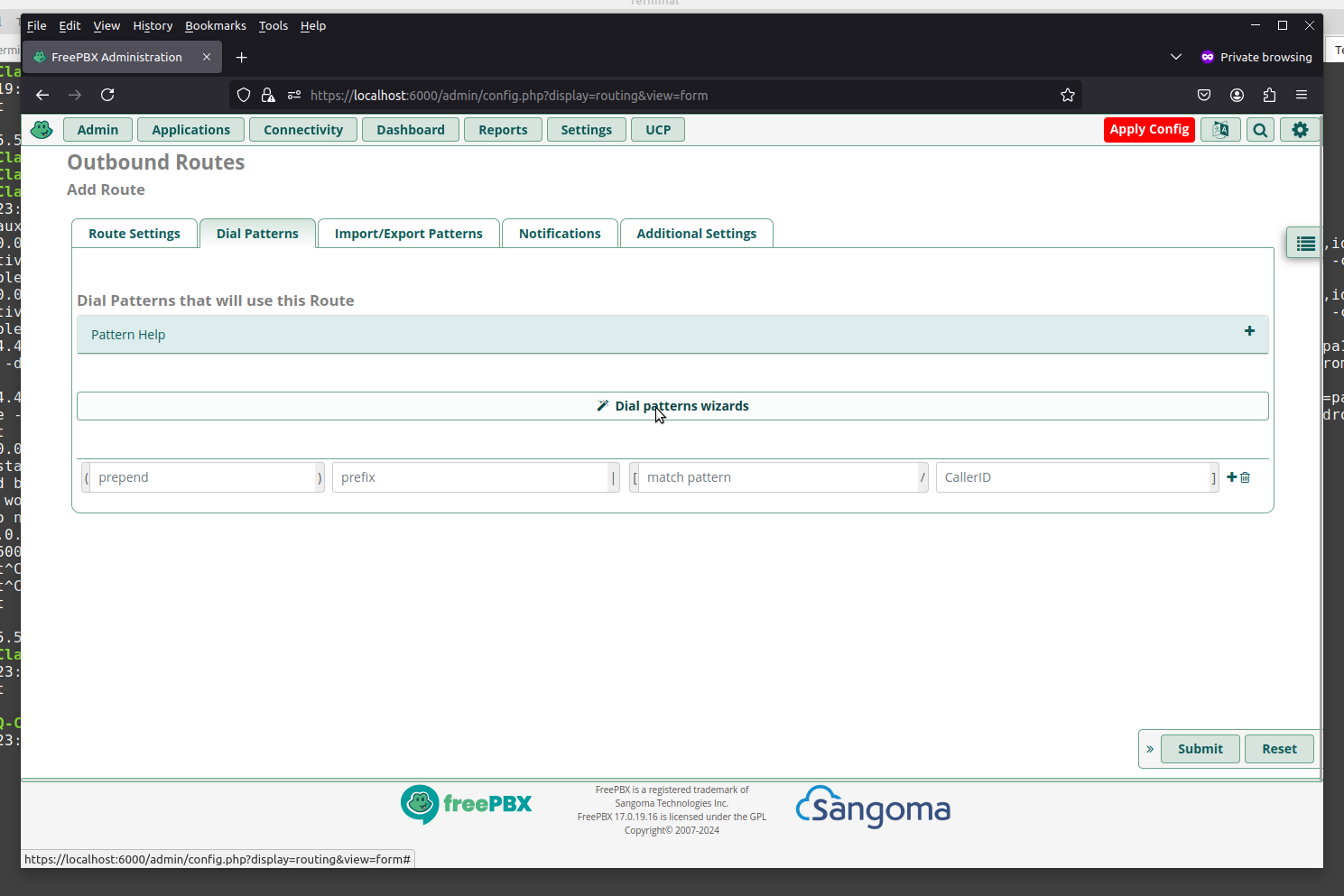

FreePBX 17 How To Add a Trunk

Make sure you choose your Trunk under "Trunk Sequence for Matched Routes"

Setup Your Dial-Patterns Using the Wizard

Remember to Click Submit and then Apply

Docker Container Onboot Policy - How to make sure a container is always running

By default, most containers are not set to start automatically.

There are 4 settings for the "RestartPolicy" of containers:

no: Do not automatically restart the container (default).

always: Always restart the container regardless of the exit status.

unless-stopped: Always restart the container unless it is explicitly stopped.

on-failure: Restart the container only if it exits with a non-zero status.

Here is how you can see the policy:

docker ps -aq | xargs -I {} docker inspect --format='{{.Name}}: {{.HostConfig.RestartPolicy.Name}}' {}

We can see the output of all containers on the node and in the case below we see that all 3 are set to "no".

/festive_wiles: no

/magical_goldberg: no

/angry_hamilton: no

Say we wanted "festive_wiles" to always be running (eg. if the server/node is rebooted we want the container to start up automatically):

docker update --restart=always festive_wiles

How can we set these startup/restart settings when creating the container?

We just specify --restart= with one of the policy names (they are lowercase):

docker run --name YourContainerName --restart=always -d nginx
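If several containers need the same treatment, the "name: policy" lines from the inspect command above can be filtered for the ones still set to "no". This is a sketch only. no_restart_containers is a hypothetical helper, and the update loop in the comment assumes you really do want every such container set to always.

```shell
# Read "/name: policy" lines on stdin and print the names (without the leading
# slash) of containers whose restart policy is "no".
no_restart_containers() {
    awk -F': ' '$2 == "no" { sub(/^\//, "", $1); print $1 }'
}

# Real usage (needs docker):
#   docker ps -aq \
#     | xargs -I {} docker inspect --format='{{.Name}}: {{.HostConfig.RestartPolicy.Name}}' {} \
#     | no_restart_containers \
#     | while read -r name; do docker update --restart=always "$name"; done

# Demo with sample lines:
printf '/festive_wiles: no\n/magical_goldberg: always\n/angry_hamilton: no\n' | no_restart_containers
```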

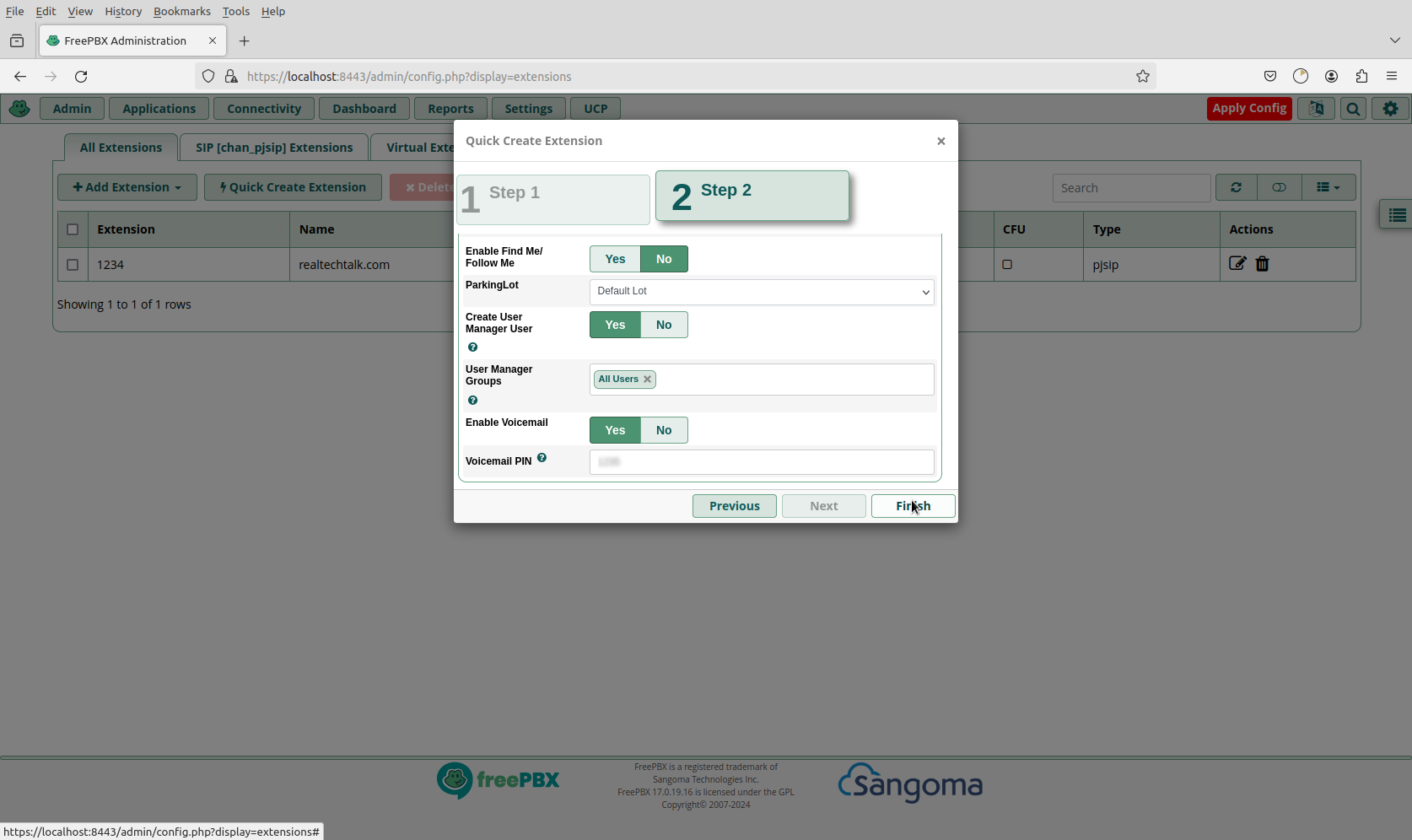

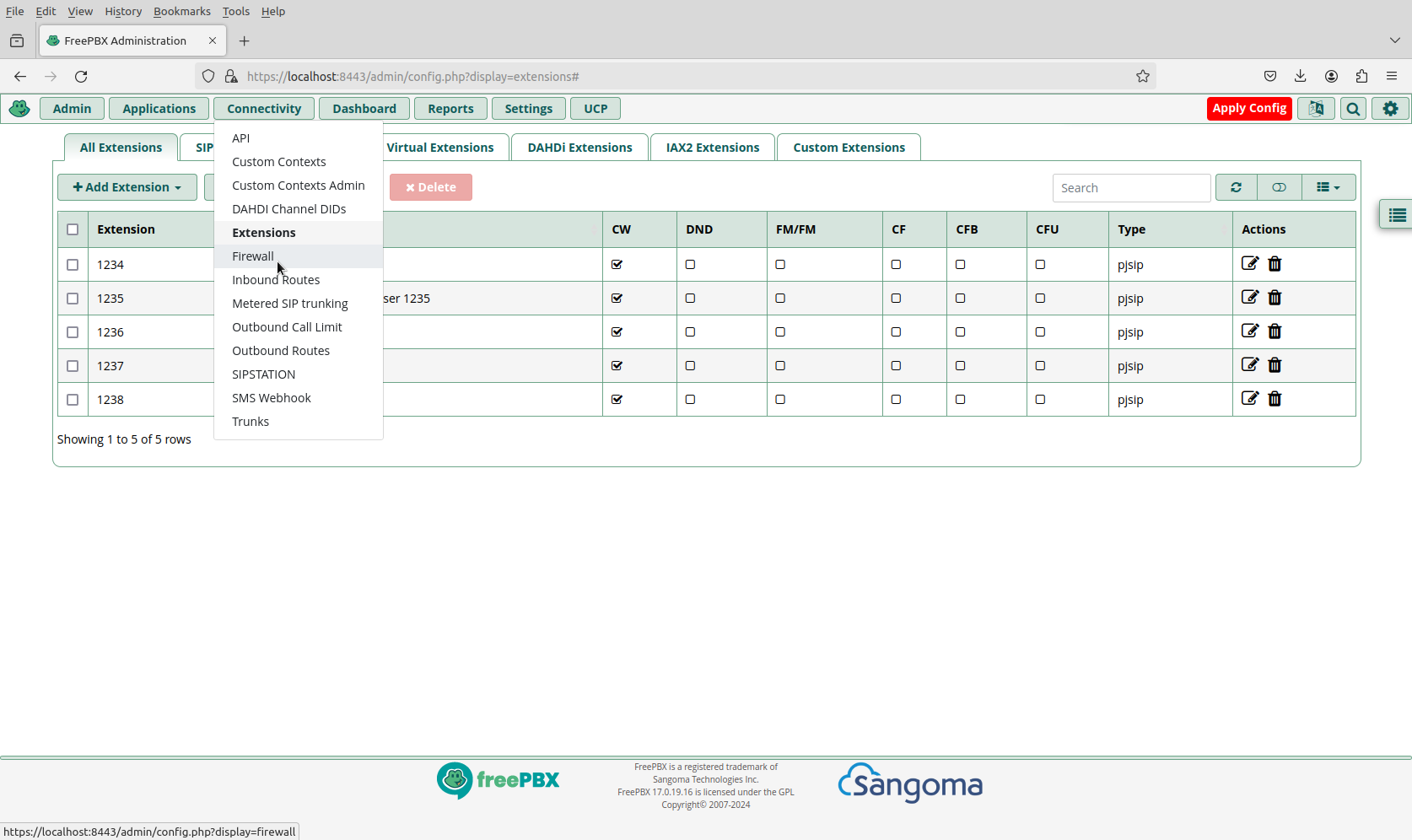

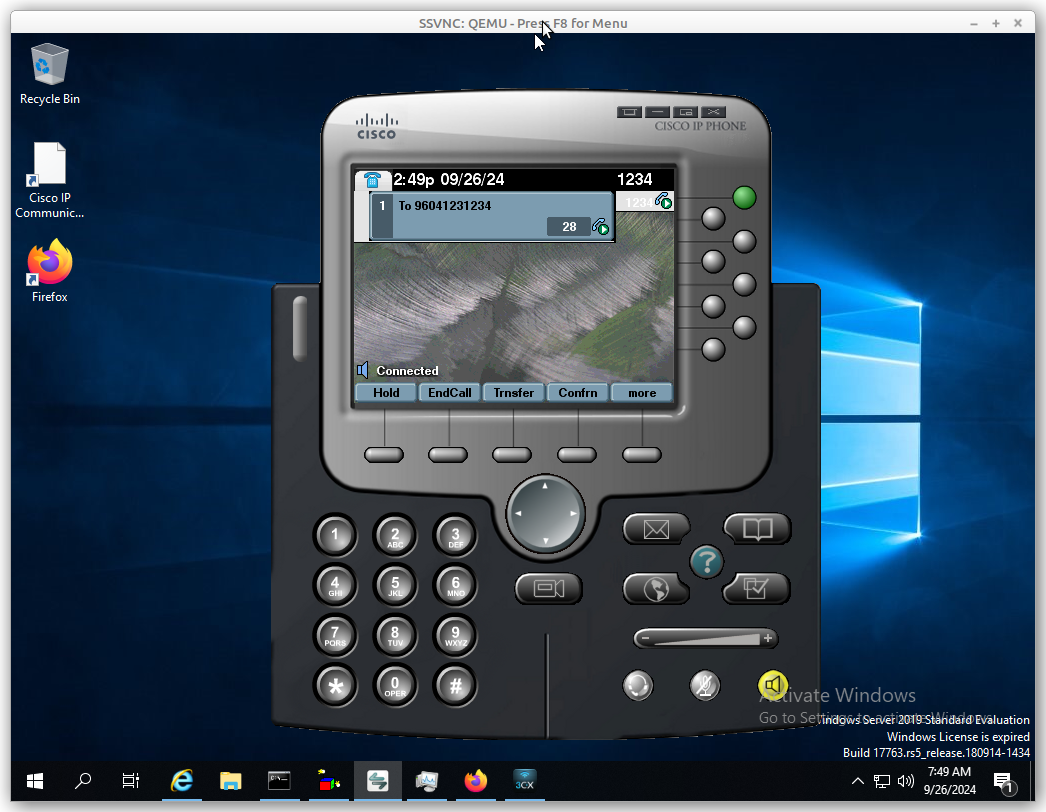

FreePBX 17 How To Add Phones / Extensions and Register

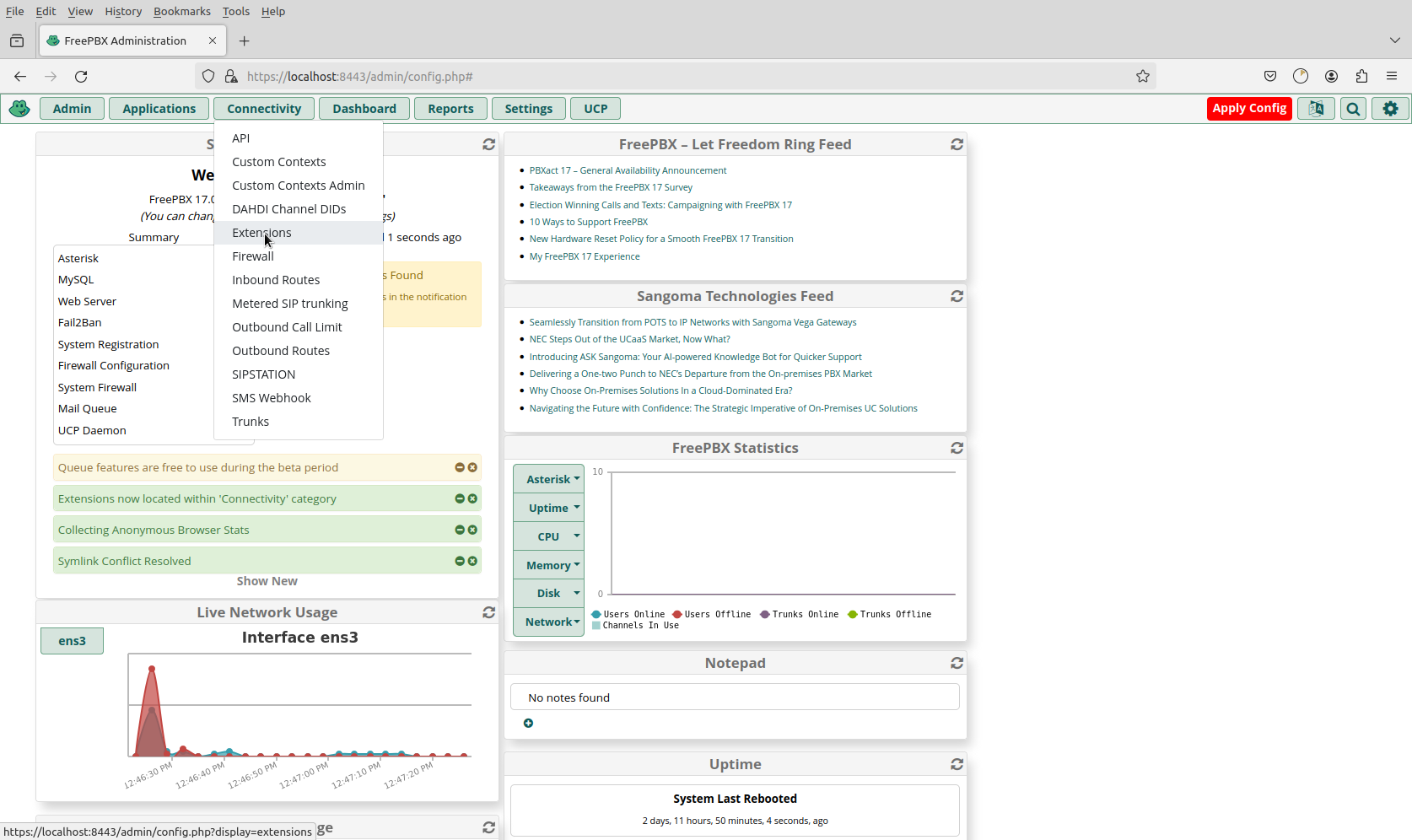

Step 1 - Login to FreePBX and Go to Connectivity

Click Connectivity -> Extensions

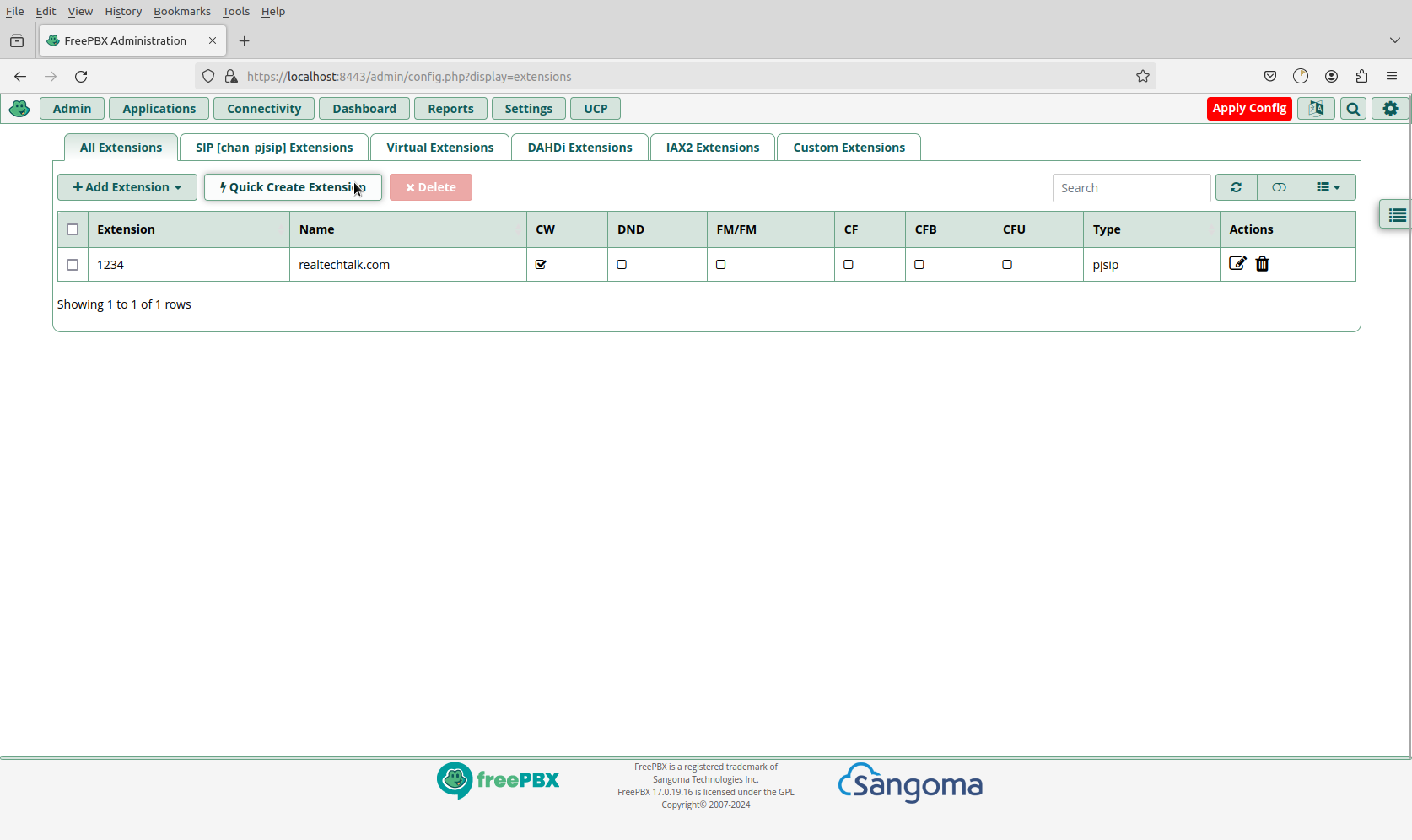

Step 2 - Create Extension

Click on "Quick Create Extension" or "Add Extension".

Quick is fine for most users, unless you have more specific requirements.

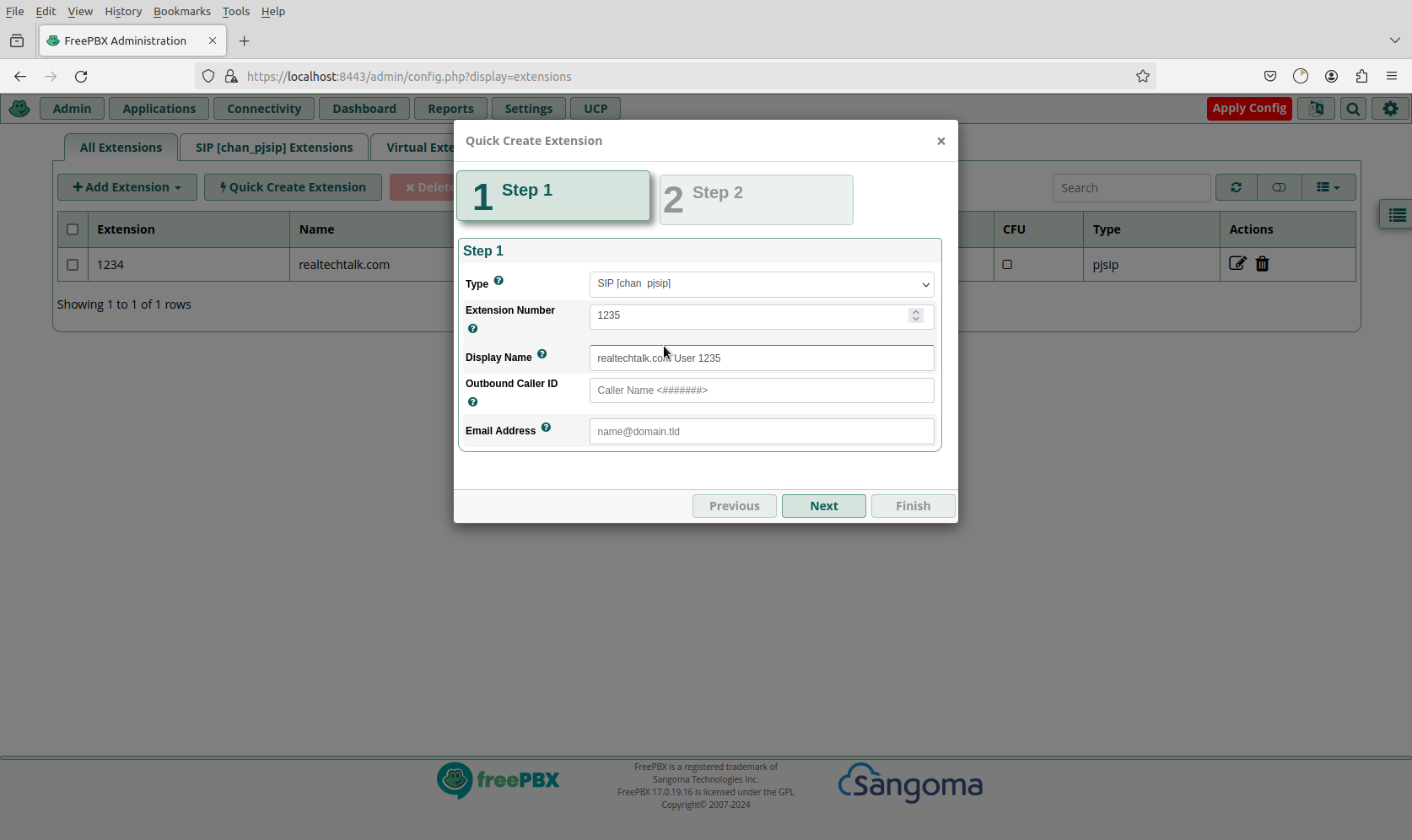

Step 3 - Create the Extension

Once you click finish, the details are then e-mailed to the user.

If you use Quick Create, you can just edit the extension and click on their "secret" field to find the password.

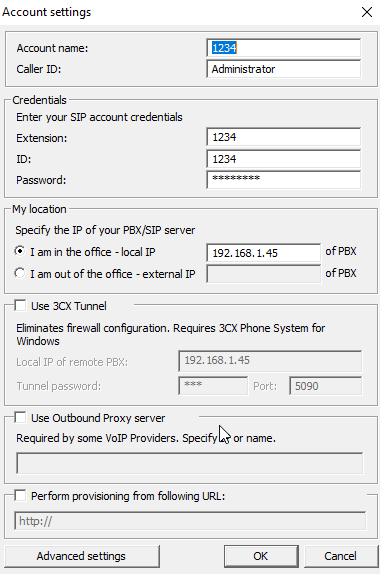

To make your SIP softphone or physical phone register, use the following info:

SIP Server: Your IP/Domain of FreePBX

Username: Extension# (eg 1234)

Password: the secret

3CX Example

Warning: The driver descriptor says the physical block size is 2048 bytes, but Linux says it is 512 bytes. solution

Disk /dev/sdb: 15.22 GiB, 16336814080 bytes, 31907840 sectors

Disk model: SD/MMC

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x00000000

The fdisk output is above and below is the error you may get when trying to use the drive or even format it.

Warning: The driver descriptor says the physical block size is 2048 bytes, but Linux says it is 512 bytes.

wipefs is a useful tool to show us what filesystems exist on the drive.

root@mint:/home/mint# wipefs /dev/sdb

DEVICE OFFSET TYPE UUID LABEL

sdb 0x52 vfat 53E2-5BC6

sdb 0x0 vfat 53E2-5BC6

sdb 0x1fe vfat 53E2-5BC6

Let's wipe out all filesystem signatures to fix the error.

This makes the existing data on the drive inaccessible, so make sure you have backed up anything you need.

Replace sdX below with the actual drive you want to wipe.

wipefs --all /dev/sdX

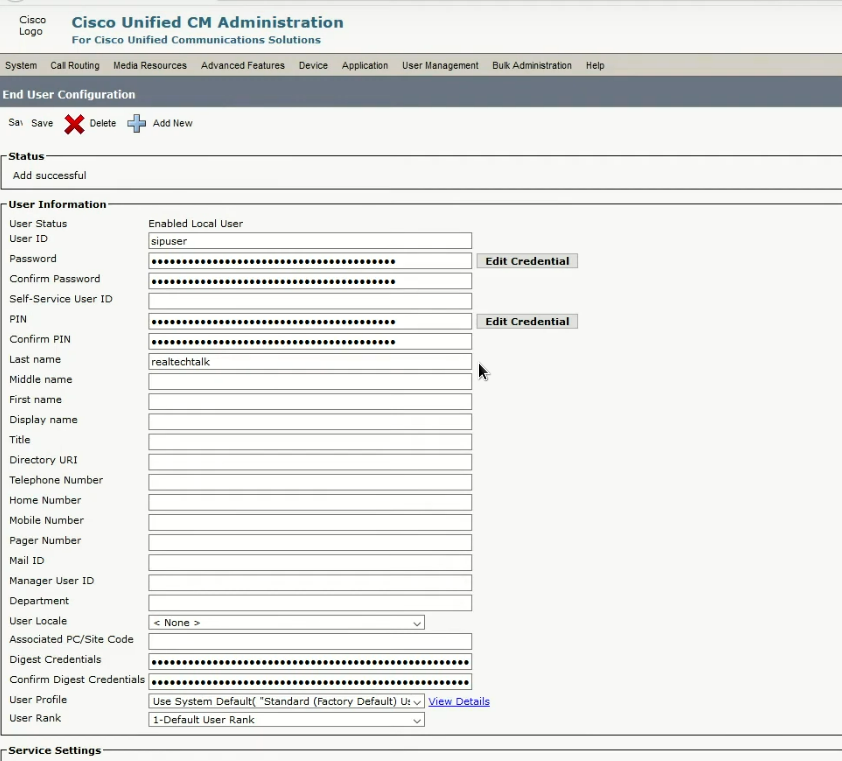

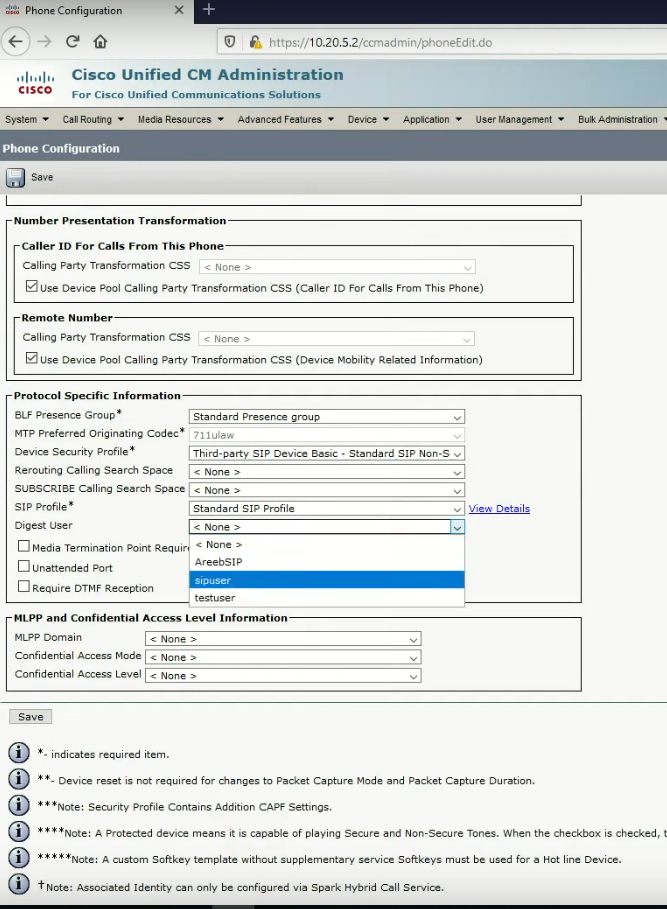

Cisco How To Use a Third Party SIP Phone (eg. Avaya, 3CX)

Most relevant config points from my video here.

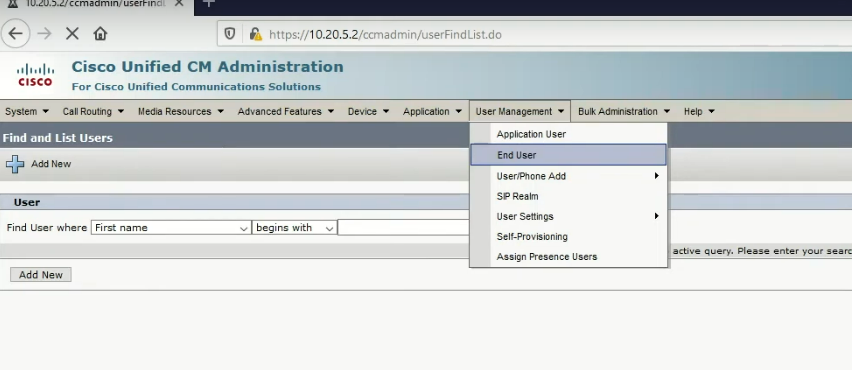

1.) Create a new End User

The most important part is setting the "Digest Credentials". This is the password that the phone will use to authenticate.

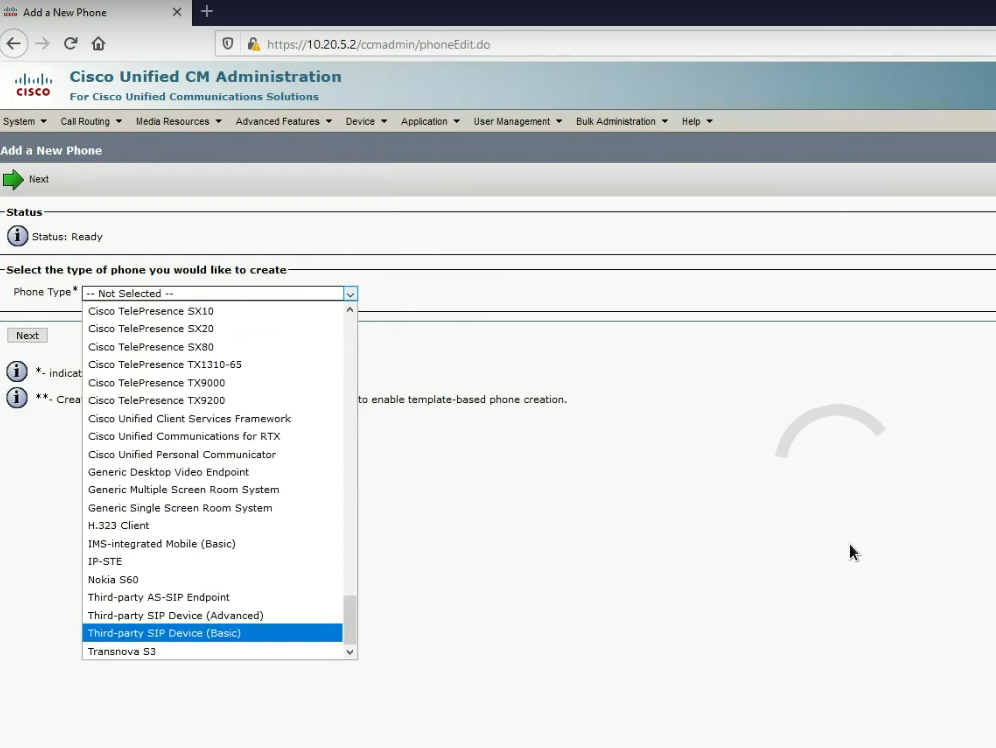

2.) Add a New Phone

Device -> Phone

3.) Configure the Phone

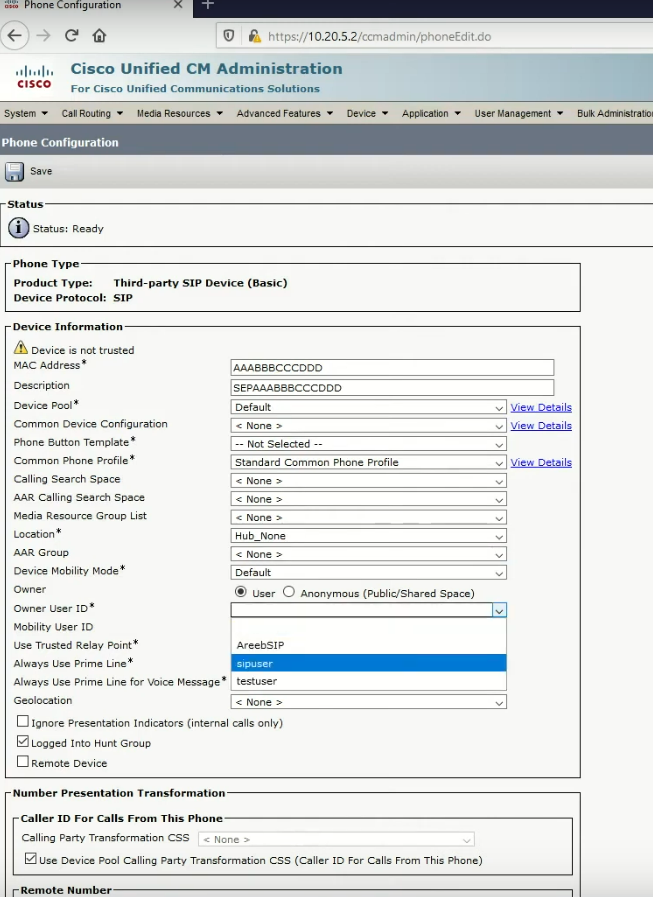

Set a fake MAC address in the right format (12 hex characters with no separators), eg. AAABBBCCCDDD.

Remember to assign the phone to the user you created earlier.

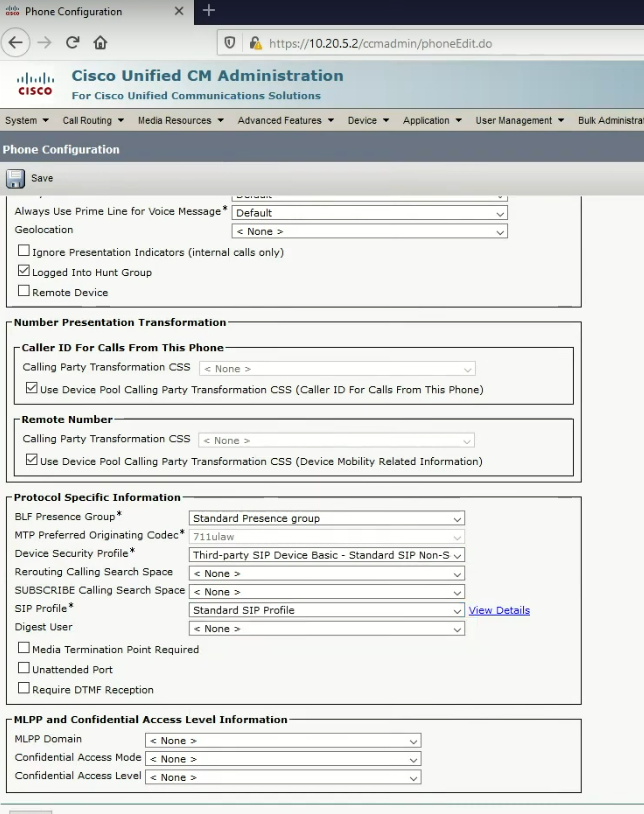

Set the "Third-party SIP Device Basic - Standard SIP" security profile as below.

Scroll down and set the Digest User to the user we created earlier.

Remember to create a DN (directory number). Your phone will then authenticate with the DN as the username and the Digest Credentials of the "Digest User" as the password.

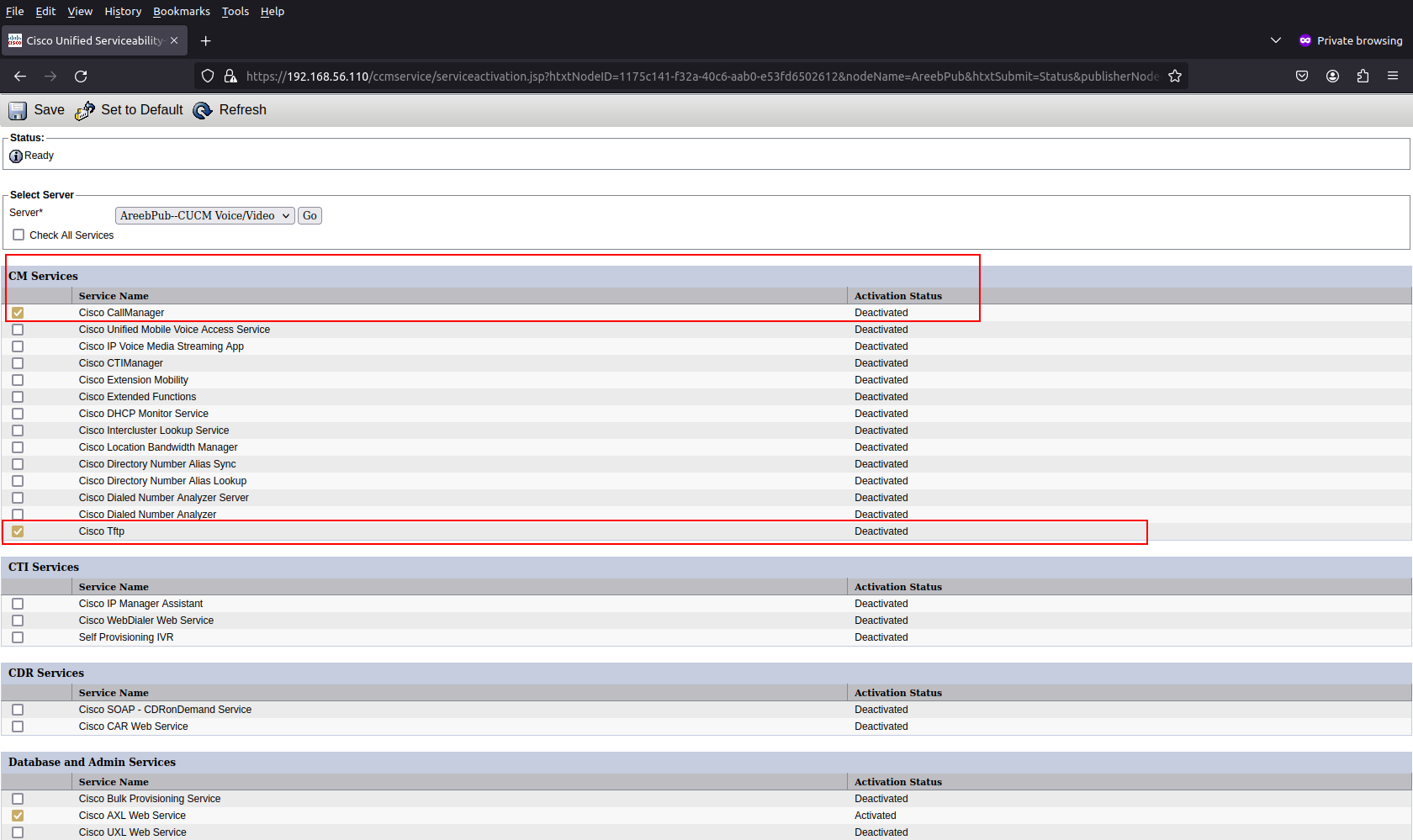

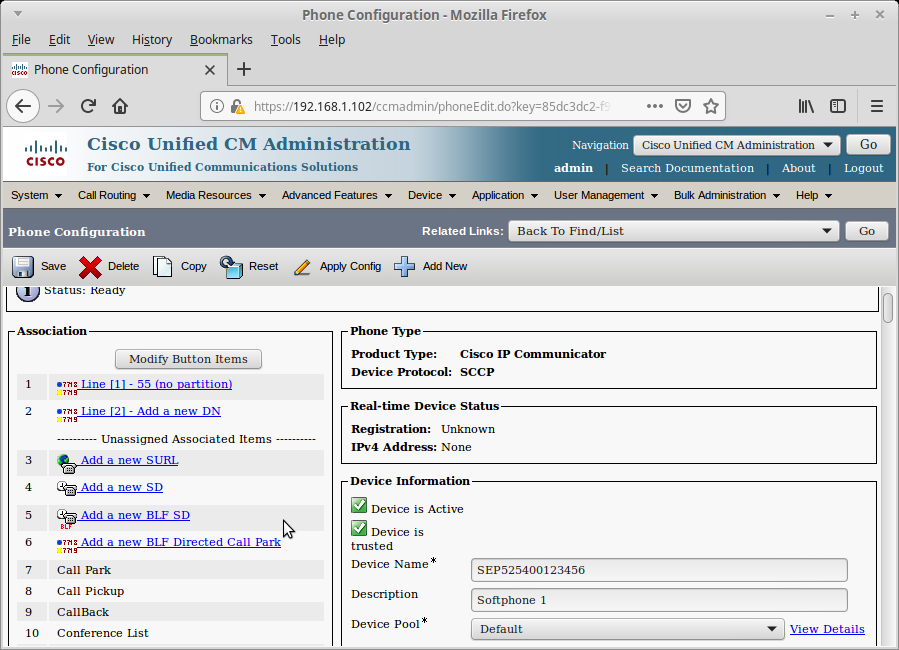

Cisco Unified Communication Manager (CUCM) - How To Add Phones

Before starting, you should reset your phone(s) if they have previously been registered to another CME/CUCM.

Also before starting, go to the "Cisco Unified Serviceability" drop-down on the top-right.

Under Tools, go to Service Activation.

Enable "CallManager" and "tftp" so that phones can register.

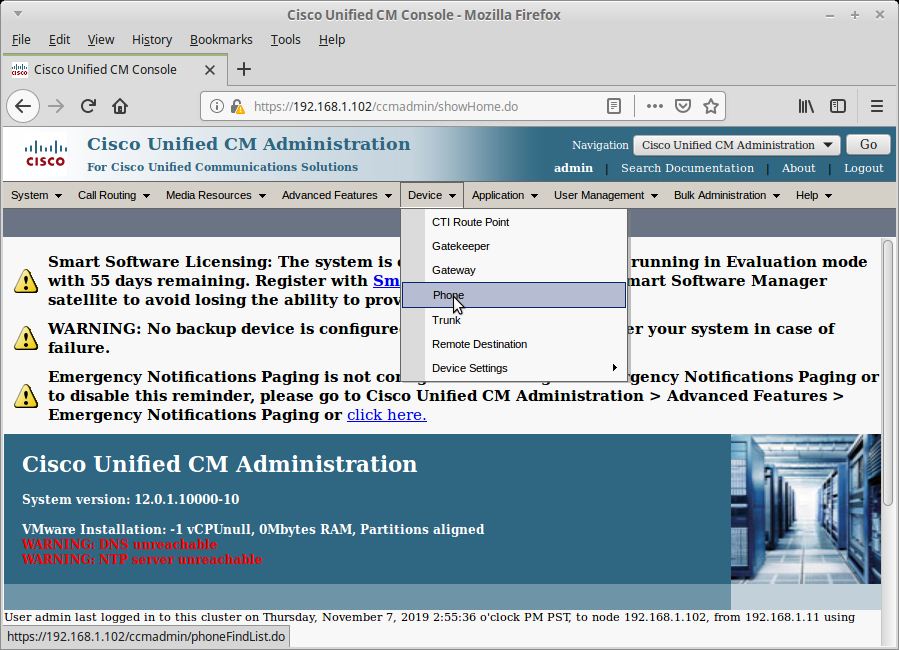

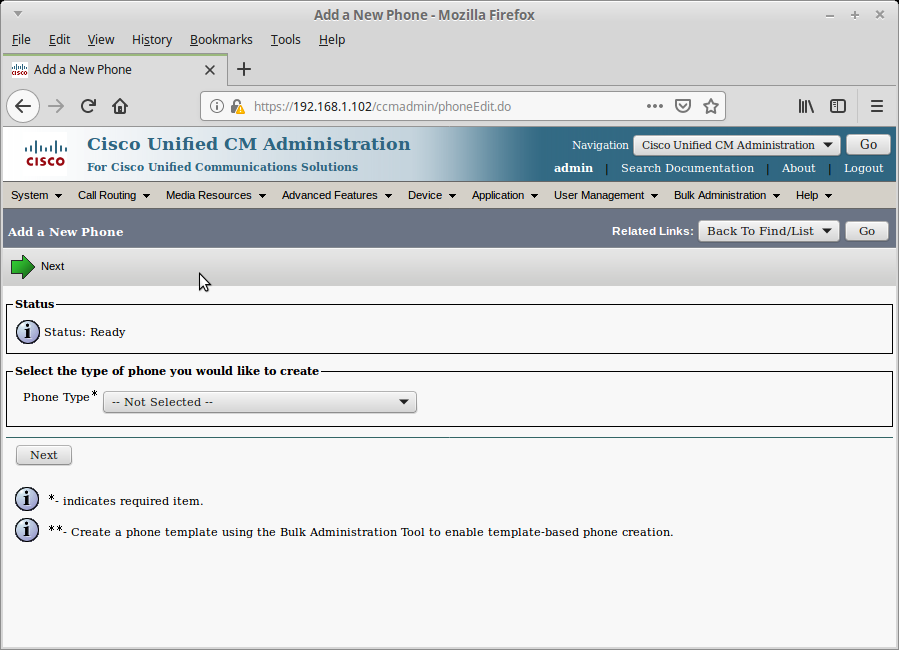

Step 1 - Go to the Phone Menu

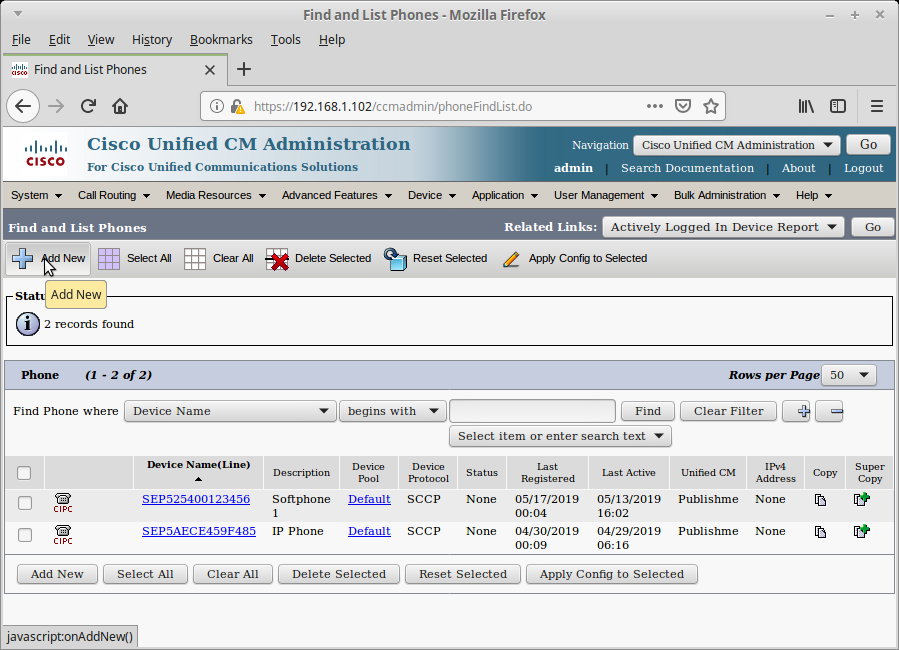

Step 2 - Add New Phone

Step 3 - Choose Phone Type

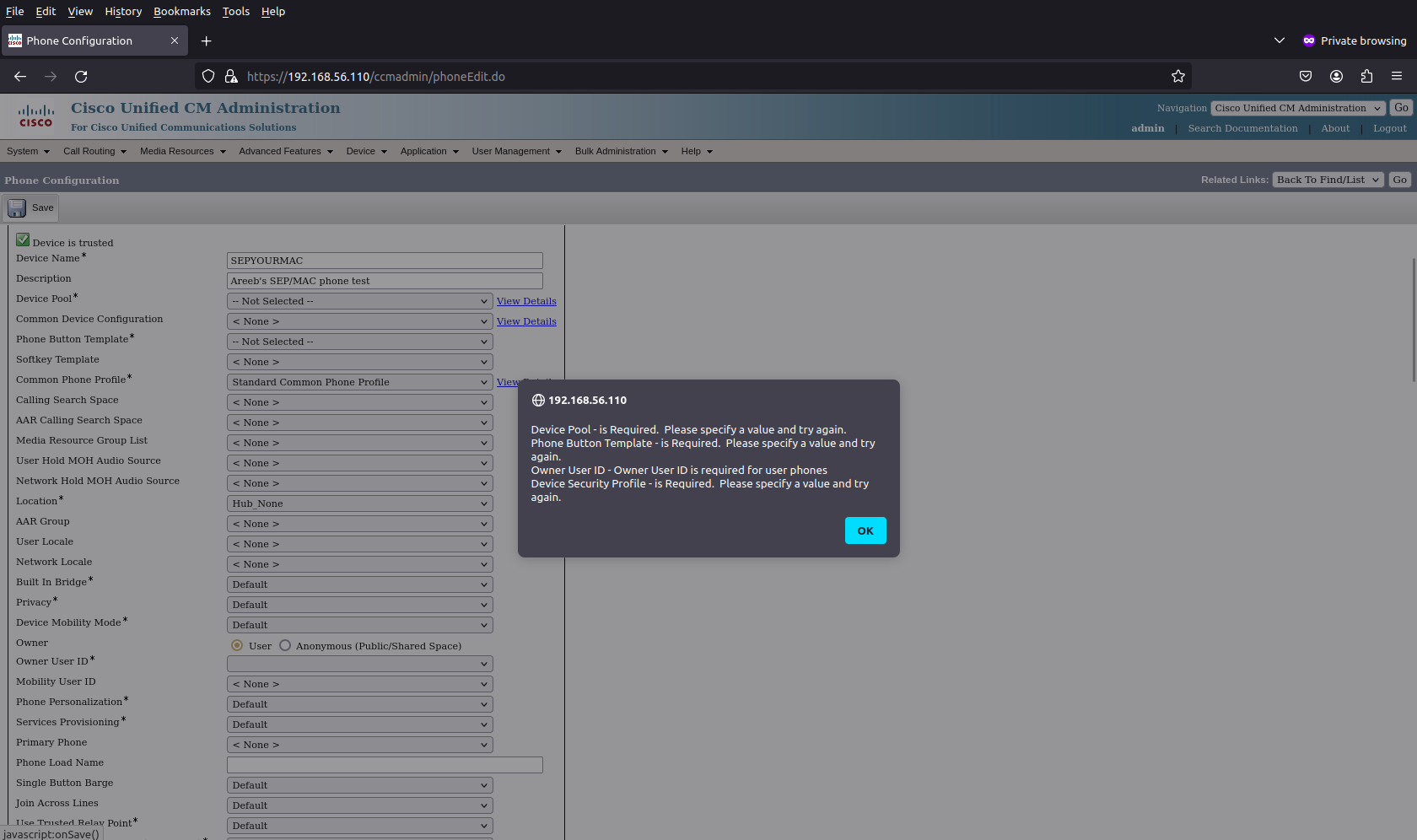

Step 4 - Set Phone MAC

The device name format is SEP followed by the MAC address (SEPMACADDRESS).
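If you are adding several phones, typing the device names by hand is error-prone. The sketch below shows one way to turn a MAC address into the SEP name. mac_to_sep is a hypothetical helper name (not a Cisco tool), and it assumes the name is simply SEP plus the 12 hex digits in upper case.

```shell
#!/usr/bin/env bash
# mac_to_sep is a hypothetical helper name, not a Cisco tool.
# It turns a MAC written with ":", "-" or "." separators into SEP + 12 hex digits.
mac_to_sep() {
    local hex
    # Strip the common separators and convert to upper case.
    hex="$(printf '%s' "$1" | tr -d ':.-' | tr '[:lower:]' '[:upper:]')"
    # A MAC address is exactly 12 hex digits once the separators are gone.
    if ! printf '%s' "$hex" | grep -Eq '^[0-9A-F]{12}$'; then
        echo "error: '$1' is not a valid MAC address" >&2
        return 1
    fi
    printf 'SEP%s\n' "$hex"
}
```

For example, mac_to_sep 00:1a:2b:3c:4d:5e prints SEP001A2B3C4D5E, and an incomplete address is rejected with an error.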

As we can see, the minimum requirements are that we specify the following:

Device Name:

Device Pool:

Owner User ID:

Device Security Profile:

Step 5 - Add New DN (top left)

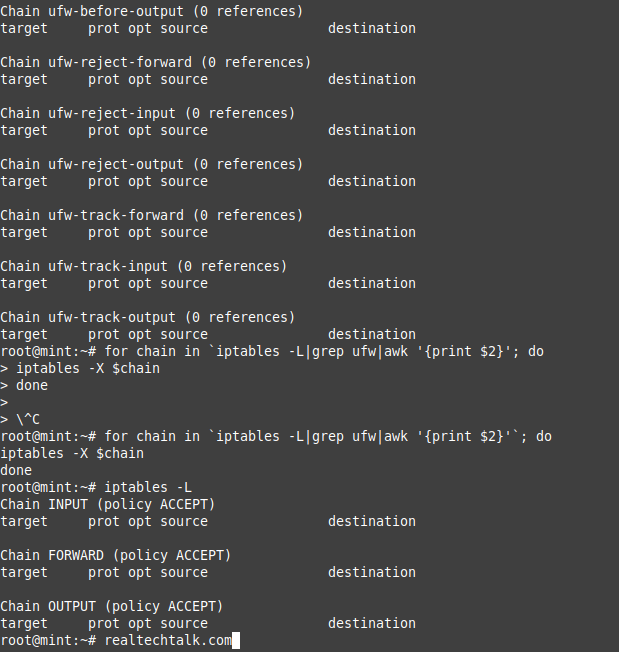

pptp / pptpd not working in DD-WRT iptables / router

Although it is well known that pptp is not secure and is subject to many forms of attack, the reality is that a lot of legacy and embedded devices still use it. I argue that pptp is still acceptable when it is used for routing or remote access, over an already secure connection (eg. another VPN like ikev2), in a LAN, or in a public environment where no private data is exchanged. However, if the data is extremely sensitive, you should do whatever it takes to add a second layer of encryption by using a secure VPN protocol.

You can find many threads and discussions about how to make pptp work with iptables, with crazy forwarding rules and blindly, manually allowing all GRE etc... However, in my experience it is much simpler: you just need to enable the netfilter conntrack helper so that your pptp client can connect.

This solution also applies to a node running Kubernetes and Docker containers (eg. an embedded device that is for some odd reason using pptp). If you can, switch to ikev2 or OpenVPN.

sysctl -w net.netfilter.nf_conntrack_helper=1

For permanent changes add this to /etc/sysctl.conf:

net.netfilter.nf_conntrack_helper=1
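If you script this, it is easy to end up with the same line appended to /etc/sysctl.conf many times. Here is a minimal sketch of an idempotent version. persist_sysctl and helper_sysctl_available are hypothetical helper names, and the existence check is there because the sysctl only appears once nf_conntrack is loaded and is not guaranteed to exist on every kernel.

```shell
#!/usr/bin/env bash
# persist_sysctl is a hypothetical helper: it appends a "key=value" line to a
# config file only if that exact line is not already present.
persist_sysctl() {
    local line="$1" file="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# helper_sysctl_available is also hypothetical. The sysctl only shows up once
# the nf_conntrack module is loaded, and some kernels may not provide it at
# all, so check before relying on it.
helper_sysctl_available() {
    [ -e /proc/sys/net/netfilter/nf_conntrack_helper ]
}
```

As root you could then run persist_sysctl 'net.netfilter.nf_conntrack_helper=1' /etc/sysctl.conf followed by sysctl -p to load the file. Running it twice leaves only one copy of the line.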

Other modules are necessary to make pptp work, but as the lsmod output below shows, any recent kernel will load them on its own:

nf_nat_pptp 20480 0

nf_conntrack_pptp 24576 1 nf_nat_pptp

nf_nat 45056 3 nf_nat_pptp,iptable_nat,xt_MASQUERADE

nf_conntrack 139264 6 xt_conntrack,nf_nat,nf_conntrack_pptp,nf_nat_pptp,nf_conntrack_netlink,xt_MASQUERADE

systemd-journald high memory usage solution

Sometimes systemd-journald can take several hundred megs of RAM or more which is bad for microservices and embedded devices.

Edit /etc/systemd/journald.conf

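You can cap the journal at 5M like below. Note that SystemMaxUse limits the persistent journal on disk (/var/log/journal), while the journal kept in RAM under /run/log/journal is limited by the matching RuntimeMaxUse setting: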

SystemMaxUse=5M
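Instead of editing journald.conf directly, the same limit can go into a drop-in file. This is a sketch under a few assumptions: write_journald_limit and the file name size-limit.conf are hypothetical, and RuntimeMaxUse is added on top of the original setting so the in-memory journal is capped as well.

```shell
#!/usr/bin/env bash
# write_journald_limit is a hypothetical helper. It writes a drop-in file
# rather than editing /etc/systemd/journald.conf itself.
# $1 = size limit (eg. 5M), $2 = drop-in directory (defaults to the system one).
write_journald_limit() {
    local size="$1" dir="${2:-/etc/systemd/journald.conf.d}"
    mkdir -p "$dir"
    # Options must sit under the [Journal] section header.
    # SystemMaxUse caps /var/log/journal, RuntimeMaxUse caps /run/log/journal.
    printf '[Journal]\nSystemMaxUse=%s\nRuntimeMaxUse=%s\n' "$size" "$size" \
        > "$dir/size-limit.conf"
}
```

As root, write_journald_limit 5M followed by systemctl restart systemd-journald applies the limit, and journalctl --disk-usage shows how much space the journal is using afterwards.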

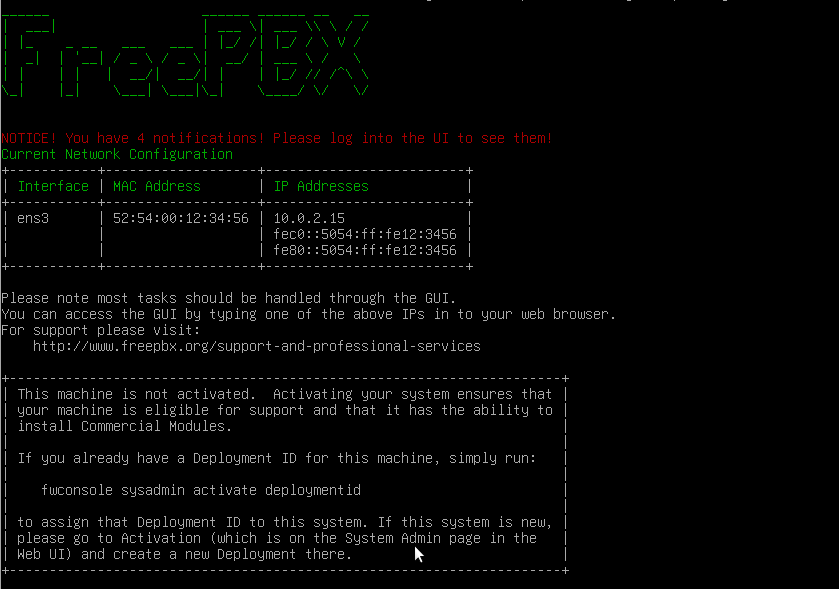

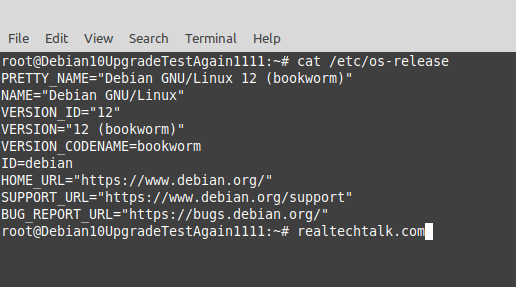

How to Install FreePBX 17 in Linux Debian Ubuntu Mint Guide

FreePBX official install guide is here.

Requirements:

- Debian 12 Download Link

- Minimal - System Utilities

- RAM: 4G

- HDD: 20G
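Because the wrong base OS only fails at the very end of a long install, a quick check up front can save time. The sketch below reads /etc/os-release. is_debian12 is a hypothetical helper name, and it assumes Debian 12 reports ID=debian and VERSION_ID="12" there.

```shell
#!/usr/bin/env bash
# is_debian12 is a hypothetical helper. It succeeds only if the os-release
# file describes Debian 12. $1 = file to read (defaults to /etc/os-release).
is_debian12() {
    local file="${1:-/etc/os-release}"
    # os-release is a list of KEY=value lines; the value may or may not be quoted.
    grep -qx 'ID=debian' "$file" && grep -Eqx 'VERSION_ID="?12"?' "$file"
}
```

A possible use before downloading the installer: is_debian12 || echo "This is not Debian 12, the FreePBX 17 install will likely fail".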

Note that if you don't have the required base OS, you will get an error like this at the end of the install:

2024-10-23 00:30:22 - Upgrading FreePBX 17 modules

2024-10-23 00:30:22 - Installation failed at step Upgrading FreePBX 17 modules. Please check log /var/log/pbx/freepbx17-install-2024.11.05-00.14.36.log for details.

2024-10-23 00:30:22 - Error at line: 1135 exiting with code 255 (last command was: fwconsole ma upgradeall >> $log)

2024-10-23 00:30:22 - Exiting script

#log

In modulefunctions.class.php line 2814:

preg_replace(): Passing null to parameter #3 ($subject) of type array|string is deprecated

moduleadmin [-f|--force] [-d|--debug] [--edge] [--ignorecache] [--stable] [--color] [--skipchown] [-e|--autoenable] [--skipdisabled] [--snapshot SNAPSHOT] [--format FORMAT] [-R|--repo REPO] [-t|--tag TAG] [--skipbreakingcheck] [--sendemail] [--onlystdout] [--] [

2024-10-23 00:30:22 - ****** INSTALLATION FAILED *****

2024-10-23 00:30:22 - Installation failed at step Upgrading FreePBX 17 modules. Please check log /var/log/pbx/freepbx17-install-2024.11.05-00.14.36.log for details.

2024-10-23 00:30:22 - Error at line: 1135 exiting with code 255 (last command was: fwconsole ma upgradeall >> $log)

2024-10-23 00:30:22 - Exiting script

Step 1 - Get and execute the official install script from Sangoma (the company behind FreePBX and Asterisk):

wget https://github.com/FreePBX/sng_freepbx_debian_install/raw/master/sng_freepbx_debian_install.sh

bash sng_freepbx_debian_install.sh

The install took about 20 minutes for me on a single core Xeon with an SSD.

If successful it should look like this at the end:

(FreePBX ASCII art banner)

NOTICE! You have 4 notifications! Please log into the UI to see them!

Current Network Configuration

+-----------+-------------------+---------------------------+

| Interface | MAC Address | IP Addresses |

+-----------+-------------------+---------------------------+

| ens3 | | |

| | | fe80::dcad:beff:feef:6e79 |

+-----------+-------------------+---------------------------+

Please note most tasks should be handled through the GUI.

You can access the GUI by typing one of the above IPs in to your web browser.

For support please visit:

http://www.freepbx.org/support-and-professional-services

+---------------------------------------------------------------------+

| This machine is not activated. Activating your system ensures that |

| your machine is eligible for support and that it has the ability to |

| install Commercial Modules. |

| |

| If you already have a Deployment ID for this machine, simply run: |

| |

| fwconsole sysadmin activate deploymentid |

| |

| to assign that Deployment ID to this system. If this system is new, |

| please go to Activation (which is on the System Admin page in the |

| Web UI) and create a new Deployment there. |

+---------------------------------------------------------------------+

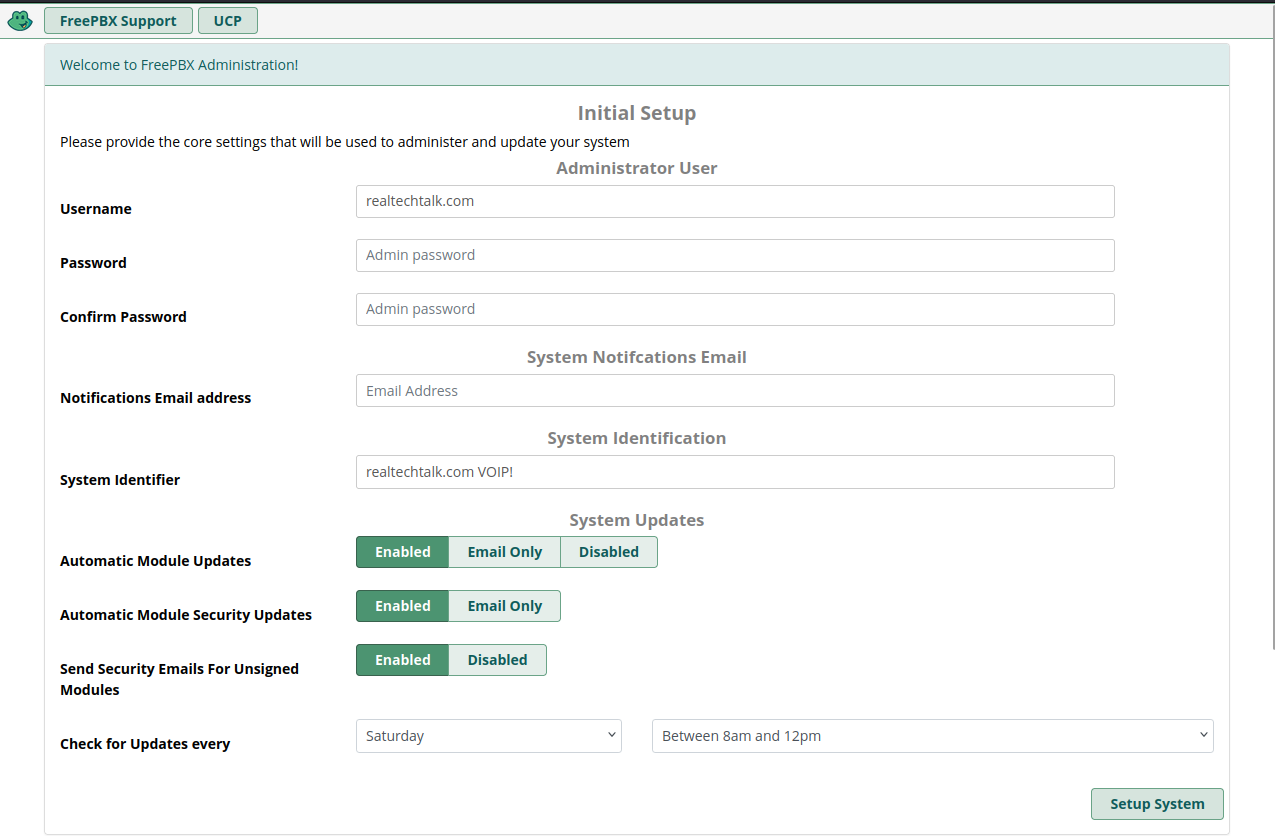

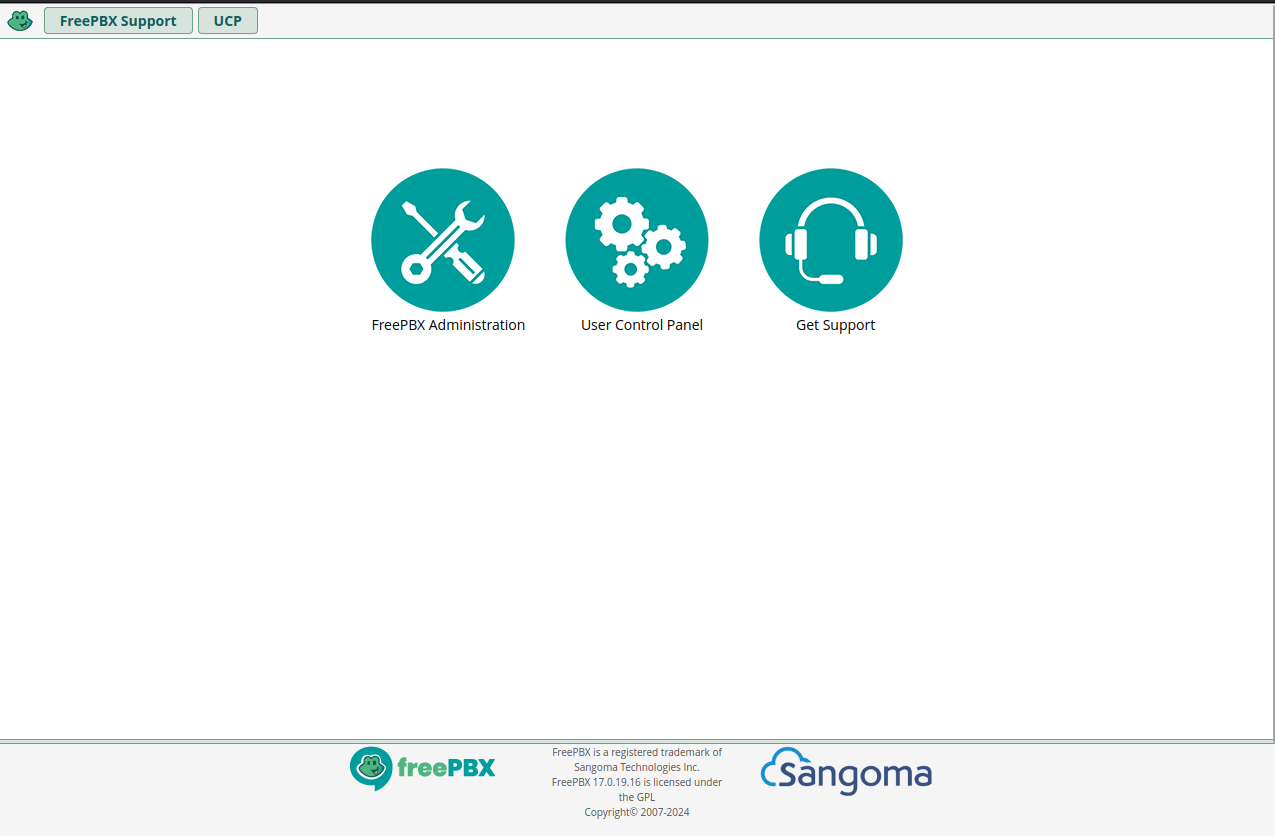

Step 2 - Set Up and Log In to the GUI

Then enter the Administration Section

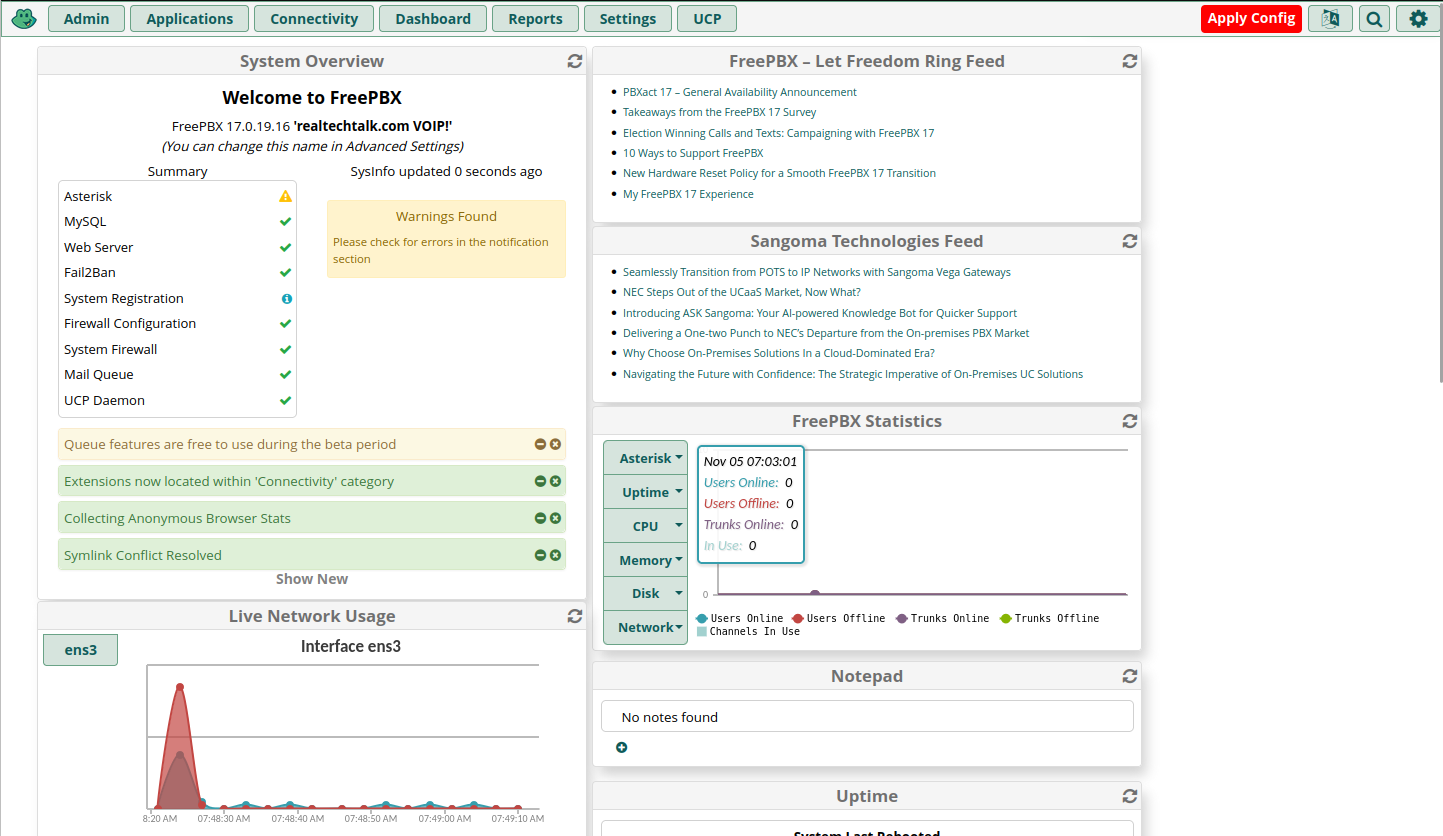

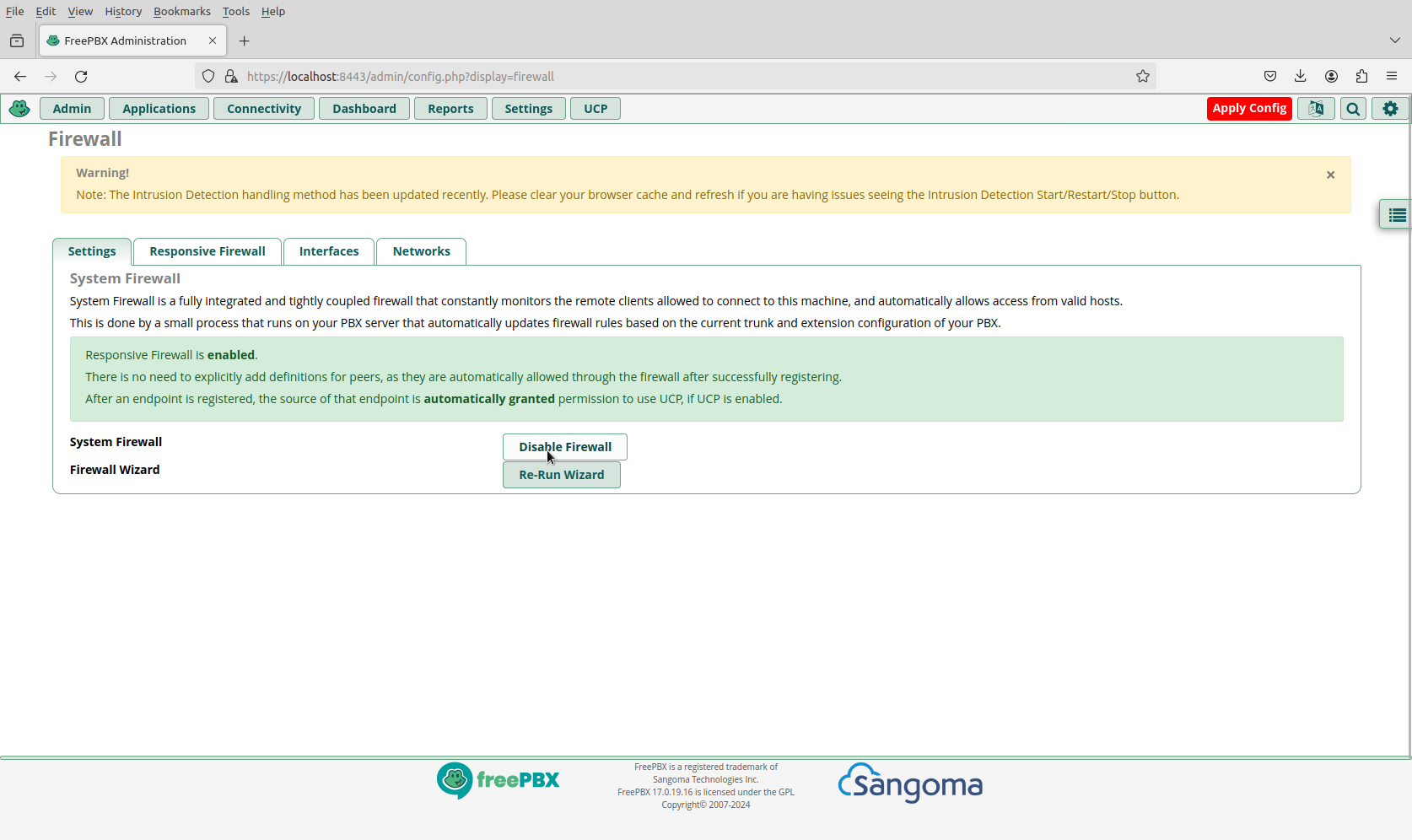

Accept all of the default Firewall settings and then you'll be on the Admin home page.

Firewall Issues

CLI Firewall Commands:

fwconsole firewall help

Valid Commands:

disable : Disable the System Firewall. This will shut it down cleanly.

stop : Stop the System Firewall

start : Start (and enable, if disabled) the System Firewall

restart : Restart the System Firewall

lerules [enable] or [disable] : Enable or disable Lets Encrypt rules.

trust : Add the hostname or IP specified to the Trusted Zone

untrust : Remove the hostname or IP specified from the Trusted Zone

list [zone] : List all entries in zone 'zone'

add [zone] [id id id..] : Add to 'zone' the IDs provided.

del [zone] [id id id..] : Delete from 'zone' the IDs provided.

listzones : Show zones that can be used to add and del.

fix_custom_rules : Create the files for the custom rules if they don't exist and set the permissions and owners correctly.

sync : Synchronizes all selected zones of the firewall module with the intrusion detection whitelist.

f2bstatus or f2bs : Display ignored and banned IPs. (Only root user).

When adding or deleting from a zone, one or many IDs may be provided.

These may be IP addresses, hostnames, or networks.

Example:

fwconsole firewall add trusted 10.46.80.0/24 hostname.example.com 1.2.3.4

Note that the firewall should never be disabled in production, as large numbers of attackers target FreePBX and SIP servers. Disabling it can be acceptable for learning, but ideally the firewall should be configured to whitelist yourself or other trusted IPs rather than being completely disabled.

If you are locked out:

Change 192.168.1.0/24 to your subnet or IP

iptables -I INPUT -s 192.168.1.0/24 -j ACCEPT

systemctl stop fail2ban

This buys time before the firewall reactivates.
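The temporary fix above can be followed by adding your address to the Trusted zone, using the add syntax from the help output. The sketch below only prints the commands for review. unlock_plan is a hypothetical helper name, and its address check is deliberately loose (it does not validate octet ranges).

```shell
#!/usr/bin/env bash
# unlock_plan is a hypothetical helper: it only PRINTS the recovery commands
# for the address you pass in, so you can review them before running as root.
unlock_plan() {
    local src="$1"
    # Loose sanity check only: dotted IPv4 with an optional /prefix.
    if ! printf '%s' "$src" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}(/[0-9]{1,2})?$'; then
        echo "error: '$src' does not look like an IPv4 address or subnet" >&2
        return 1
    fi
    # Temporary: let the source in and stop fail2ban from banning it again.
    echo "iptables -I INPUT -s $src -j ACCEPT"
    echo "systemctl stop fail2ban"
    # Longer term: add the source to the Trusted zone (syntax from the help output).
    echo "fwconsole firewall add trusted $src"
}
```

For example, unlock_plan 192.168.1.0/24 prints the three commands for that subnet in the order they would be run.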

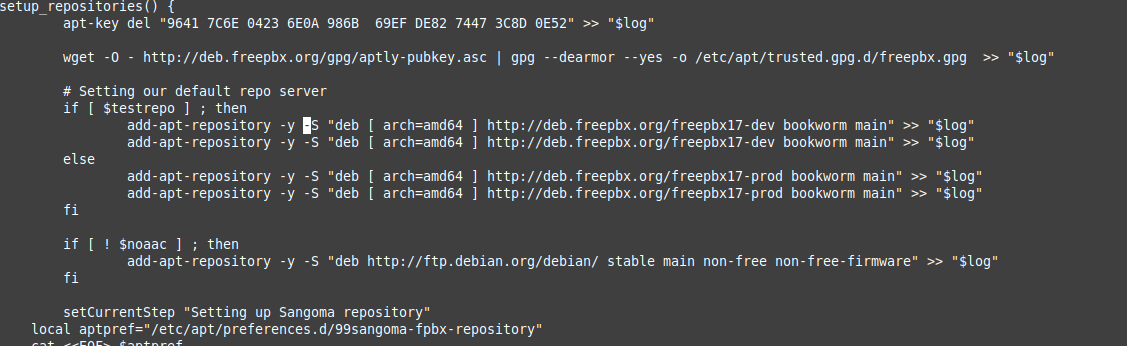

Bug in installer script:

This happens if you try the near impossible by using an older/different Debian.

The script as of 2024-10-11 has a bug: it passes -S to add-apt-repository when it must be a lowercase -s. This breaks everything, as you can see in the log:

Resolving deb.freepbx.org (deb.freepbx.org)... 52.217.40.28, 54.231.128.57, 52.217.9.44, ...

Connecting to deb.freepbx.org (deb.freepbx.org)|52.217.40.28|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3139 (3.1K) [binary/octet-stream]

Saving to: 'STDOUT'

0K ... 100% 19.4M=0s

2024-10-11 21:52:03 (19.4 MB/s) - written to stdout [3139/3139]

Usage: add-apt-repository

add-apt-repository is a script for adding apt sources.list entries.

It can be used to add any repository and also provides a shorthand

syntax for adding a Launchpad PPA (Personal Package Archive)

repository.

- The apt repository source line to add. This is one of:

a complete apt line in quotes,

a repo url and areas in quotes (areas defaults to 'main')

a PPA shortcut.

a distro component

Examples:

apt-add-repository 'deb http://myserver/path/to/repo stable myrepo'

apt-add-repository 'http://myserver/path/to/repo myrepo'

apt-add-repository 'https://packages.medibuntu.org free non-free'

apt-add-repository http://extras.ubuntu.com/ubuntu

apt-add-repository ppa:user/repository

apt-add-repository ppa:user/distro/repository

apt-add-repository multiverse

If --remove is given the tool will remove the given sourceline from your

sources.list

add-apt-repository: error: no such option: -S

This can be fixed by editing the bash installer file you downloaded and changing -S to -s
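The edit can also be done with sed rather than by hand. This is only a sketch: fix_apt_repo_flag is a hypothetical helper name, it assumes GNU sed (as shipped with Debian), and it assumes the flag appears as a standalone -S on the same line as add-apt-repository. Check the real line with grep -n add-apt-repository on the script first.

```shell
#!/usr/bin/env bash
# fix_apt_repo_flag is a hypothetical helper. $1 = path to the installer script.
fix_apt_repo_flag() {
    local script="$1"
    # Keep a copy of the original so the change can be reviewed or undone.
    cp -- "$script" "$script.orig"
    # Only touch lines that call add-apt-repository, and only swap a
    # standalone " -S" (followed by a space or end of line) for " -s".
    sed -E -i '/add-apt-repository/ s/ -S( |$)/ -s\1/' "$script"
}
```

After running fix_apt_repo_flag sng_freepbx_debian_install.sh, a diff between the .orig copy and the edited file shows exactly what changed.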

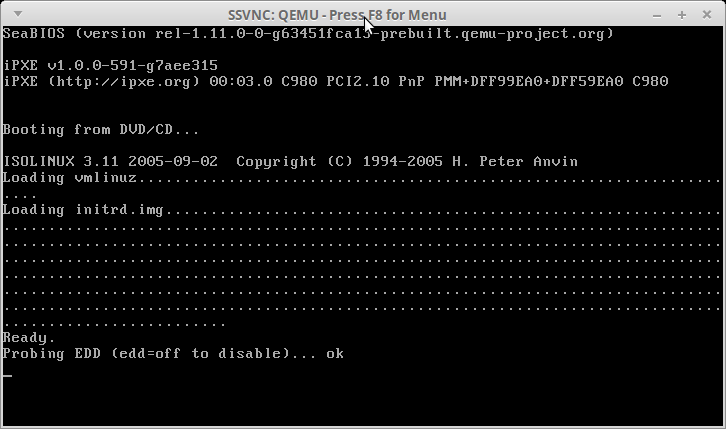

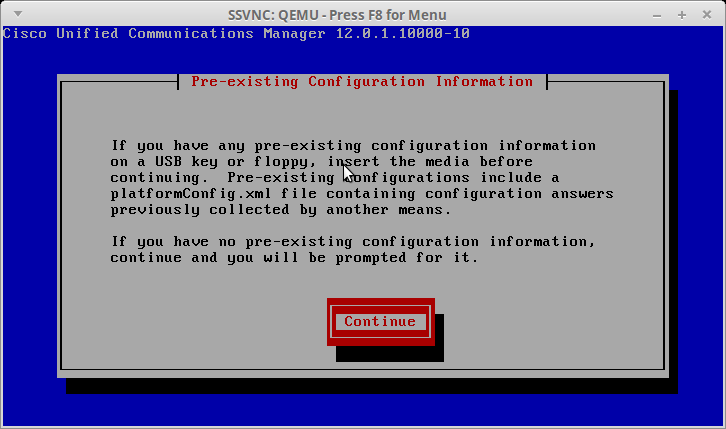

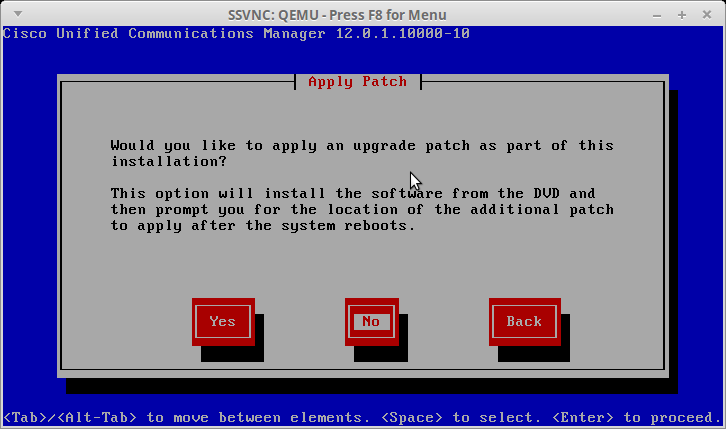

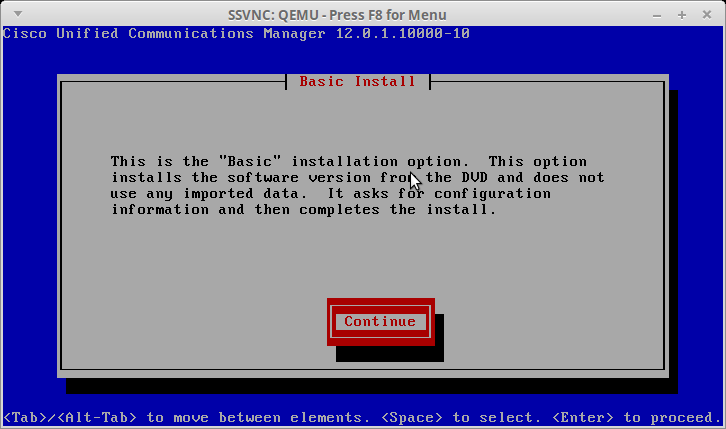

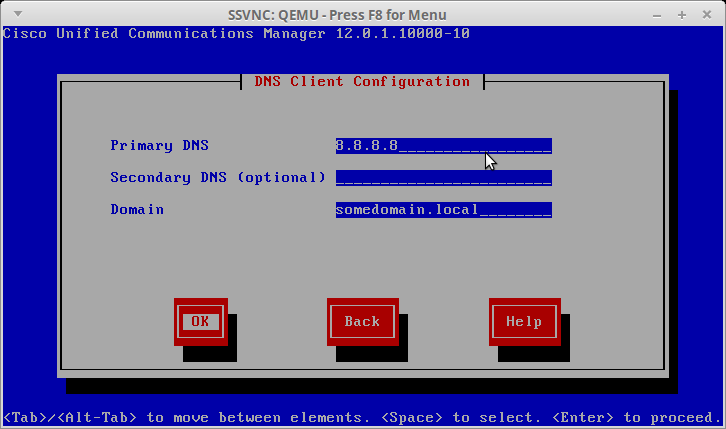

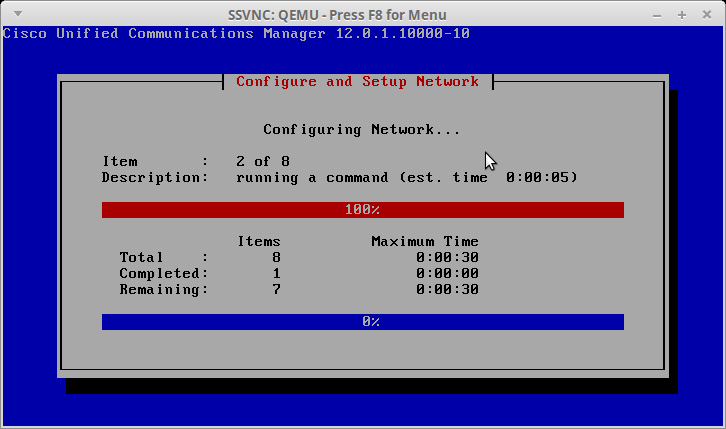

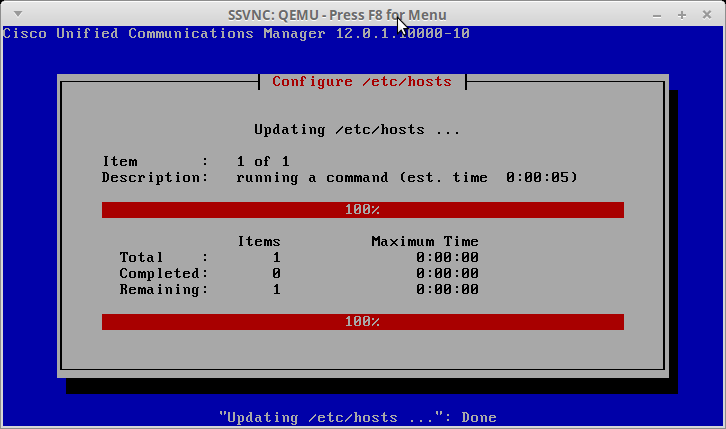

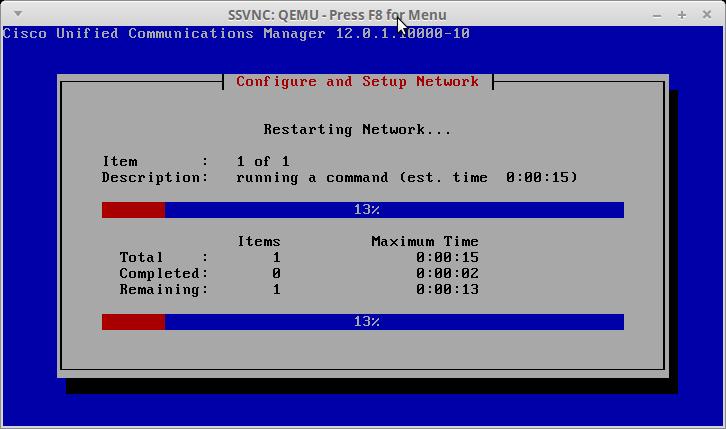

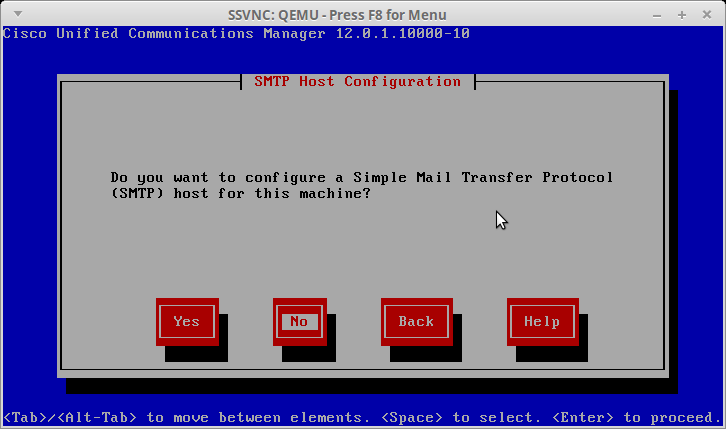

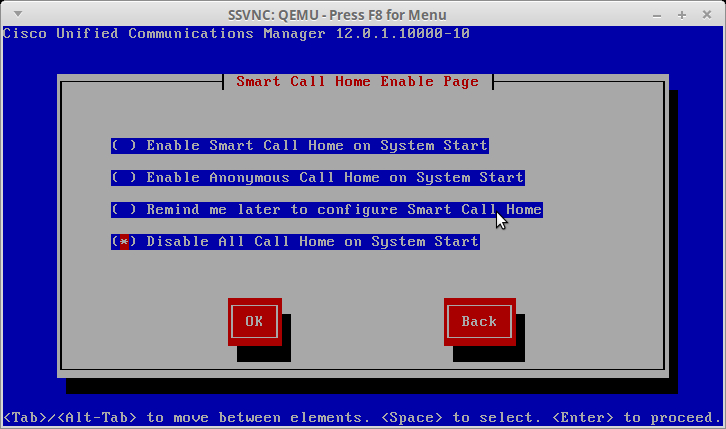

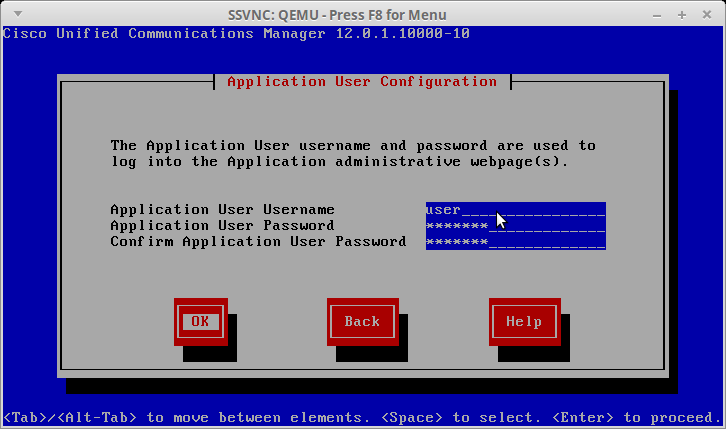

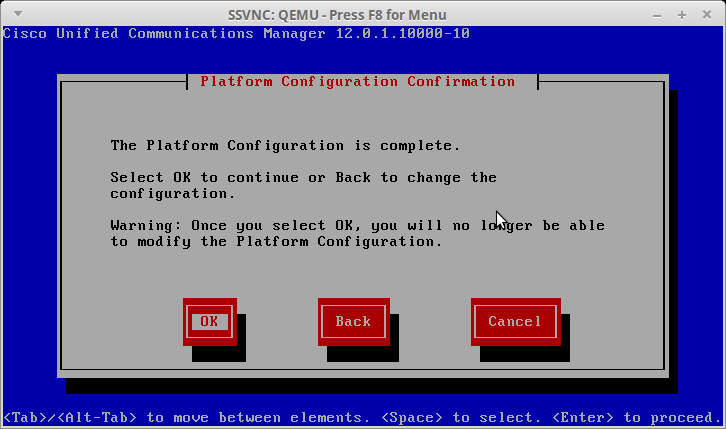

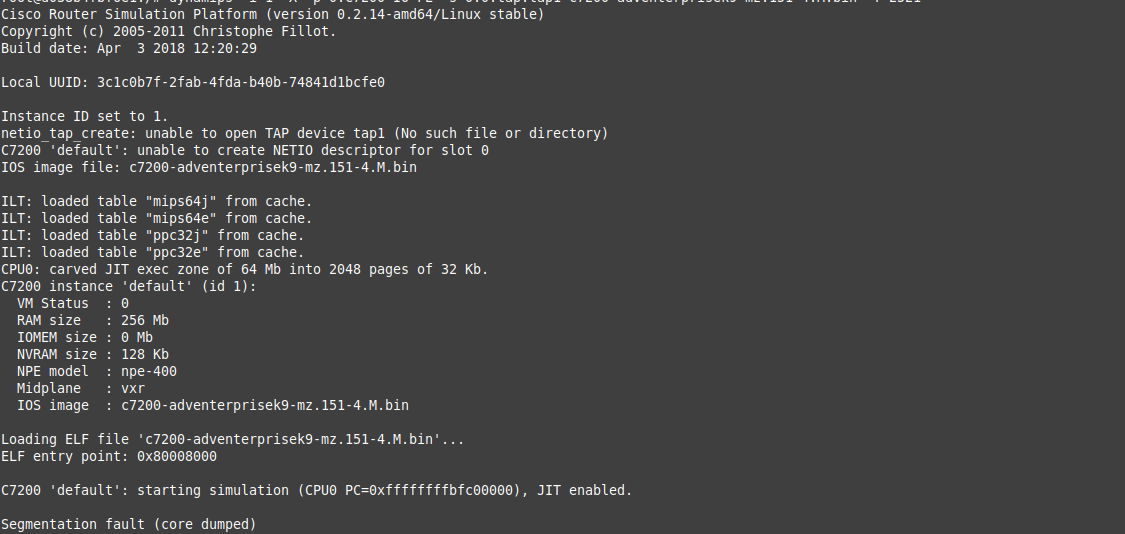

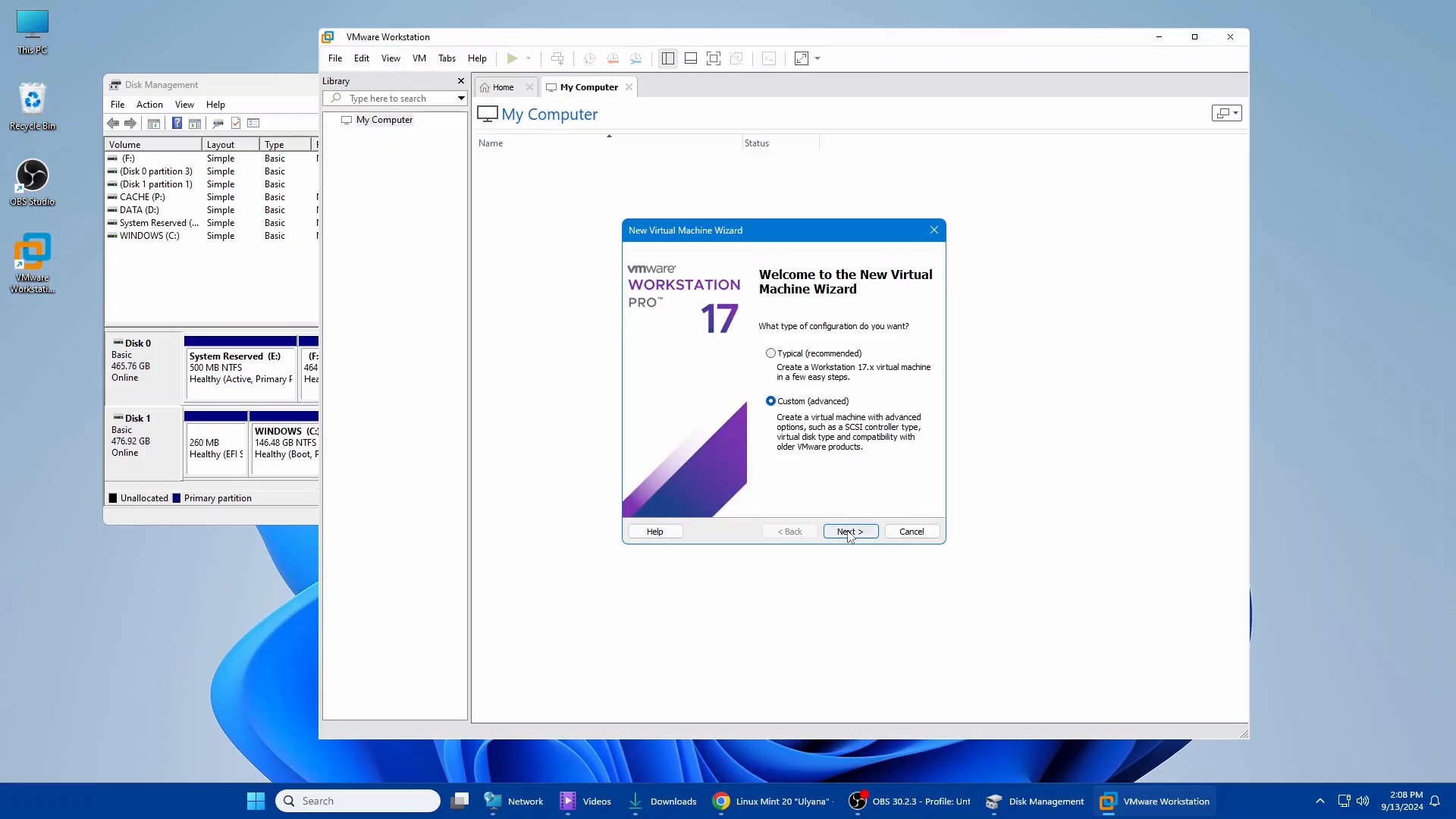

How To Install Cisco's CUCM (Cisco Unified Communication Manager) 12 Guide

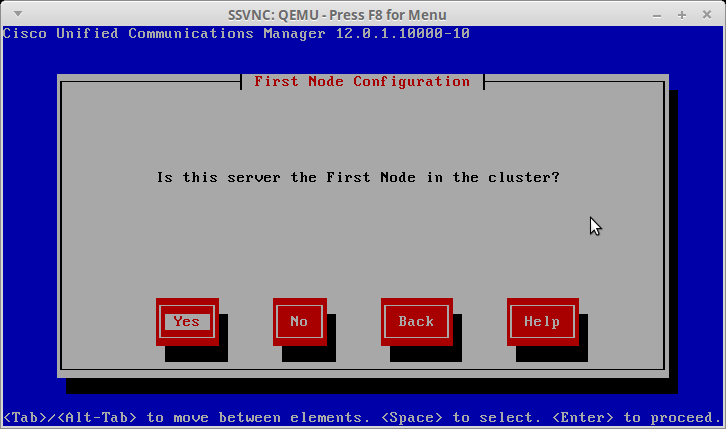

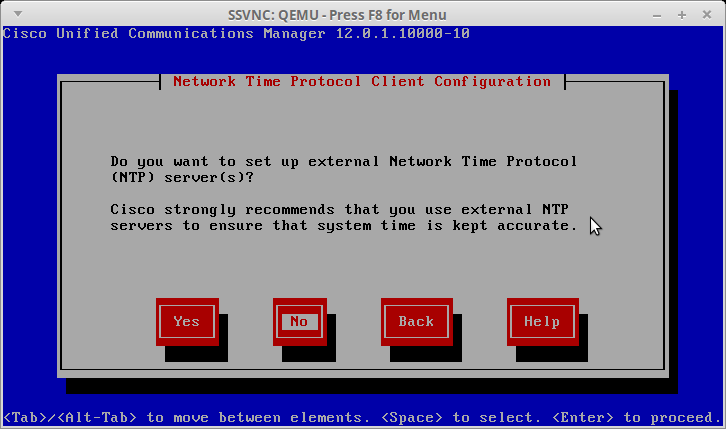

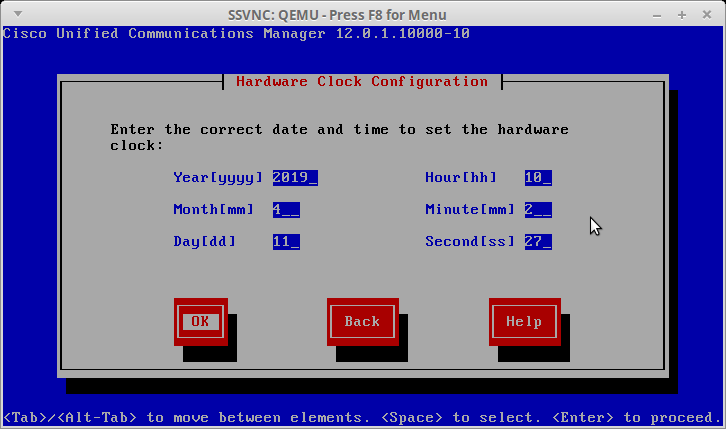

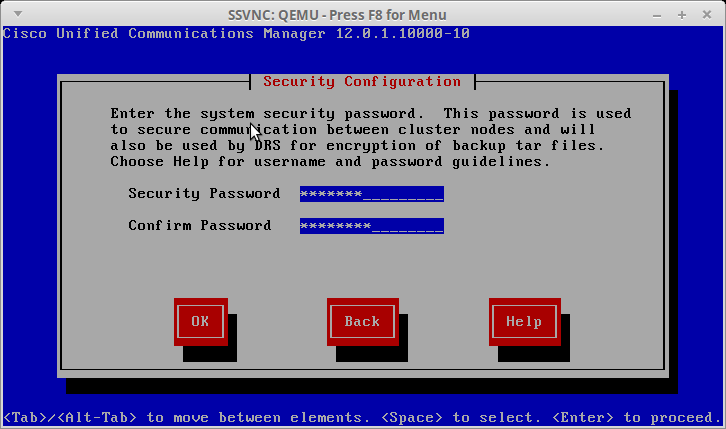

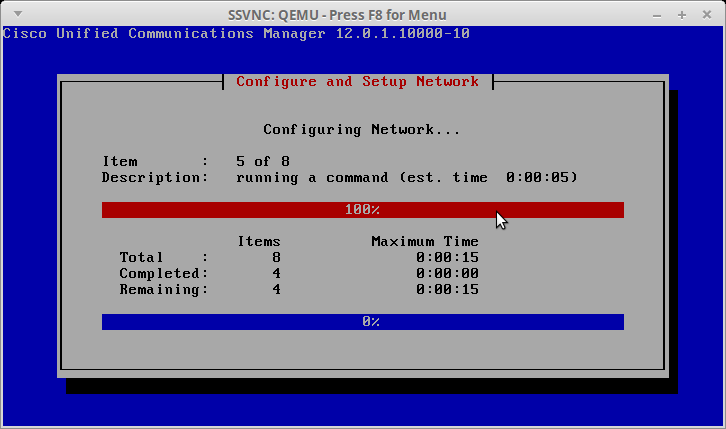

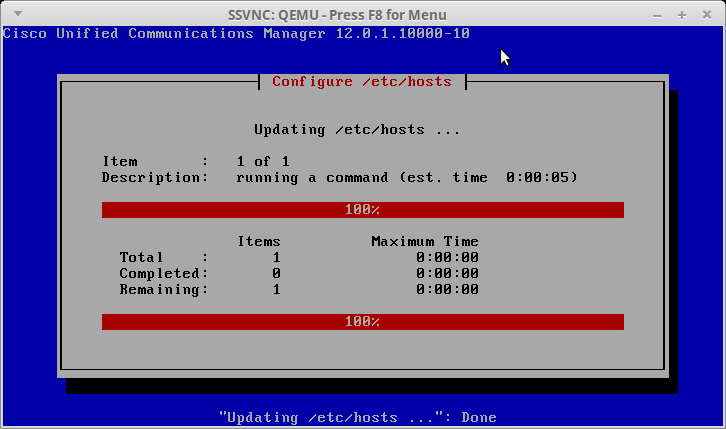

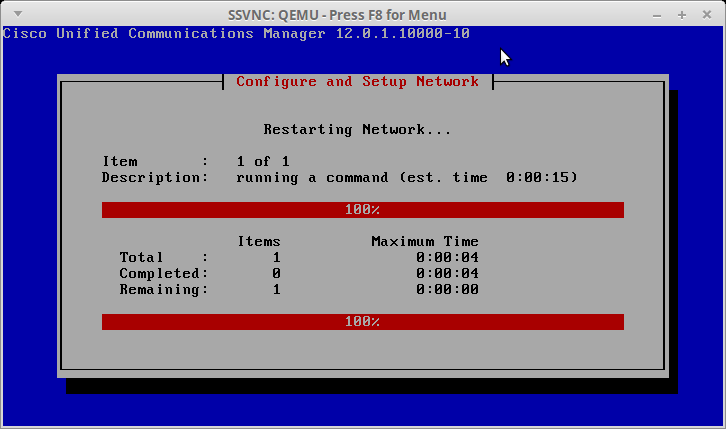

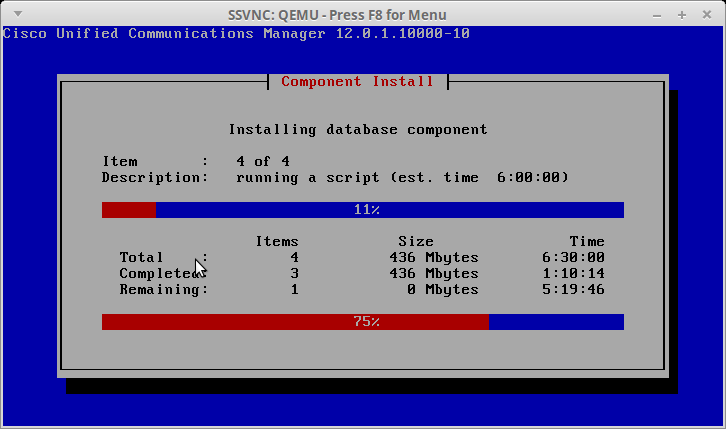

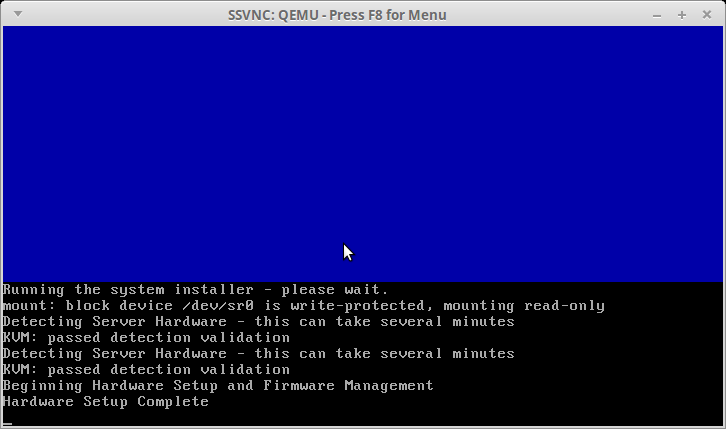

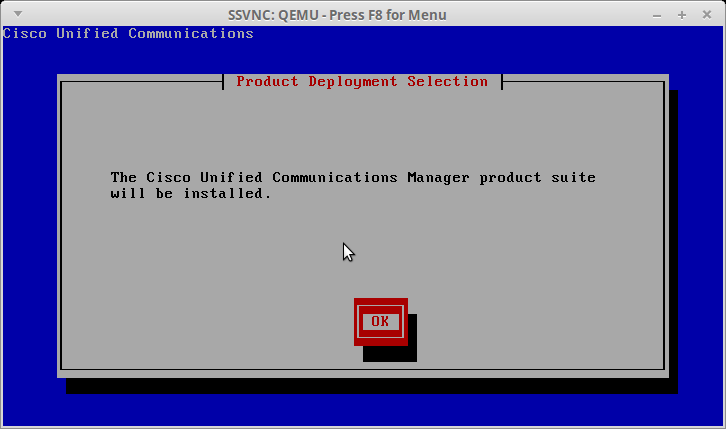

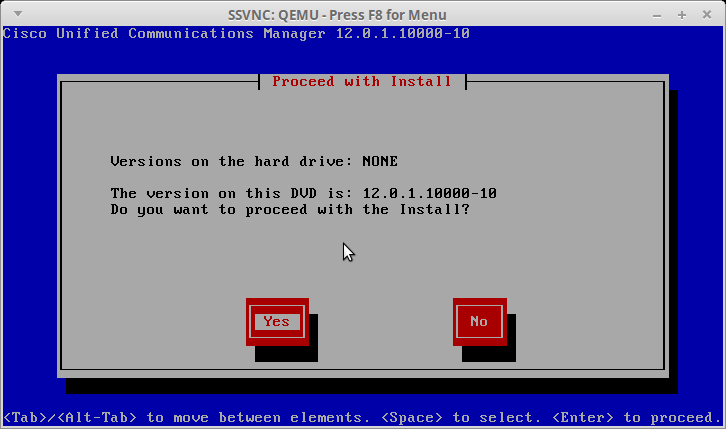

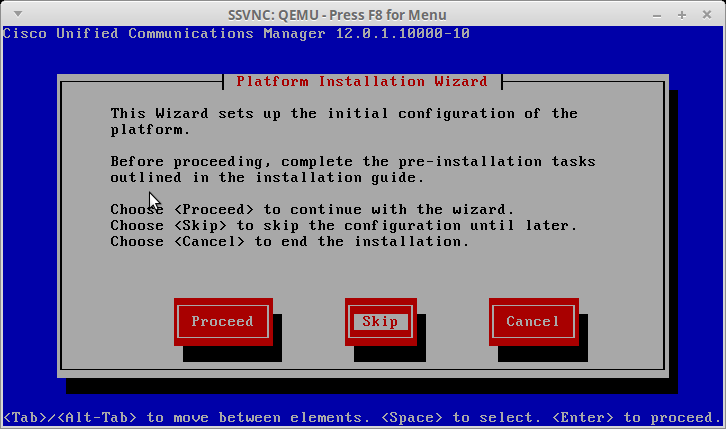

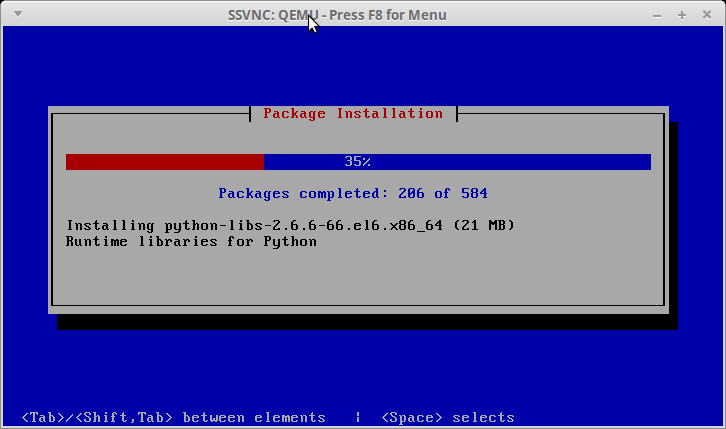

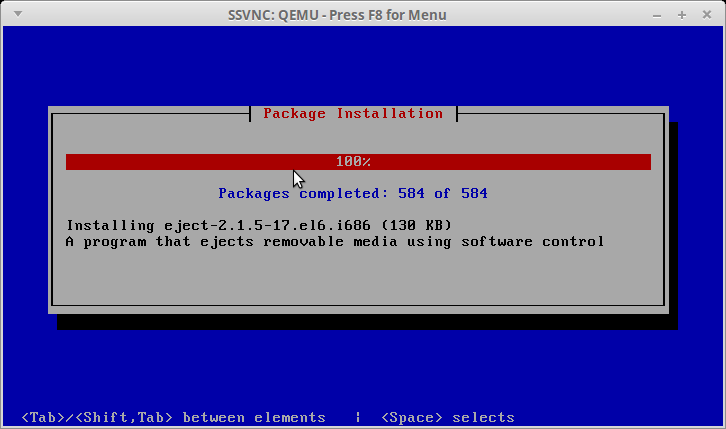

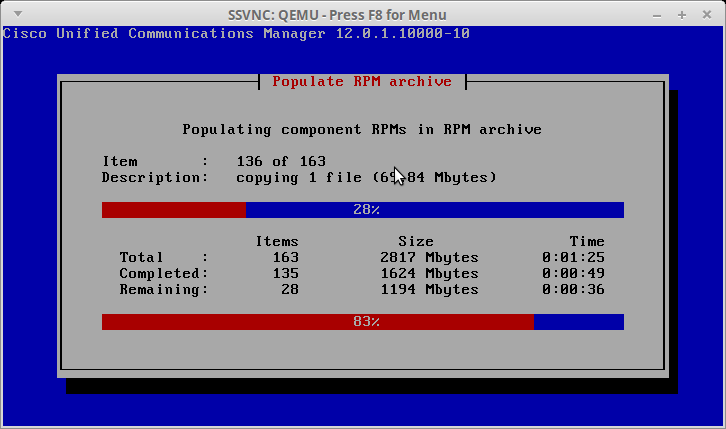

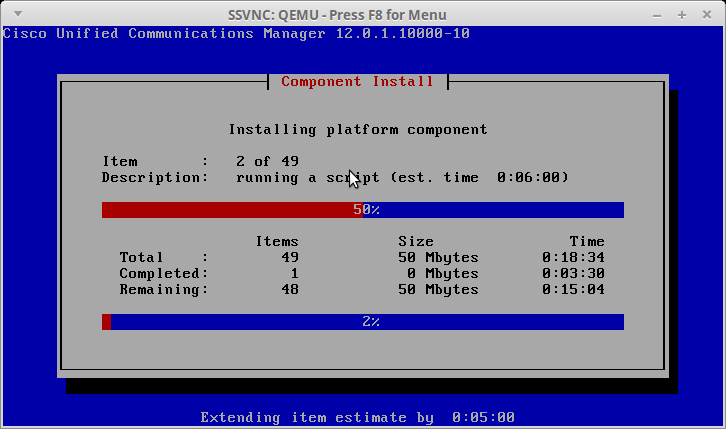

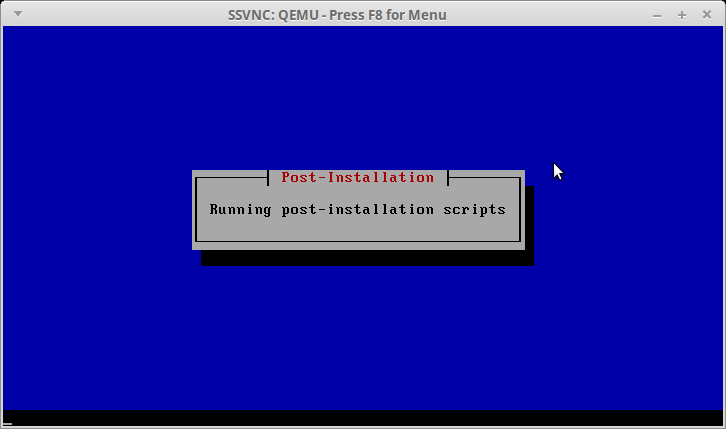

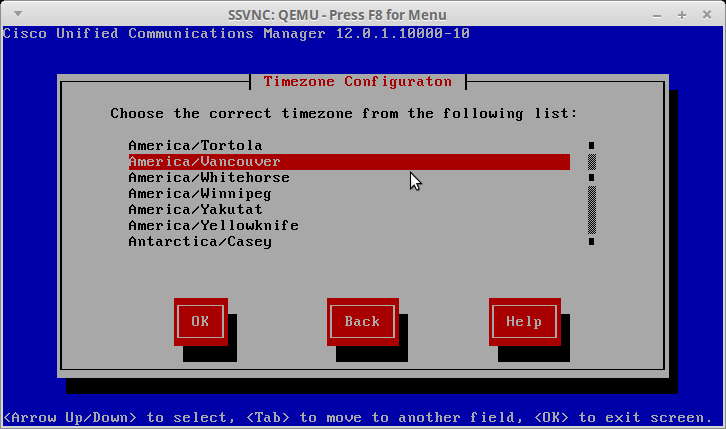

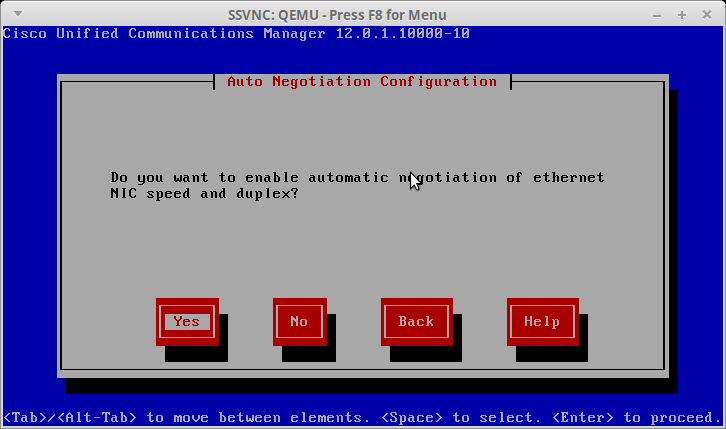

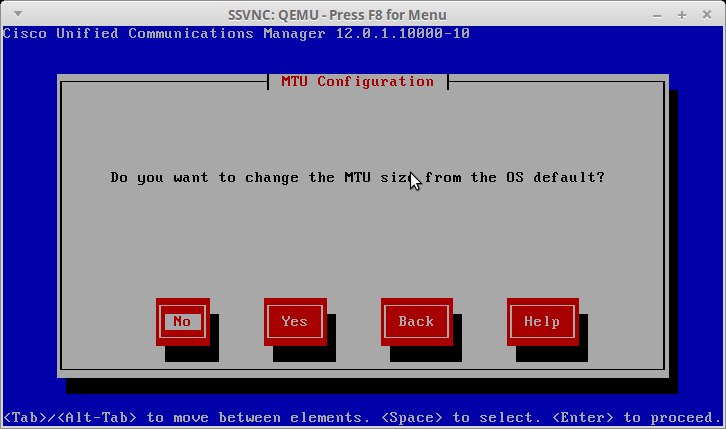

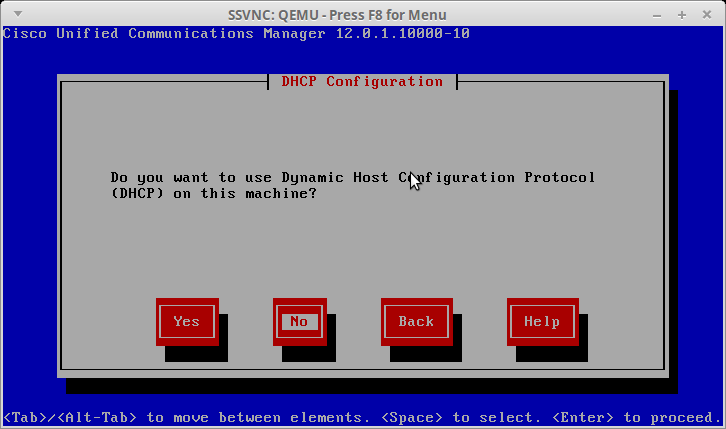

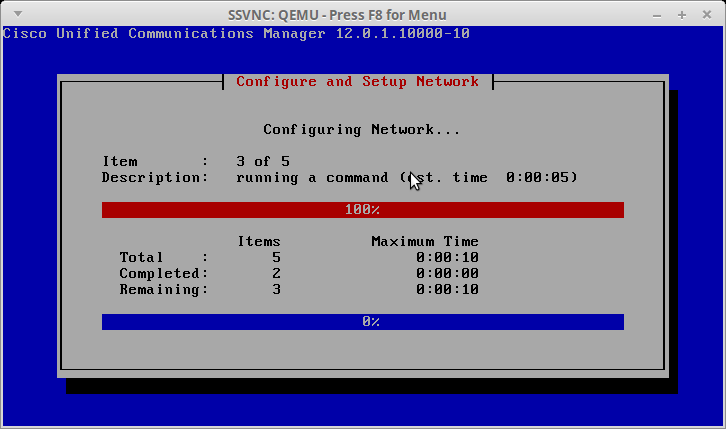

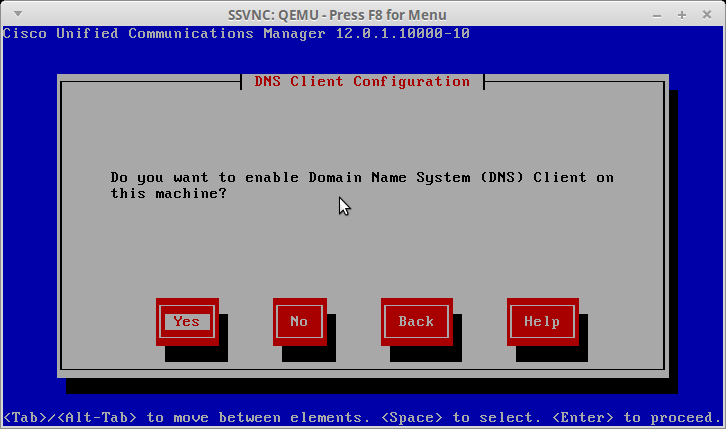

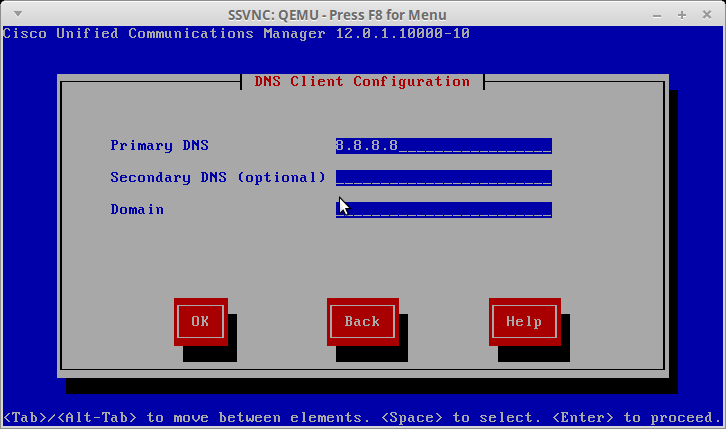

This is a quick overview of the main screens that you will need to go through to do the Cisco Unified Communication Manager (CUCM) installation.

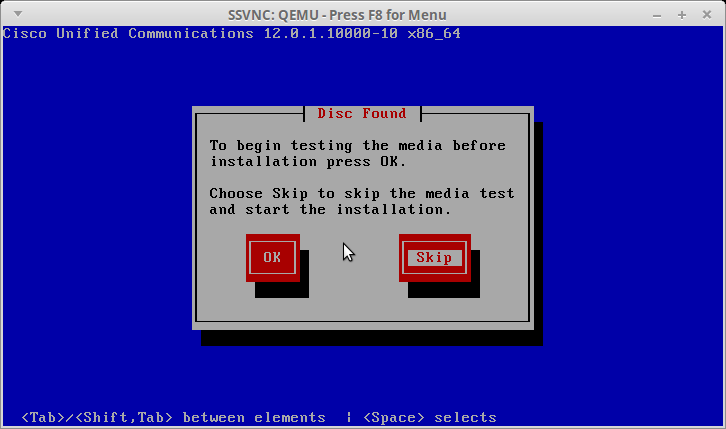

Skip the media test

Skip the Platform Installation Wizard (we'll do it later)

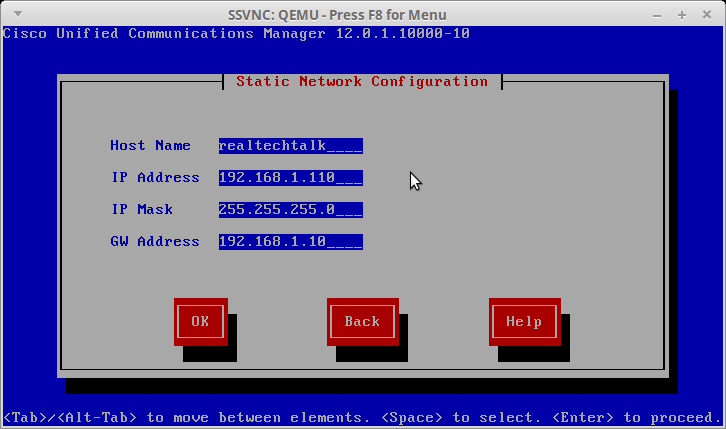

Set the relevant hostname and IP address information

Remember that the below is just an example. Make sure you set a valid IP for your network.

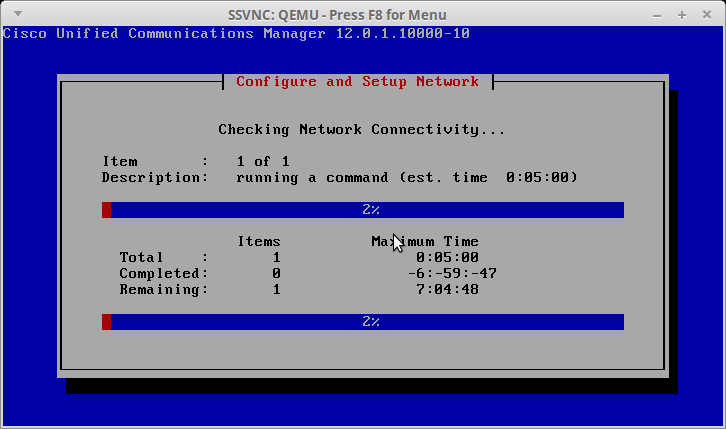

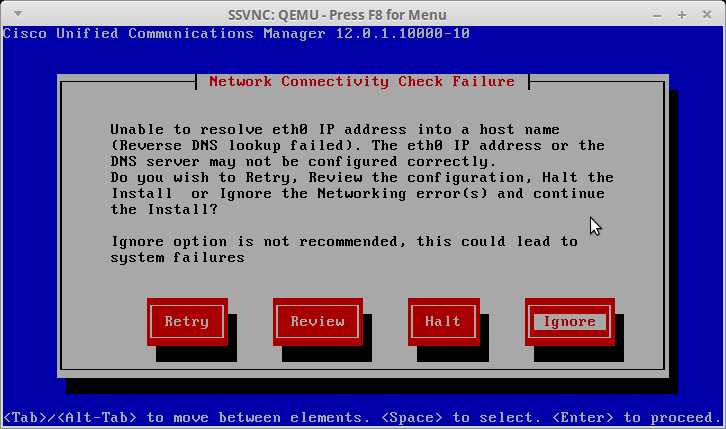

Don't worry about a DNS error exactly like the one below

If you get the exact error below, notice that it is complaining about "Reverse DNS lookup failed". You can safely proceed and continue the install, or if you control your own DNS, you can add a reverse entry for your IP to avoid this error.